Fyrox Game Engine Book

Practical reference and user guides for Fyrox Game Engine and its editor FyroxEd.

⚠️ Tip: If you want to start using the engine as fast as possible, read this chapter.

Warning: The book is at an early stage of development; you can help improve it by contributing to its repository. Don't be shy, every tip is helpful!

Engine Version

The Fyrox team tries to keep the book up to date with the latest version from the master branch. If something does not compile with the latest release from crates.io, use the latest engine from the GitHub repository.

How to read the book

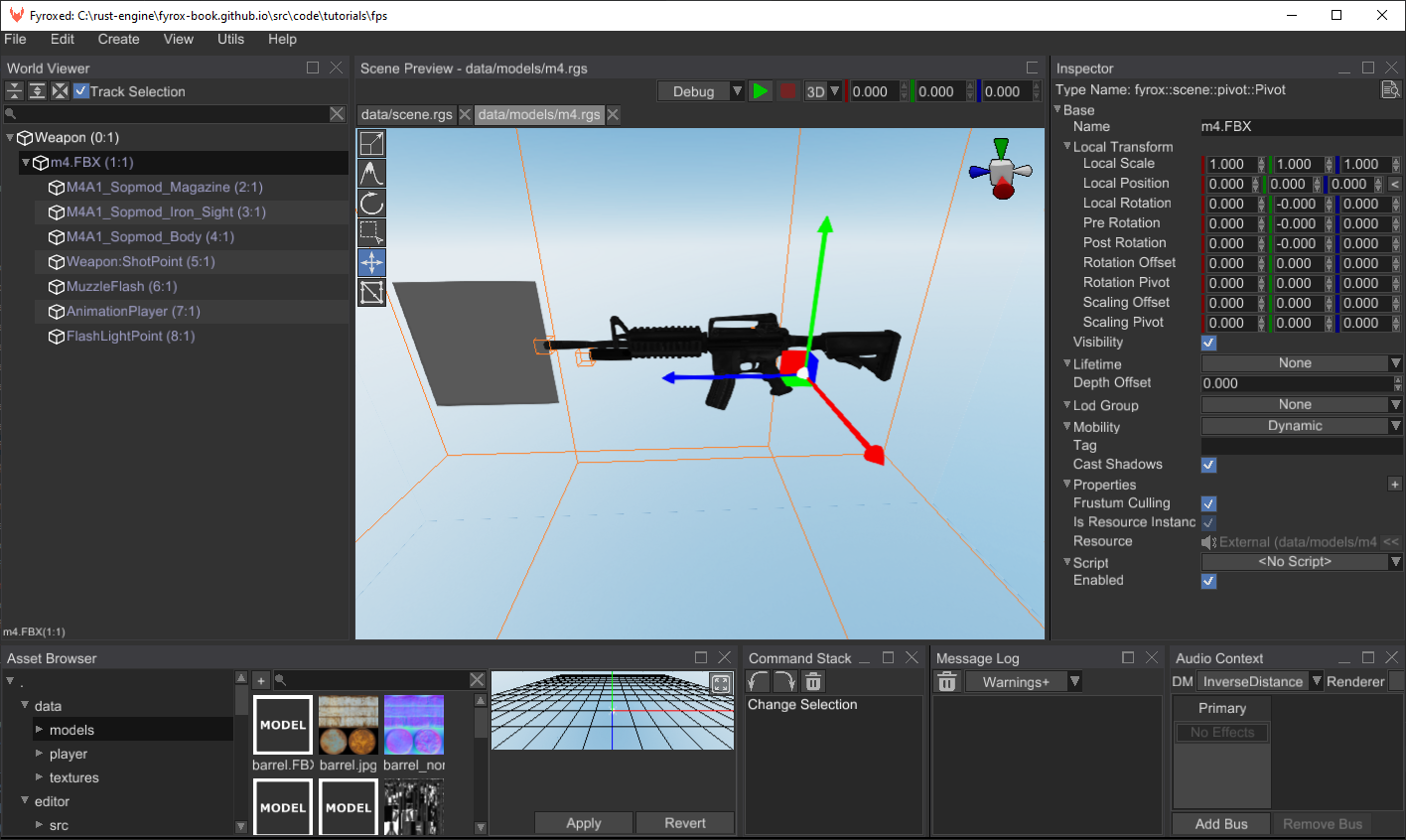

Almost every chapter in this book can be read in any order, but we recommend reading Chapters 1, 2, and 3 (they're quite small) and then going through the Platformer Tutorial (2D) while learning more about specific areas that interest you from the other chapters. There are also a First-Person Shooter Tutorial (3D) and an RPG Tutorial (3D).

API Documentation

The book is primarily focused on game development with Fyrox, not on its API. You can find API docs here.

Required knowledge

We expect that you know the basics of the Rust programming language and its package manager, Cargo. It is also necessary to know the basics of game development, linear algebra, and software development principles and patterns; otherwise, the book will probably be very hard for you.

Support the development

The future of the project depends entirely on community support; every bit is important!

Introduction

This section of the book contains a brief overview of the engine's features. It should help you decide whether the engine suits your needs and whether it will be easy enough for you to use. The following chapters take you on a tour of the engine's features, its editor, basic concepts, and design philosophy.

Introduction to Fyrox

Fyrox is a feature-rich, general-purpose game engine that is suitable for any kind of game. It can power games with small- or medium-sized worlds; large worlds will most likely require some manual work.

Games made with the engine can run on desktop platforms (Windows, macOS, Linux), the Web (WebAssembly), and Android.

What can the engine do?

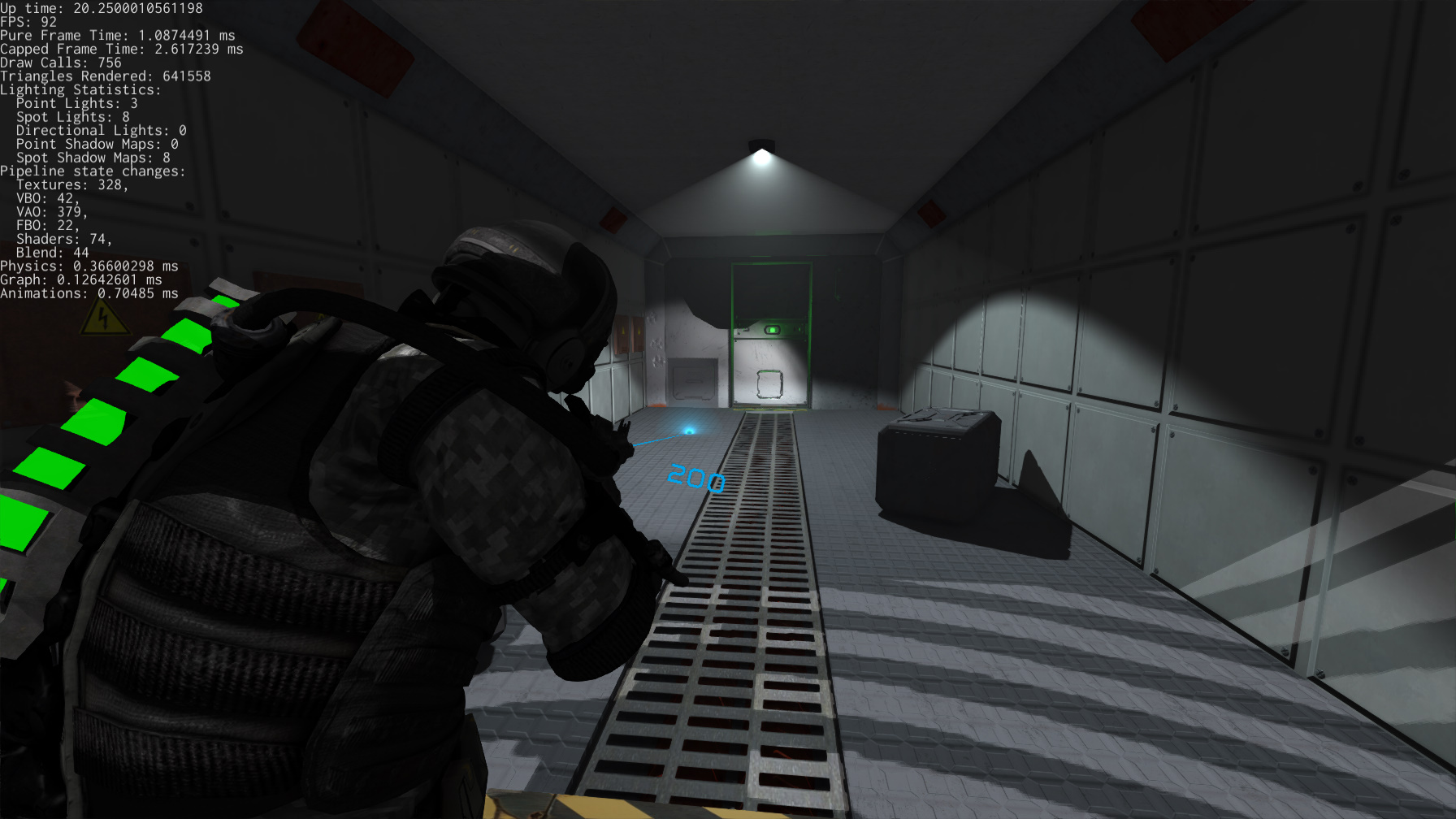

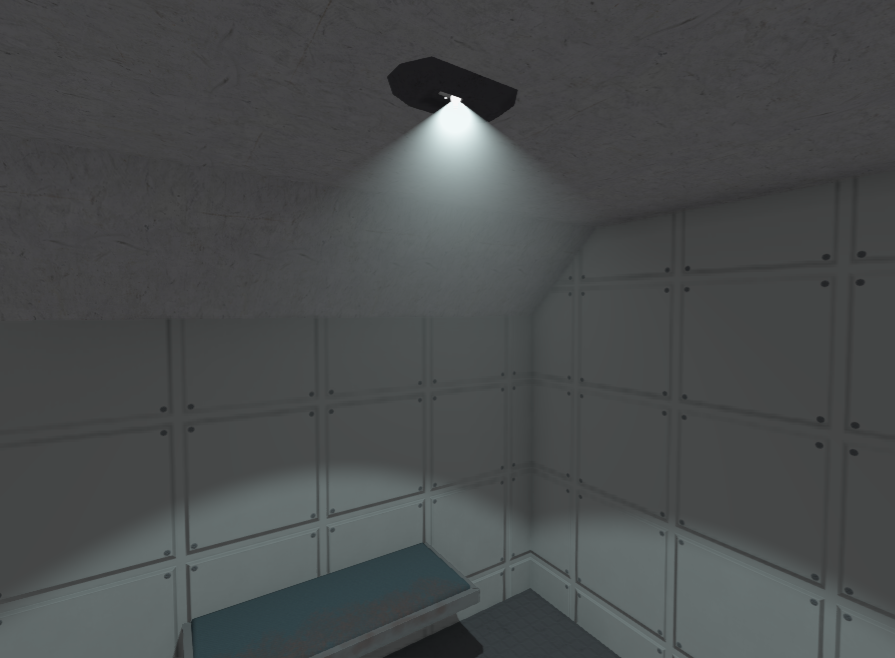

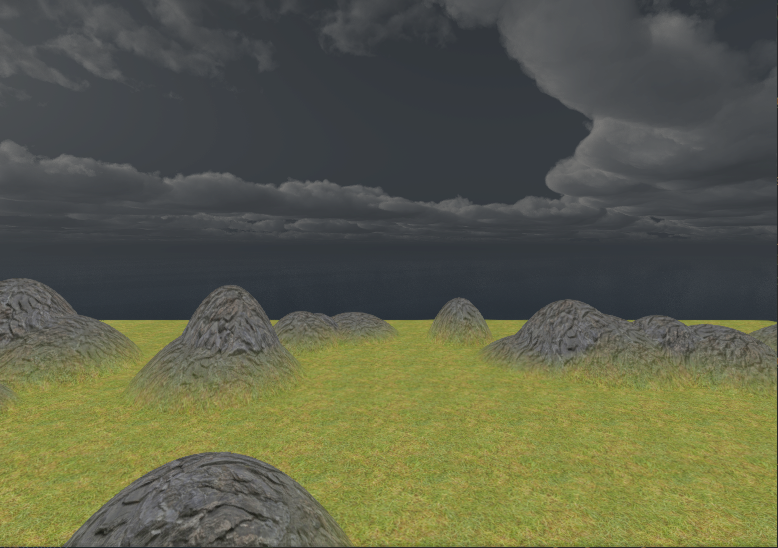

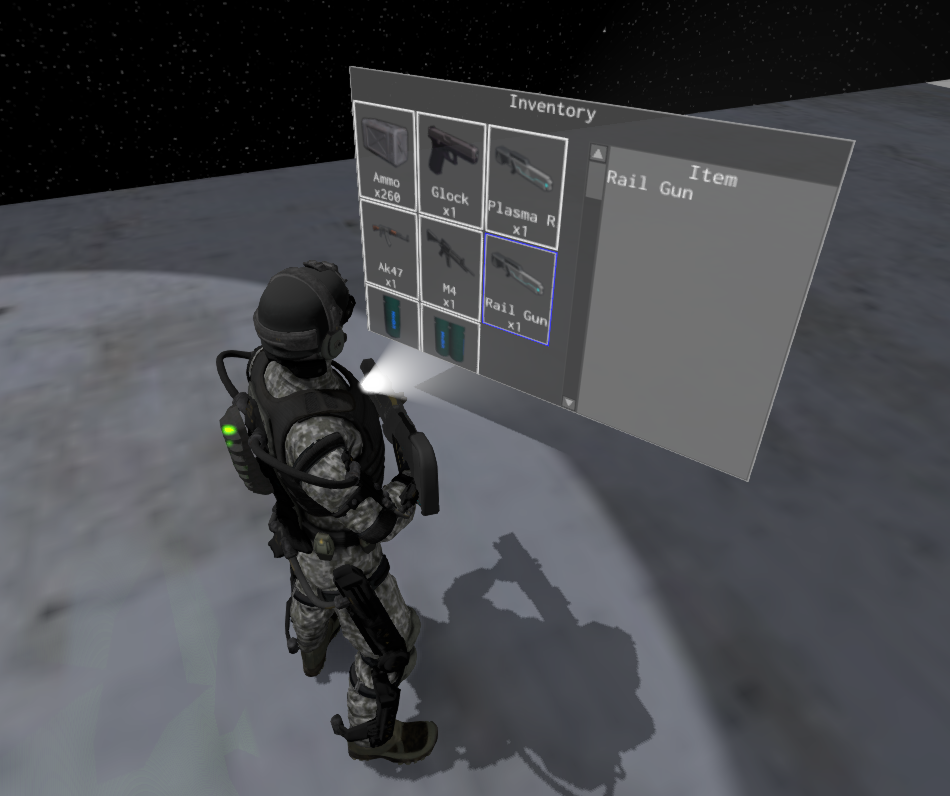

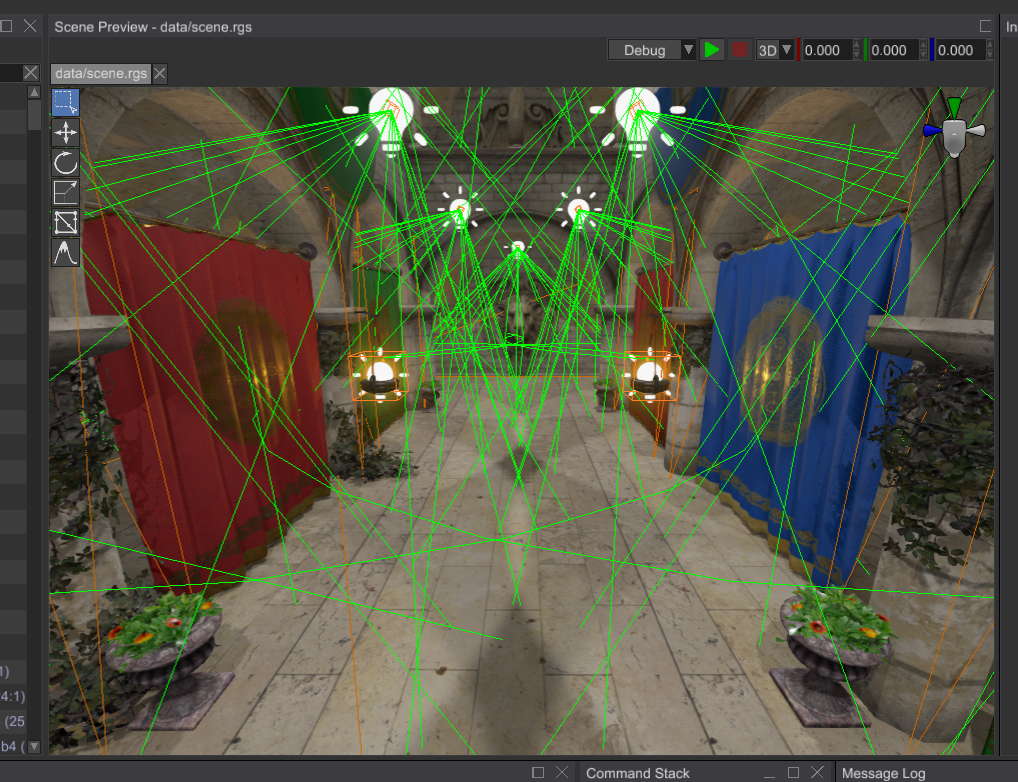

You can create pretty much any kind of game or interactive applications. Here's some examples of what the engine can do:

How does the engine work?

The engine consists of two parts that you'll be actively using: the framework and the editor. The framework is the foundation of the engine; it manages rendering, sound, scripts, plugins, etc. The editor, in turn, contains lots of tools that can be used to create game worlds, manage assets, edit game objects, scripts, and more.

Programming languages

Your entire game can be written in Rust, taking advantage of its safety guarantees as well as its speed. It is also possible to use any scripting language you want, but there is no built-in support for that, so you will need to integrate it manually.

Engine Features

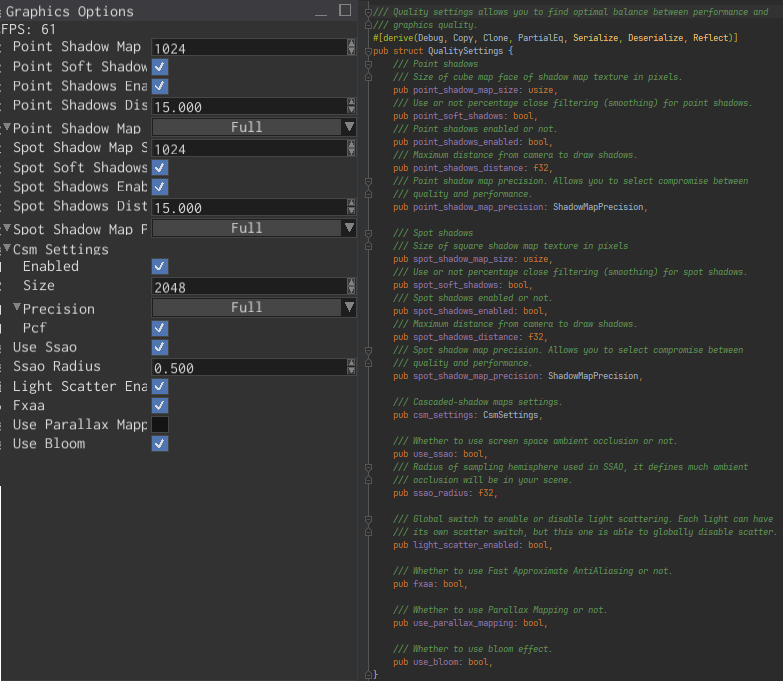

This is a more or less complete (though possibly outdated) list of engine features:

General

- Exceptional safety, reliability, and speed.

- PC (Windows, Linux, macOS), Android, Web (WebAssembly) support.

- Modern, PBR rendering pipeline.

- Comprehensive documentation.

- Guide book.

- 2D support.

- Integrated editor.

- Fast iterative compilation.

- Classic object-oriented design.

- Lots of examples.

Rendering

- Custom shaders, materials, and rendering techniques.

- Physically-based rendering.

- Metallic workflow.

- High dynamic range (HDR) rendering.

- Tone mapping.

- Color grading.

- Auto-exposure.

- Gamma correction.

- Deferred shading.

- Directional light.

- Point lights + shadows.

- Spotlights + shadows.

- Screen-Space Ambient Occlusion (SSAO).

- Soft shadows.

- Volumetric light (spot, point).

- Batching.

- Instancing.

- Fast Approximate Anti-Aliasing (FXAA).

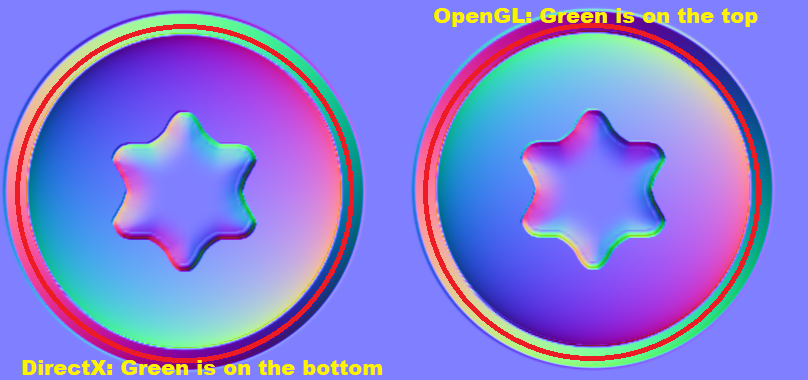

- Normal mapping.

- Parallax mapping.

- Render to texture.

- Forward rendering for transparent objects.

- Sky box.

- Deferred decals.

- Multi-camera rendering.

- Lightmapping.

- Soft particles.

- Fully customizable vertex format.

- Compressed textures support.

- High-quality mip-map on-demand generation.

Scene

- Multiple scenes.

- Full-featured scene graph.

- Level-of-detail (LOD) support.

- GPU Skinning.

- Various scene nodes:

  - Pivot.

  - Camera.

  - Decal.

  - Mesh.

  - Particle system.

  - Sprite.

  - Multilayer terrain.

  - Rectangle (2D sprites).

  - Rigid body + Rigid Body 2D.

  - Collider + Collider 2D.

  - Joint + Joint 2D.

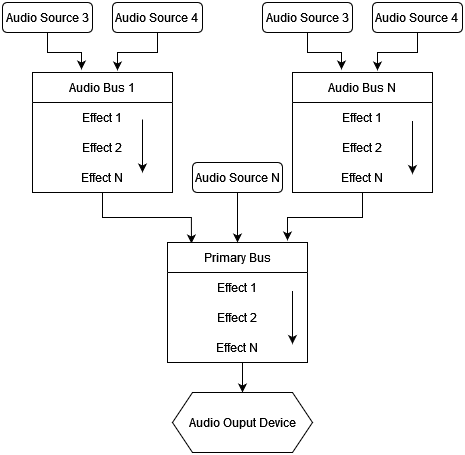

Sound

- High quality binaural sound with HRTF support.

- Generic and spatial sound sources.

- Built-in streaming for large sounds.

- Raw samples playback support.

- WAV/OGG format support.

- HRTF support for excellent positioning and binaural effects.

- Reverb effect.

Serialization

- Powerful serialization system.

- Almost every entity of the engine can be serialized.

- No need to write your own serialization.

Animation

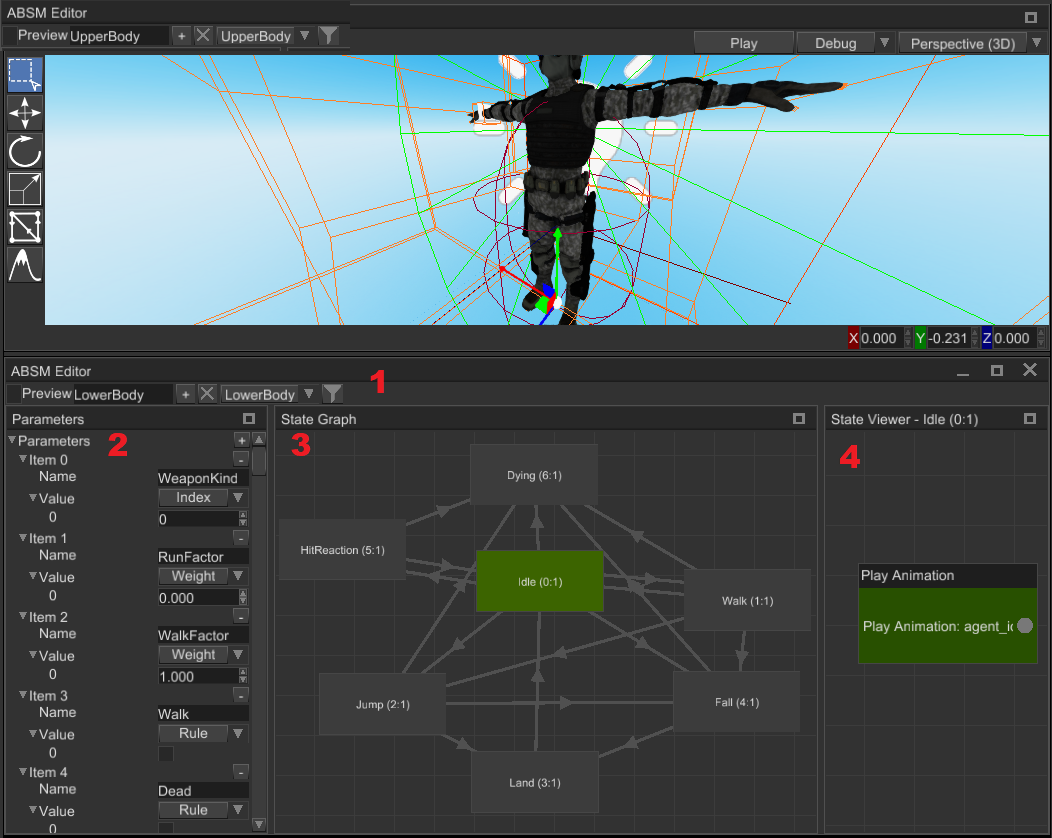

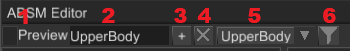

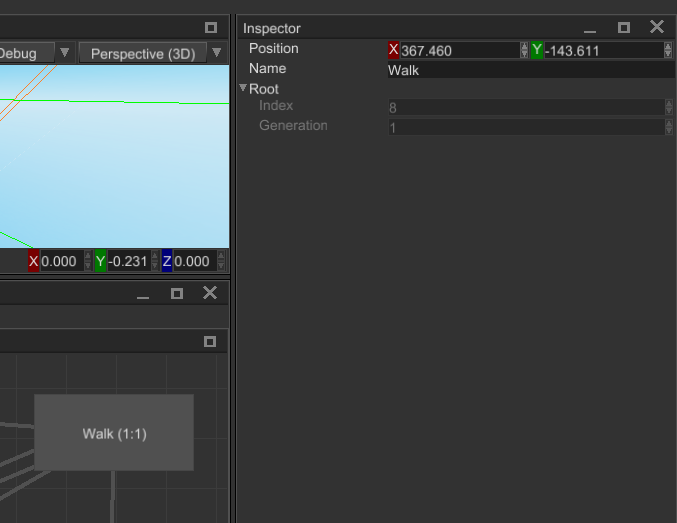

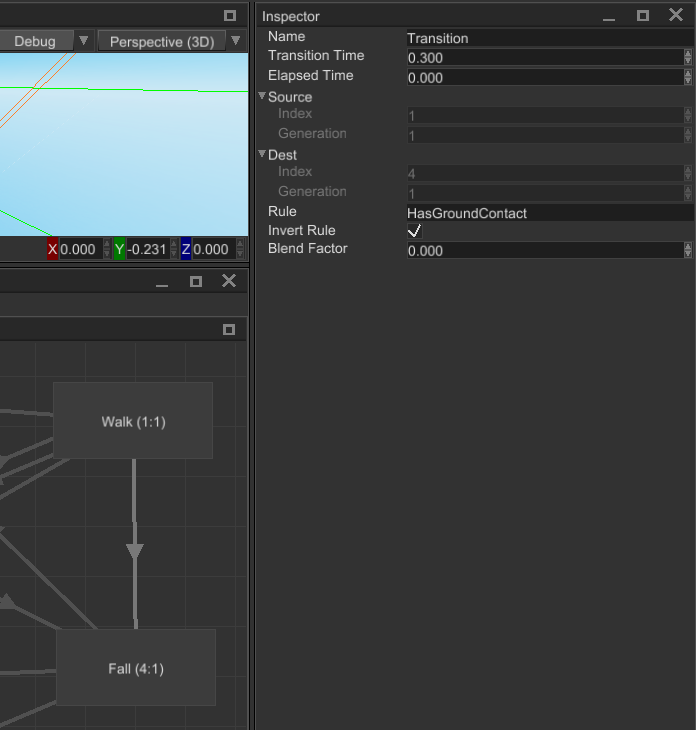

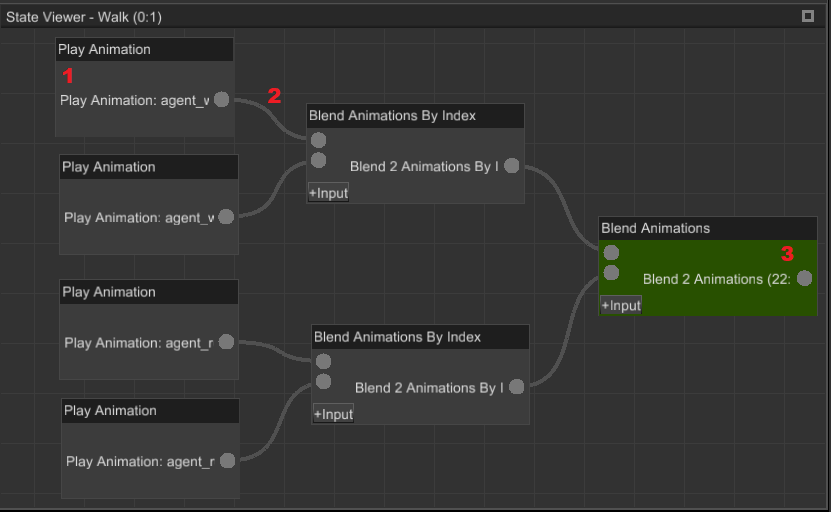

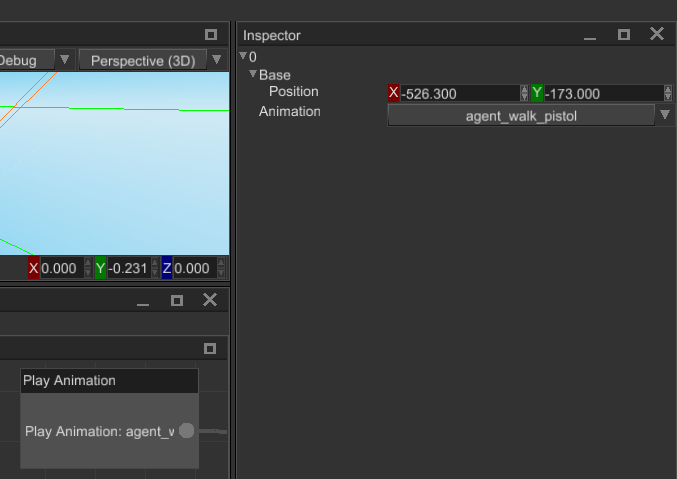

- Animation blending state machine - similar to Mecanim in the Unity engine.

- Animation retargeting - allows you to remap animation from one model to another.

Asset management

- Advanced asset manager.

- Fully asynchronous asset loading.

- PNG, JPG, TGA, DDS, etc. textures.

- FBX models loader.

- WAV, OGG sound formats.

- Compressed textures support (DXT1, DXT3, DXT5).

Artificial Intelligence (AI)

- A* pathfinder.

- Navmesh.

- Behavior trees.

User Interface (UI)

- Advanced node-based UI with lots of widgets.

- More than 32 widgets.

- Powerful layout system.

- Full TTF/OTF fonts support.

- Based on message passing.

- Fully customizable.

- GAPI-agnostic.

- OS-agnostic.

- Button widget.

- Border widget.

- Canvas widget.

- Color picker widget.

- Color field widget.

- Check box widget.

- Decorator widget.

- Drop-down list widget.

- Grid widget.

- Image widget.

- List view widget.

- Popup widget.

- Progress bar widget.

- Scroll bar widget.

- Scroll panel widget.

- Scroll viewer widget.

- Stack panel widget.

- Tab control widget.

- Text widget.

- Text box widget.

- Tree widget.

- Window widget.

- File browser widget.

- File selector widget.

- Docking manager widget.

- NumericUpDown widget.

- Vector3<f32> editor widget.

- Menu widget.

- Menu item widget.

- Message box widget.

- Wrap panel widget.

- Curve editor widget.

- User defined widget.

Physics

- Advanced physics (thanks to the Rapier physics engine).

- Rigid bodies.

- Rich set of various colliders.

- Joints.

- Ray cast.

- Many other useful features.

- 2D support.

System Requirements

Like any other software, Fyrox has system requirements; meeting them provides the best user experience.

- CPU - at least a dual-core CPU at 1.5 GHz per core. More is better.

- GPU - any relatively modern GPU with OpenGL 3.3+ support. If the editor fails to start, your video card most likely does not support OpenGL 3.3+. Do not try to run the editor on virtual machines; pretty much all of them have only rudimentary support for graphics APIs, which won't let you run the editor.

- RAM - at least 1 GB of RAM. More is better.

- VRAM - at least 256 MB of video memory. The actual amount depends heavily on your game.

Supported Platforms

| Platform | Engine | Editor |
|---|---|---|
| Windows | ✅ | ✅ |
| Linux | ✅ | ✅ |
| macOS | ✅¹ | ✅ |
| WebAssembly | ✅ | ❌² |
| Android | ✅ | ❌² |

- ✅ - first-class support

- ❌ - not supported

- ¹ - macOS suffers from bad GPU performance on Intel chipsets, M1+ works well.

- ² - the editor works only on PC, it requires rich filesystem functionality as well as decent threading support.

Basic concepts

Let's briefly go over some basic concepts of the engine. There aren't many, but all of them are crucial to understanding the design decisions made in the engine.

Classic OOP

The engine uses a somewhat classic OOP approach that favors composition over inheritance: complex objects in the engine are constructed from simpler objects.

Scenes

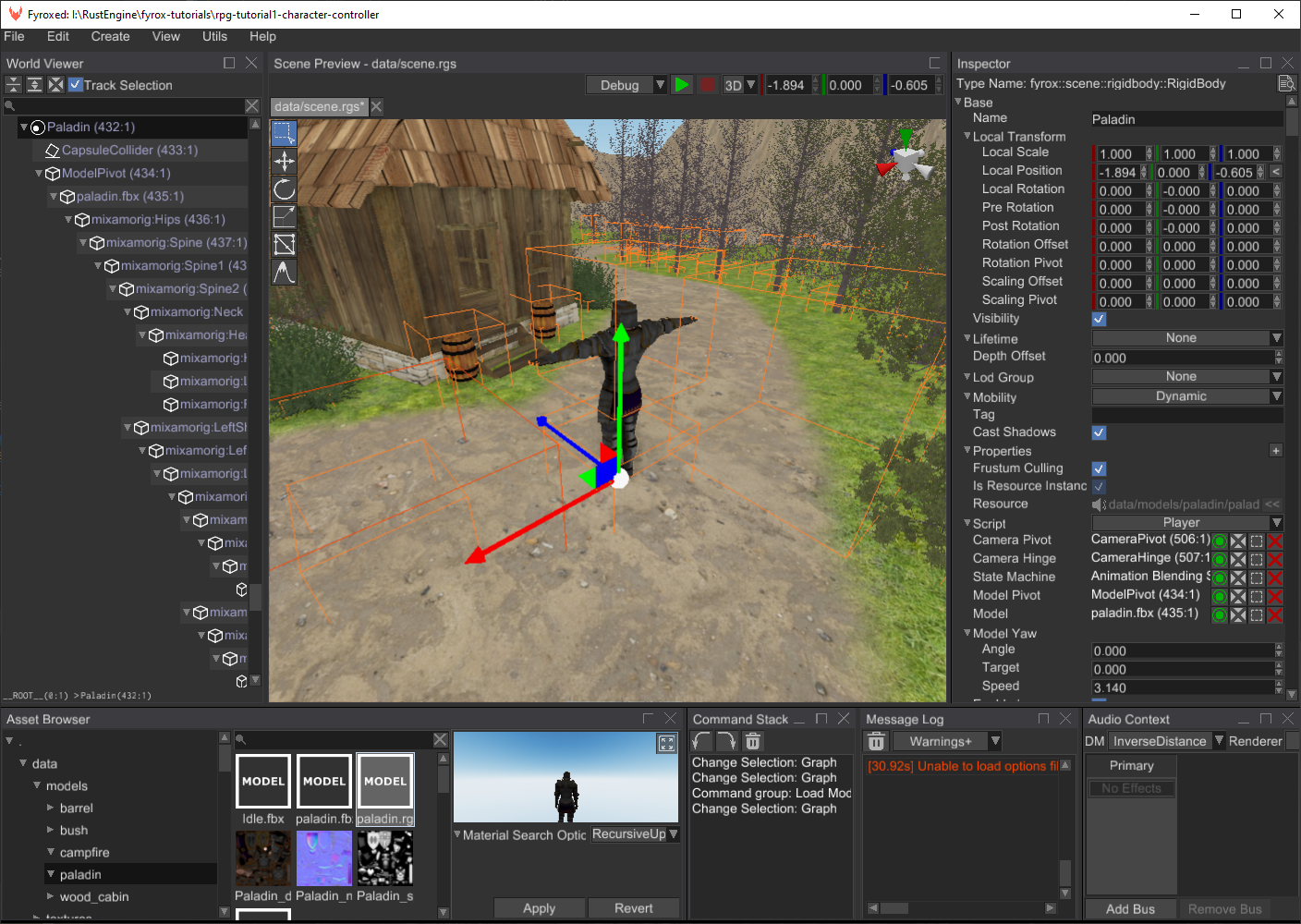

In Fyrox, you break down your game into a set of reusable scenes. Pretty much anything can be a scene: a player, a weapon, a bot, level parts, etc. Scenes can be nested inside one another, which helps you break complex scenes down into reusable parts. A scene in Fyrox also plays the role of a prefab; there's pretty much no difference between them.

Nodes and Scene Graph

A scene is made of one or more nodes (every scene must have at least one root node, to which everything else is attached). A scene node contains a specific set of properties as well as one optional script instance, which is responsible for custom game logic.

The typical structure of a scene node can be illustrated by the following example. The base object for every scene node is a Base node; it contains a transform, a list of children, etc. A more complex node that extends the functionality of the Base node stores an instance of Base inside it. For example, a Mesh node is a Base node plus some specific info (a list of surfaces, material, etc.). The "hierarchy" depth is unlimited: a Light node in the engine is an enumeration of three possible types of light source. Directional, Point, and Spot light sources all use a BaseLight node, which in turn contains a Base node inside. Graphically, it can be represented like so:

`Point`
|__ Point Light Properties (radius, etc.)
|__ `BaseLight`
    |__ Base Light Properties (color, etc.)
    |__ `Base`
        |__ Base Node Properties (transform, children nodes, etc.)

As you can see, this forms a nice tree (graph) that shows what the object contains. This is a very natural way of describing scene nodes, and it gives you the full power to build an object of any complexity.
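The composition chain from the diagram can be sketched in plain Rust. This is a minimal illustration under stated assumptions: the type and field names (`Base`, `BaseLight`, `PointLight`, `SpotLight`, `DirectionalLight`, `Light`, `position`, `color`, `radius`, `cone_angle`) are hypothetical stand-ins chosen to mirror the diagram, not the engine's actual definitions, and the transform is reduced to a single position.

```rust
// Hypothetical, simplified types mirroring the diagram above;
// they are NOT the engine's actual node definitions.

/// Properties shared by every node (transform reduced to a position).
#[derive(Debug, Clone, PartialEq)]
pub struct Base {
    pub name: String,
    pub position: [f32; 3],
}

/// Properties shared by every light source; it contains a `Base`.
#[derive(Debug, Clone, PartialEq)]
pub struct BaseLight {
    pub base: Base,
    pub color: [u8; 3],
}

#[derive(Debug, Clone, PartialEq)]
pub struct PointLight {
    pub base_light: BaseLight,
    pub radius: f32,
}

#[derive(Debug, Clone, PartialEq)]
pub struct SpotLight {
    pub base_light: BaseLight,
    pub cone_angle: f32,
}

#[derive(Debug, Clone, PartialEq)]
pub struct DirectionalLight {
    pub base_light: BaseLight,
}

/// A light node as an enumeration of the three light types.
#[derive(Debug, Clone, PartialEq)]
pub enum Light {
    Directional(DirectionalLight),
    Point(PointLight),
    Spot(SpotLight),
}

impl Light {
    /// Shared light properties, whatever the concrete light type is.
    pub fn base_light(&self) -> &BaseLight {
        match self {
            Light::Directional(l) => &l.base_light,
            Light::Point(l) => &l.base_light,
            Light::Spot(l) => &l.base_light,
        }
    }

    /// Shared node properties, one level further down the composition chain.
    pub fn base(&self) -> &Base {
        &self.base_light().base
    }
}
```

Each layer simply owns the layer below it, so shared data is reached by following fields; the `base()` accessor shows how code that only cares about common properties can ignore which light variant it holds.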

Plugins

A plugin is a container for "global" game data and logic; its main purpose is to provide scripts with some data and to manage the global game state.

Scripts

A script is a separate piece of data and logic that can be attached to scene nodes. This is the primary (but not the only) way of adding custom game logic.
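The split between plugin-owned global state and per-node scripts can be illustrated with a small, self-contained sketch. Everything here is hypothetical: `GamePlugin`, `ScriptContext`, `Script`, `Coin`, `Node`, and `update_scripts` are illustrative names, not Fyrox's plugin or script API, which has a different and richer interface.

```rust
/// Hypothetical plugin: owns "global" game data shared by all scripts.
#[derive(Default)]
pub struct GamePlugin {
    pub score: u32,
}

/// Hypothetical context handed to a script while it runs.
pub struct ScriptContext<'a> {
    pub plugin: &'a mut GamePlugin,
}

/// Hypothetical script interface: per-node data plus logic.
pub trait Script {
    fn on_update(&mut self, ctx: &mut ScriptContext);
}

/// A script that adds its value to the global score exactly once.
pub struct Coin {
    pub value: u32,
    pub collected: bool,
}

impl Script for Coin {
    fn on_update(&mut self, ctx: &mut ScriptContext) {
        if !self.collected {
            ctx.plugin.score += self.value;
            self.collected = true;
        }
    }
}

/// A scene node with one optional script instance.
pub struct Node {
    pub name: String,
    pub script: Option<Box<dyn Script>>,
}

/// Runs every attached script once, giving each access to the plugin.
pub fn update_scripts(nodes: &mut [Node], plugin: &mut GamePlugin) {
    for node in nodes.iter_mut() {
        if let Some(script) = node.script.as_mut() {
            let mut ctx = ScriptContext { plugin: &mut *plugin };
            script.on_update(&mut ctx);
        }
    }
}
```

The script keeps its own per-node state (`collected`), while the value it contributes ends up in data owned by the plugin, which is the division of responsibilities described above.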

Design Philosophy and Goals

Let's talk a bit about the design philosophy and goals of the engine. Development of the engine started at the beginning of 2019 as a hobby project to learn Rust, and it quickly became clear that Rust could be a game changer in the game development industry. Initially, the engine was just a port of an engine written in C. At first, it was very interesting to build something as complex as a game engine in such a low-level language without any safety guarantees. After a year of development, it became annoying to fix memory-related issues (memory corruption, leaks, etc.). Luckily, at that time Rust's popularity grew, and it appeared on my radar. I (@mrDIMAS) was able to port the engine to it in less than a year. Stability improved dramatically, with no more random crashes, and performance was at the same or better levels: the time invested in learning a new language paid off. Development speed does not degrade over time as it did in C, and a growing project is very easy to manage.

Safety

One of the main goals in the development of the engine is to provide a high level of safety. What does this mean? In short: protection from memory-safety bugs. This does not cover logic errors, but when your game is free of random crashes caused by memory unsafety, it is much easier to fix logic bugs, because you don't have to think about potentially corrupted memory.

Safety also dictates the architectural design decisions of your game: the typical callback hell that is easy to create in many other languages is very tedious to implement in Rust. It is possible, but it requires quite a lot of manual work, and the language quickly tells you that you're doing it wrong.

Performance

Game engines are usually built using system-level programming languages, which provide peak performance. Fyrox is no exception. One of its design goals is to provide high performance by default, while leaving room for custom solutions in performance-critical places.

Ease of use

Another very important goal is that the engine should be friendly to newcomers. It should lower the entry threshold, not raise it. Fyrox uses well-known and battle-tested concepts, making it easier to build games with it. On the other hand, it can still be extended with anything you need: it tries to be as good for game industry veterans as it is for newcomers.

Battle-tested

Large projects have been built with Fyrox, which helps to understand the real needs of a general-purpose game engine. This helps reveal weak spots in the design and fix them.

Frequently Asked Questions

This chapter contains answers to frequently asked questions.

Which graphics API does the engine use?

Fyrox uses OpenGL 3.3 on PC and OpenGL ES 3.0 on WebAssembly. Why? Mainly for historical reasons. Back in the day (Q4 of 2018), there weren't any good alternatives with a wide range of supported platforms. For example, wgpu didn't even exist yet, as its first version was released in January 2019. Other crates were taking their first baby steps and weren't ready for production.

Why not use alternatives now?

There is no need for it. The current implementation works and is more than good enough. So instead of focusing on replacing something that works for little to no benefit, the current focus is on adding features that are missing as well as improving existing features when needed.

Is the engine based on ECS?

No, the engine uses a mixed composition-based, object-oriented design with message passing and other approaches that best fit a particular task. Why not use ECS for everything, though? Pragmatism. Use the right tool for the job. Don't use a microscope to hammer nails.

What kinds of games can I make using Fyrox?

Pretty much any kind of game, except perhaps games with vast open worlds (since there's no built-in world streaming). In general, it depends on your game development experience.

Getting Started

This section of the book will guide you through the basics of the engine. You will learn how to create a project and use plugins, scripts, assets, and the editor. Fyrox is a modern game engine with its own scene editor that helps you edit game worlds, manage assets, and much more. By the end of this section, you'll also learn how to manage game and engine entities, how they're structured, and the basics of data management in the engine.

The next chapter will guide you through the main setup step of the engine: creating a game project using a special project generator tool.

Editor, Plugins and Scripts

Every Fyrox game is just a plugin for both the engine and the editor; this approach allows the game to run from the editor and lets you edit the game entities in it. A game can define any number of scripts, which can be assigned to scene objects to run custom game logic on them. This chapter will cover how to install the engine with its platform-specific dependencies, how to use the plugin and scripting systems, and how to run the editor.

Platform-specific Dependencies

Before starting to use the engine, make sure all required platform-specific development dependencies are installed. If you are using Windows or macOS, no additional dependencies are required other than the latest Rust with the appropriate toolchain for your platform.

Linux

On Linux, Fyrox needs the following libraries for development: libxcb-shape0, libxcb-xfixes0, libxcb1, libxkbcommon, libasound2 and the build-essential package group.

For Debian-based distros like Ubuntu, they can be installed as follows:

sudo apt install libxcb-shape0-dev libxcb-xfixes0-dev libxcb1-dev libxkbcommon-dev libasound2-dev build-essential

For NixOS, you can use a shell.nix such as the following:

{ pkgs ? import <nixpkgs> { } }:
pkgs.mkShell rec {
  nativeBuildInputs = with pkgs.buildPackages; [
    pkg-config
    xorg.libxcb
    alsa-lib
    wayland
    libxkbcommon
    libGL
  ];
  shellHook = with pkgs.lib; ''
    export LD_LIBRARY_PATH=${makeLibraryPath nativeBuildInputs}:/run/opengl-driver/lib:$LD_LIBRARY_PATH
  '';
}

Quick Start

Run the following commands to start using the editor as quickly as possible.

cargo install fyrox-template
fyrox-template init --name fyrox_test --style 2d
cd fyrox_test
cargo run --package editor --release

Project Generator

Fyrox plugins are static: if the source code of the game changes, it must be recompiled. This architecture requires some boilerplate code. Fyrox offers a special tiny tool, fyrox-template, that helps generate all this boilerplate with a single command. Install it by running the following command:

cargo install fyrox-template

Note for Linux: This installs it in $HOME/.cargo/bin. If you receive errors about the fyrox-template command not being found, add this hidden cargo bin folder to your operating system's $PATH environment variable.

Now, navigate to the desired project folder and run the following command:

fyrox-template init --name my_game --style 3d

Note that unlike cargo init, this will create a new folder with the given name.

The tool accepts two arguments: a project name (--name) and a style (--style), which defines the contents of the default scene. After initializing the project, go to game/src/lib.rs; this is where the game logic is located. As you can see, fyrox-template generated quite a bit of code for you. There are comments explaining what each place is for. For more info about each method, please refer to the docs.

Once the project is generated, memorize the two commands that will help run your game in different modes:

- cargo run --package editor --release - launches the editor with your game attached. The editor allows you to run your game from it and edit its game entities. It is intended to be used only for development.

- cargo run --package executor --release - creates and runs the production binary of your game, which can be shipped (for example, to a store).

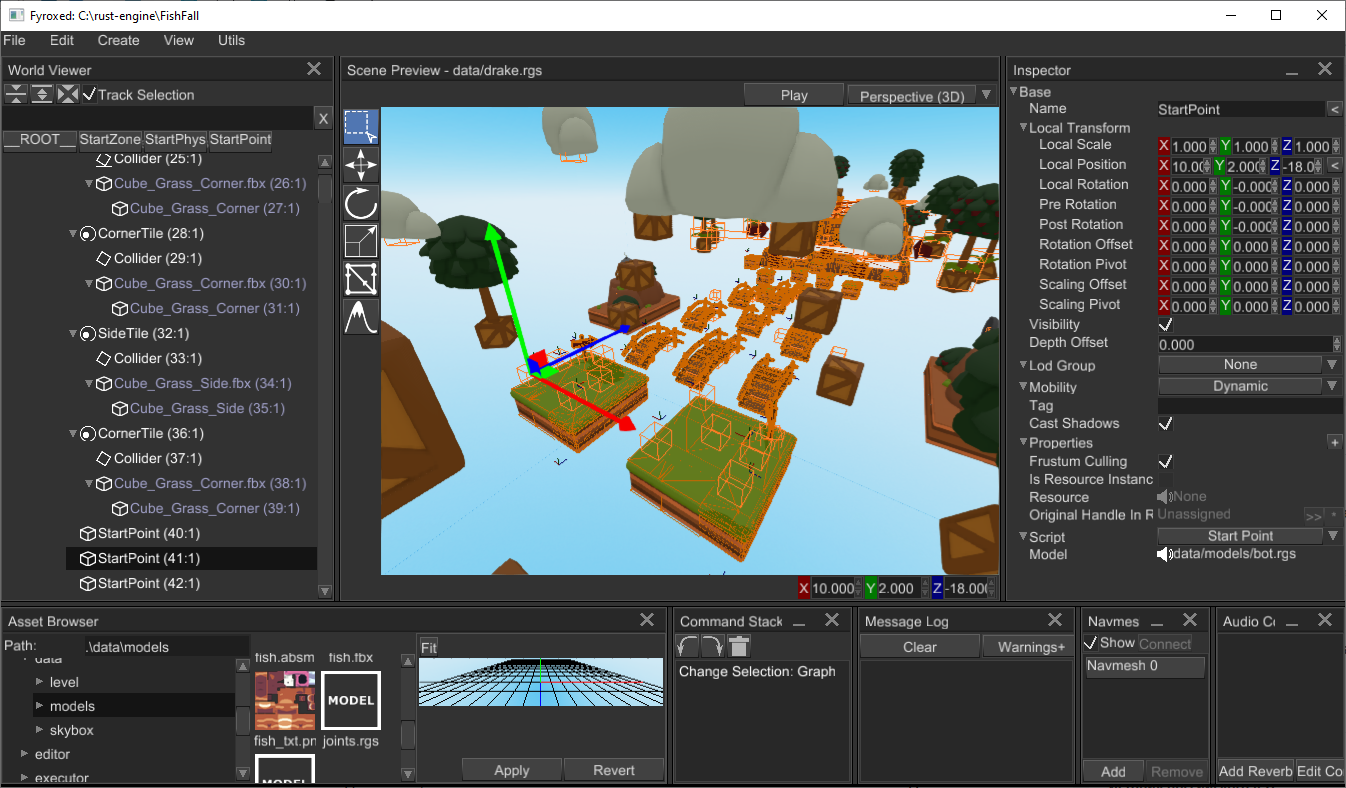

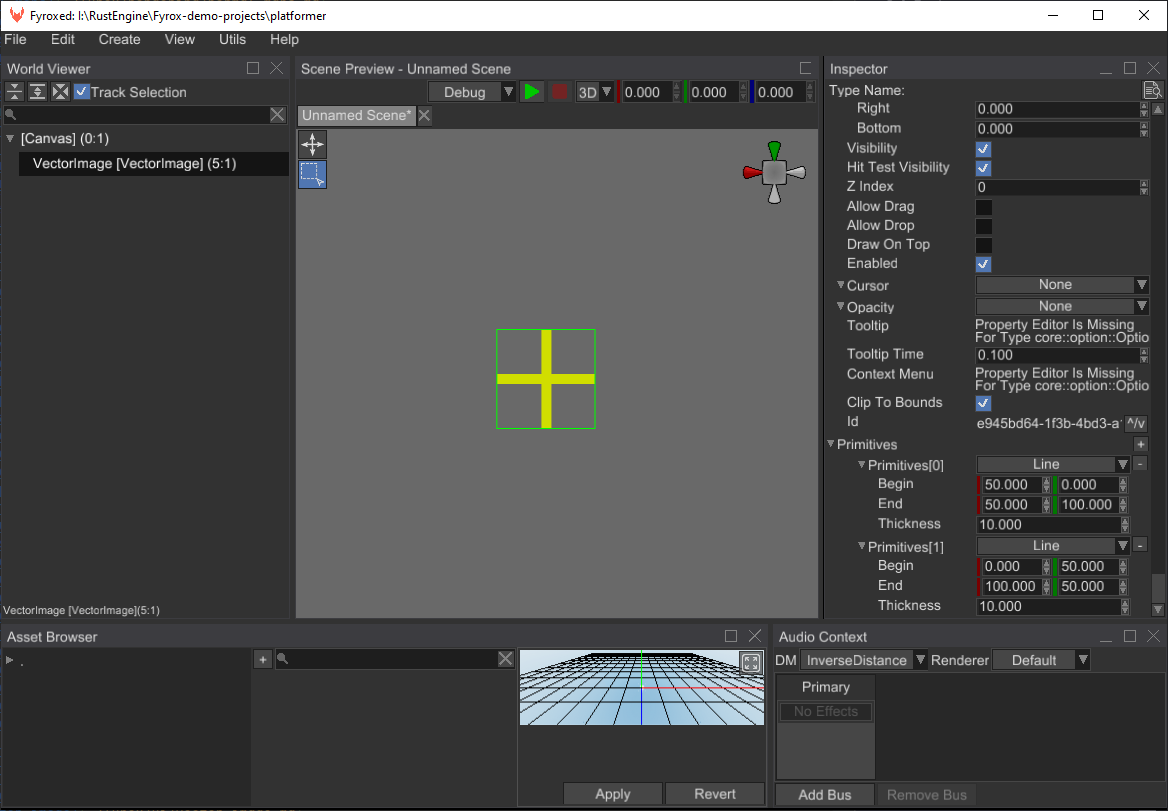

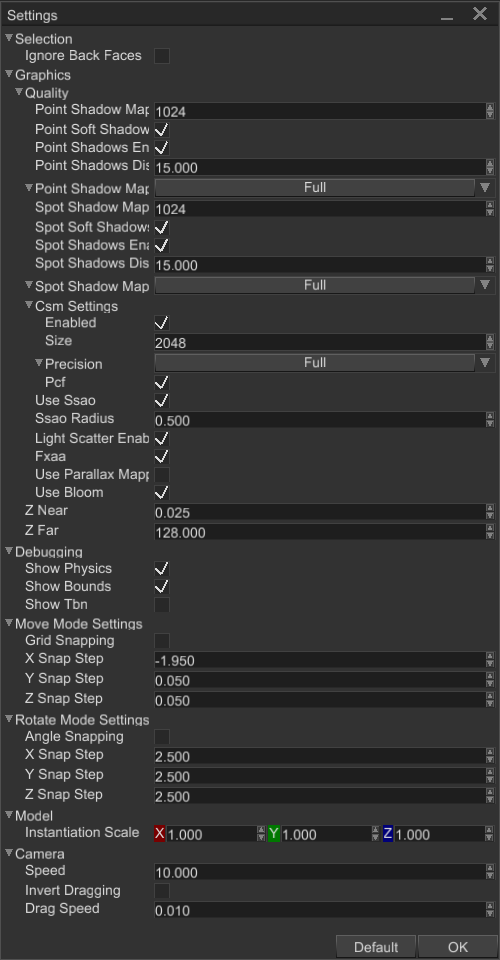

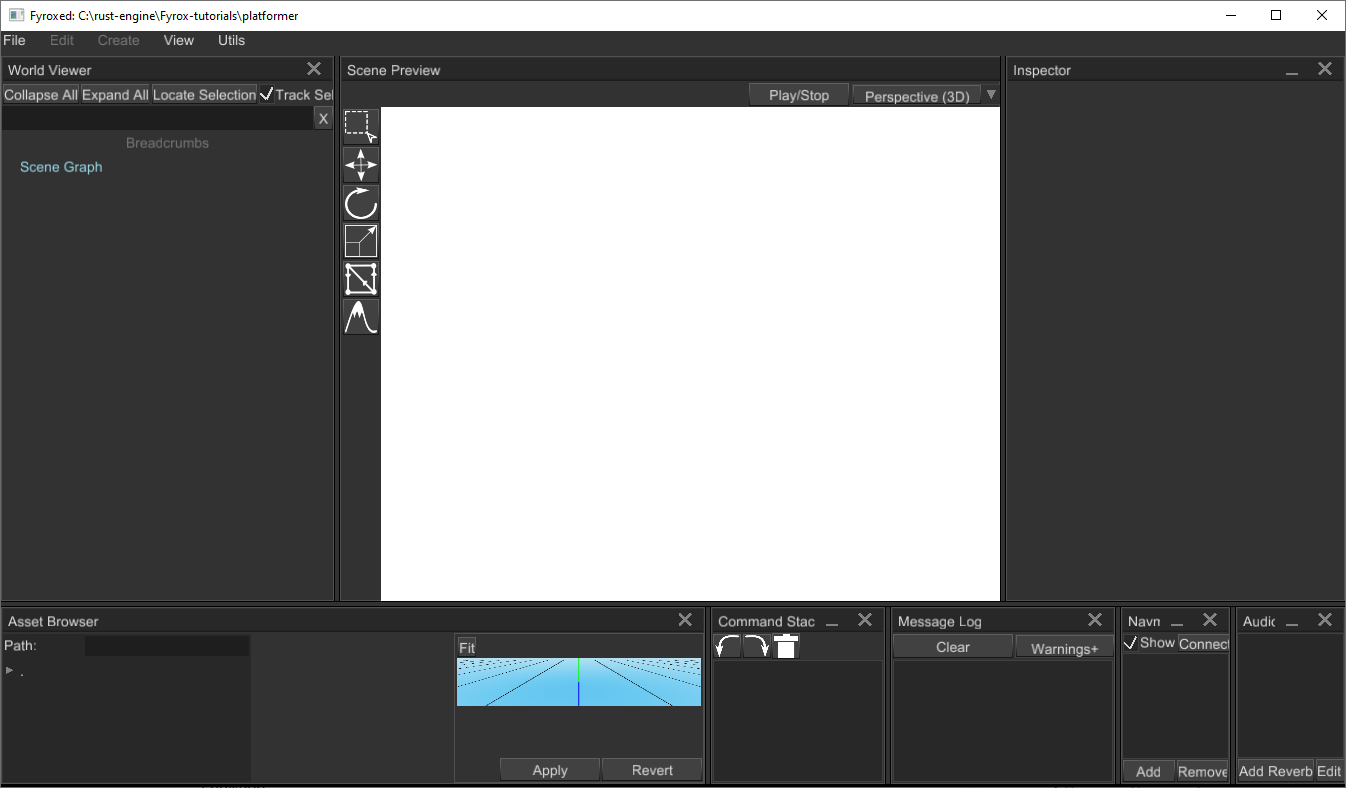

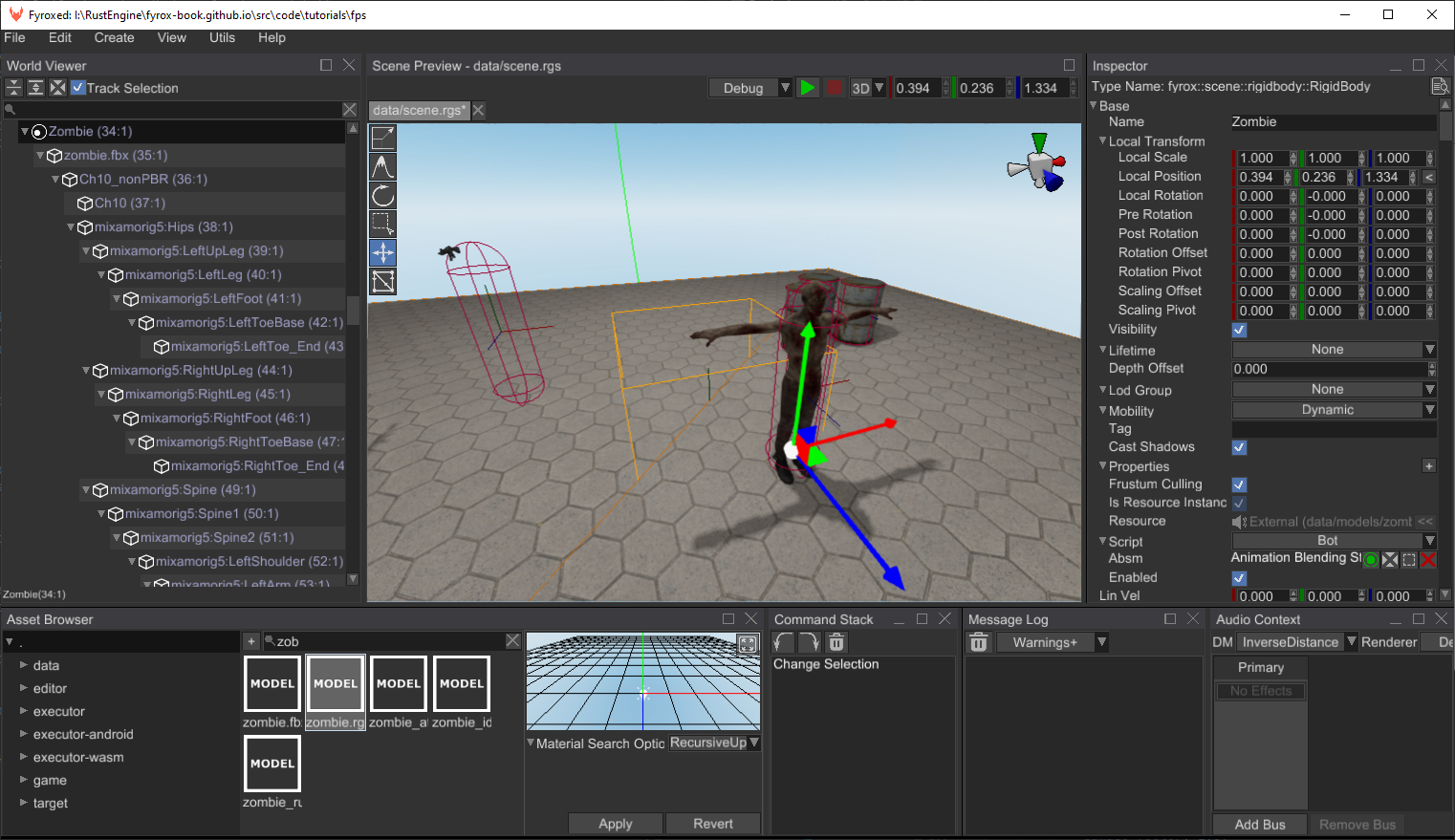

Navigate to your project's directory and run cargo run --package editor --release; after some time you should see the editor:

In the editor you can start building your game scene. Important note: your scene must have at least one camera, otherwise you won't see a thing. Read the next chapter to learn how to use the editor.

Using the Latest Engine Version

Due to the nature of software development, some bugs will inevitably sneak into major releases. Because of this, you may want to use the latest engine version from the GitHub repository, since it is the most likely to have bugs fixed (you can also contribute by fixing any bugs you find, or at least by filing an issue).

Automatic

⚠️

fyrox-template has a special sub-command, upgrade, to quickly upgrade to the desired engine version. To upgrade to the latest version (nightly), execute the fyrox-template upgrade --version nightly command in your game's directory.

There are three main variants for the --version switch:

- nightly - uses the latest nightly version of the engine directly from GitHub. This is the preferred option if you want the latest changes and bug fixes as soon as they land.

- latest - uses the latest stable version of the engine.

- major.minor.patch - uses a specific stable version from crates.io (0.30.0, for example).

Manual

The engine version can also be updated manually. The first step is to install the latest fyrox-template, which can be done with a single cargo command:

cargo install fyrox-template --force --git https://github.com/FyroxEngine/Fyrox

This ensures you're using the latest project/script template generator. This is important, since old versions of the template generator will most likely generate outdated code that is no longer compatible with the engine.

To switch existing projects to the latest version of the engine, you need to point the fyrox and fyroxed_base dependencies at the remote repository. You need to do this in the game, executor, and editor projects. First, open game/Cargo.toml and change the fyrox dependency to the following:

[dependencies]
fyrox = { git = "https://github.com/FyroxEngine/Fyrox" }

Do the same for executor/Cargo.toml. The editor has two dependencies we need to change: fyrox and fyroxed_base. Open editor/Cargo.toml and set both dependencies to the following:

[dependencies]
fyrox = { git = "https://github.com/FyroxEngine/Fyrox" }
fyroxed_base = { git = "https://github.com/FyroxEngine/Fyrox" }

Now your game will use the latest engine and editor, but beware: new commits could bring some API breaks. You can avoid these by pinning a particular commit; just add rev = "desired_commit_hash" to every dependency like so:

[dependencies]
fyrox = { git = "https://github.com/FyroxEngine/Fyrox", rev = "0195666b30562c1961a9808be38b5e5715da43af" }
fyroxed_base = { git = "https://github.com/FyroxEngine/Fyrox", rev = "0195666b30562c1961a9808be38b5e5715da43af" }

To bring your local copy of the engine up to date, just run cargo update at the root of the project's workspace. This pulls the latest changes from the remote, unless a rev is specified.

Learn more about dependency paths in the official Cargo documentation, here.

Adding Game Logic

Any object-specific game logic should be added using scripts. A script is a "container" for data and code, that will be executed by the engine. Read the Scripts chapter to learn how to create, edit, and use scripts in your game.

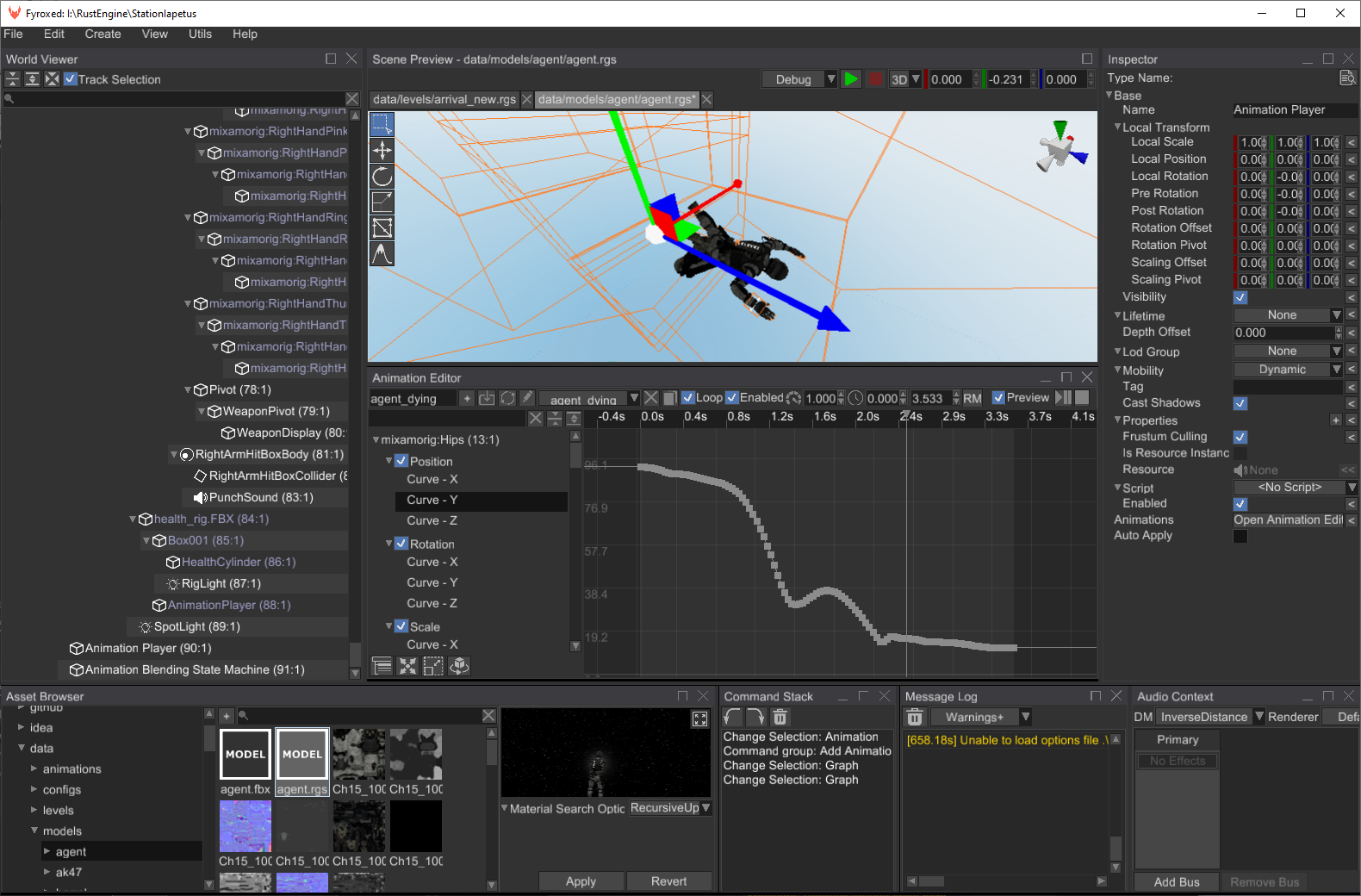

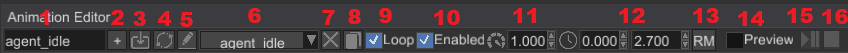

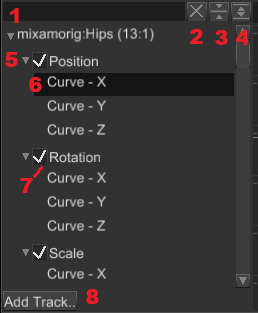

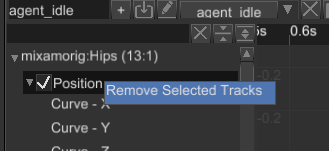

FyroxEd Overview

FyroxEd is the native editor of Fyrox. It is made with one purpose: to be an integrated game development environment that helps you build your game from start to finish with relatively low effort.

You'll be spending a lot of time in the editor, so you should get familiar with it and learn how to use its basic functionalities. This chapter will guide you through the basics, advanced topics will be covered in their respective chapters.

Windows

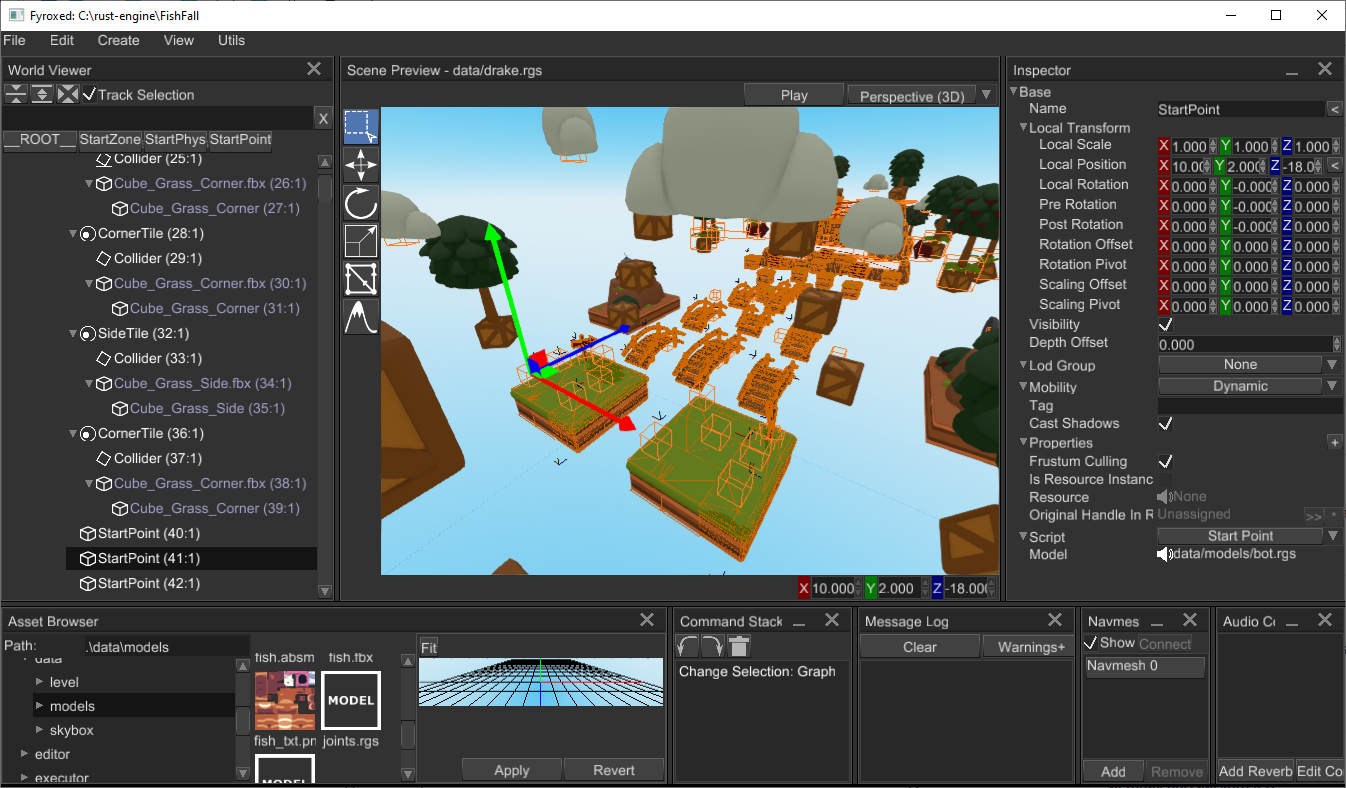

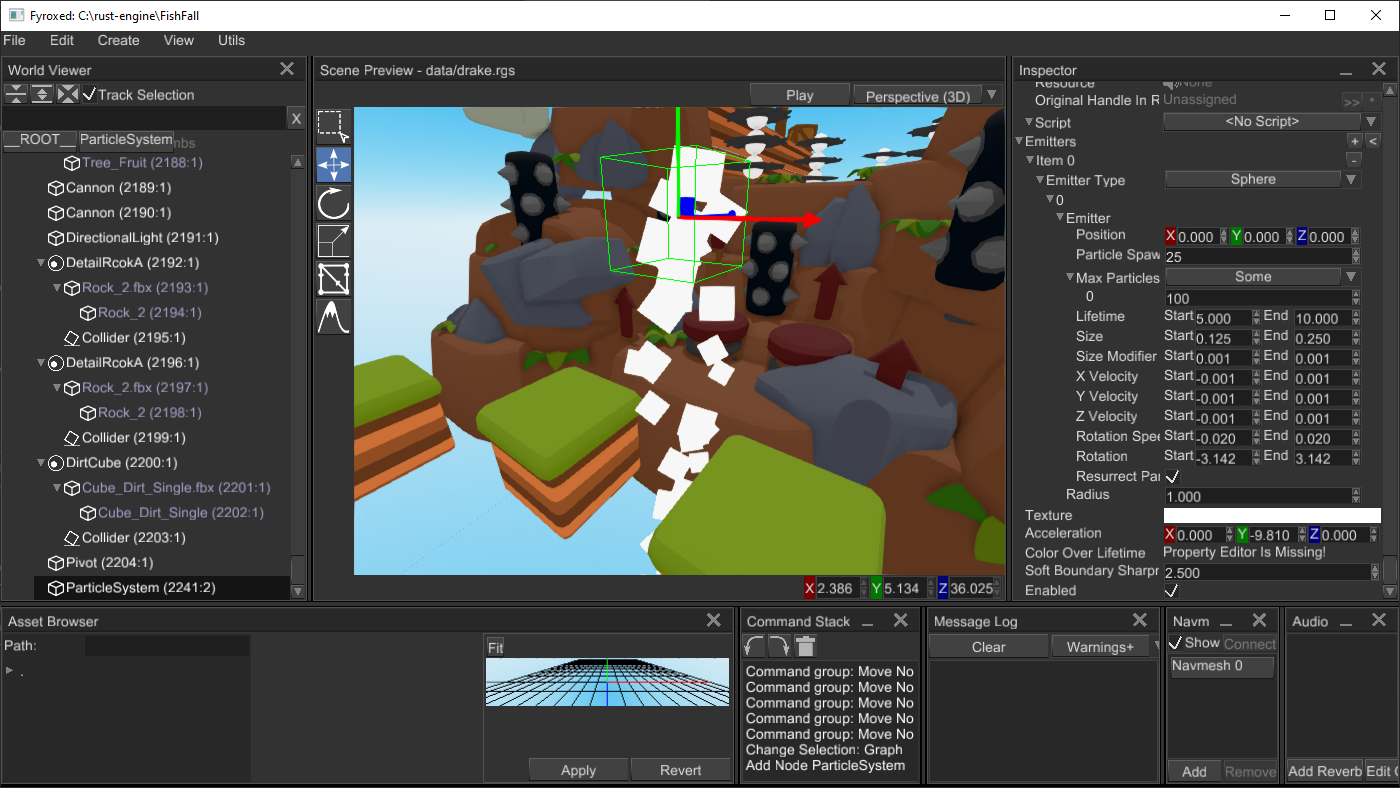

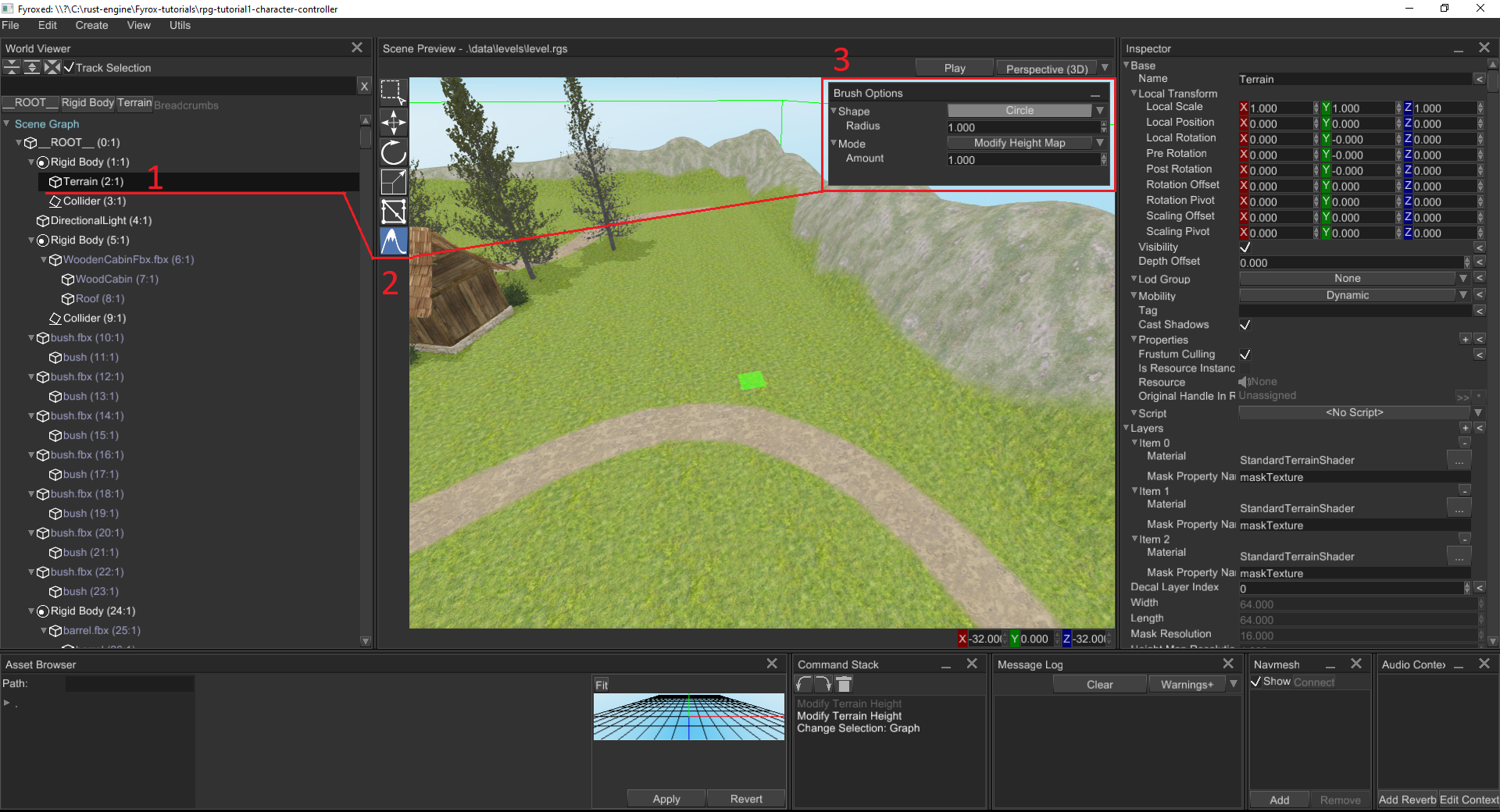

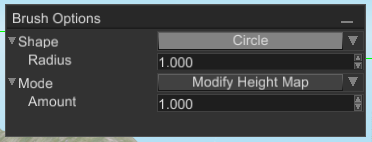

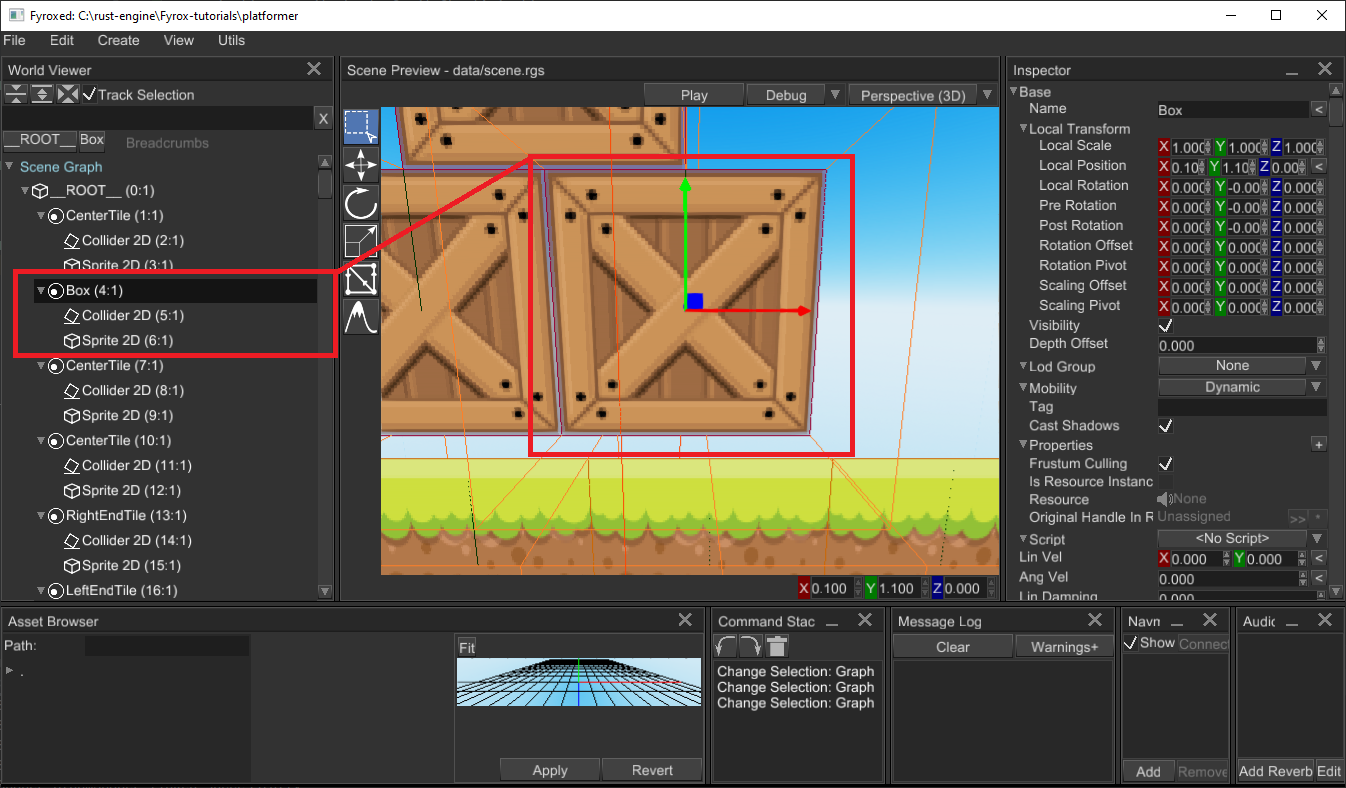

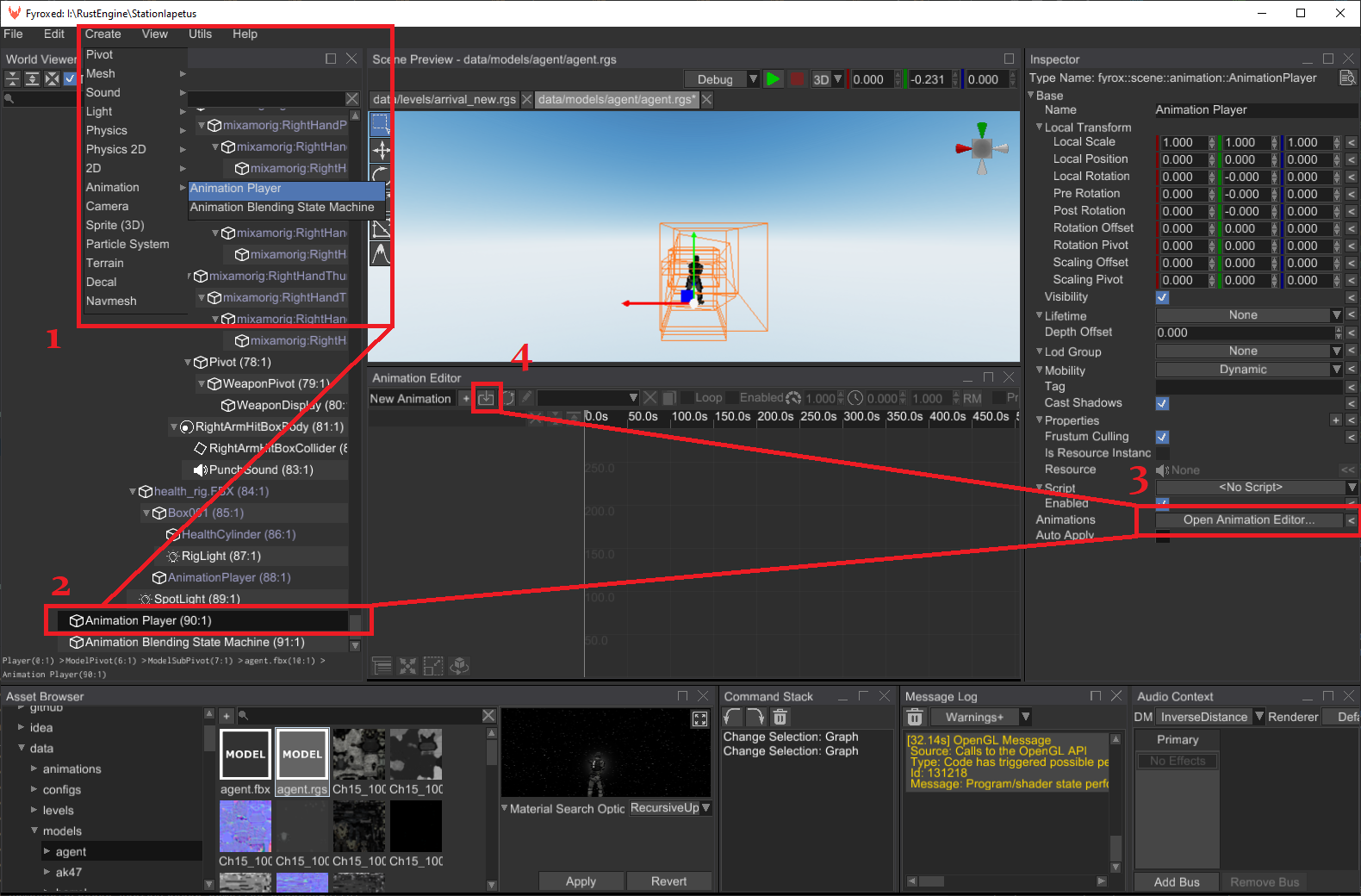

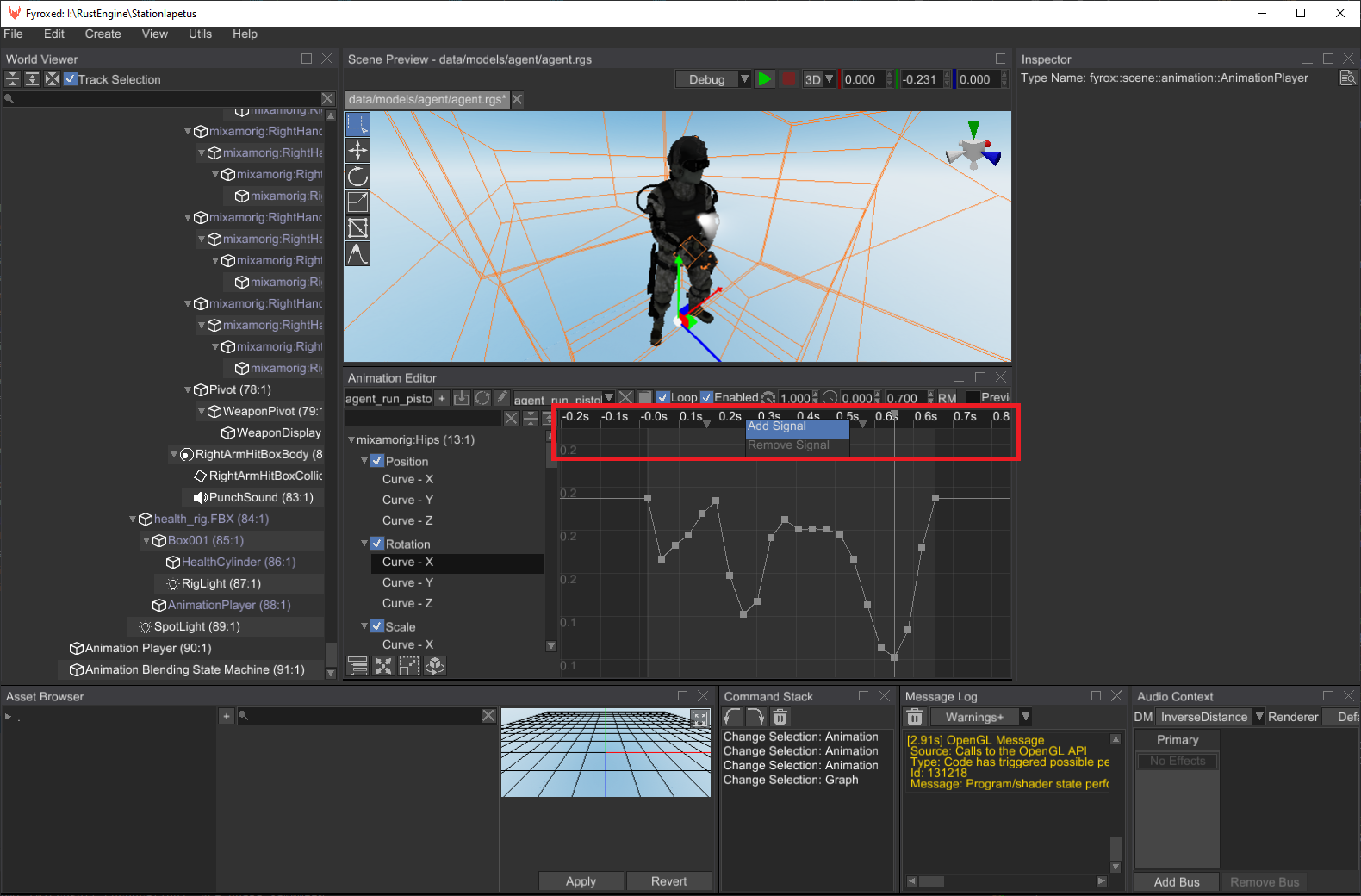

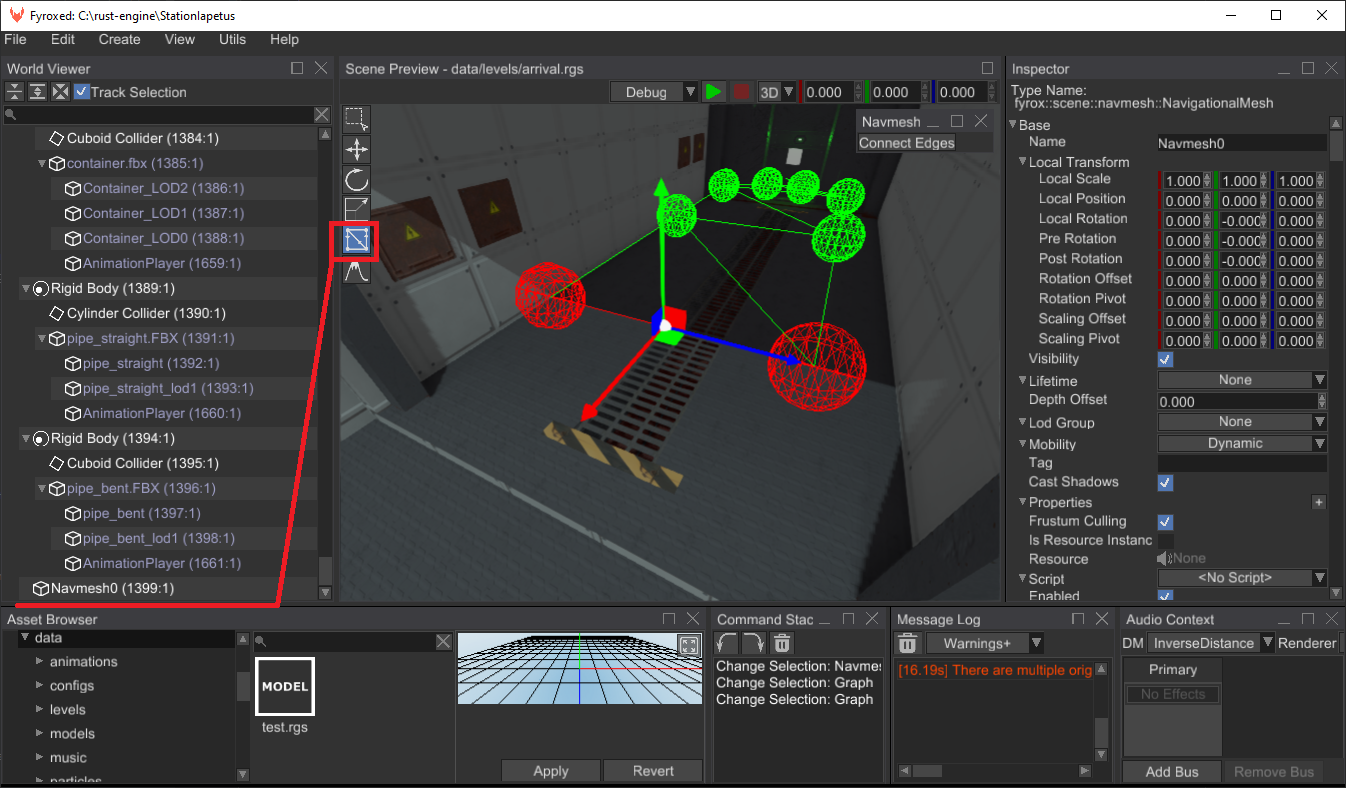

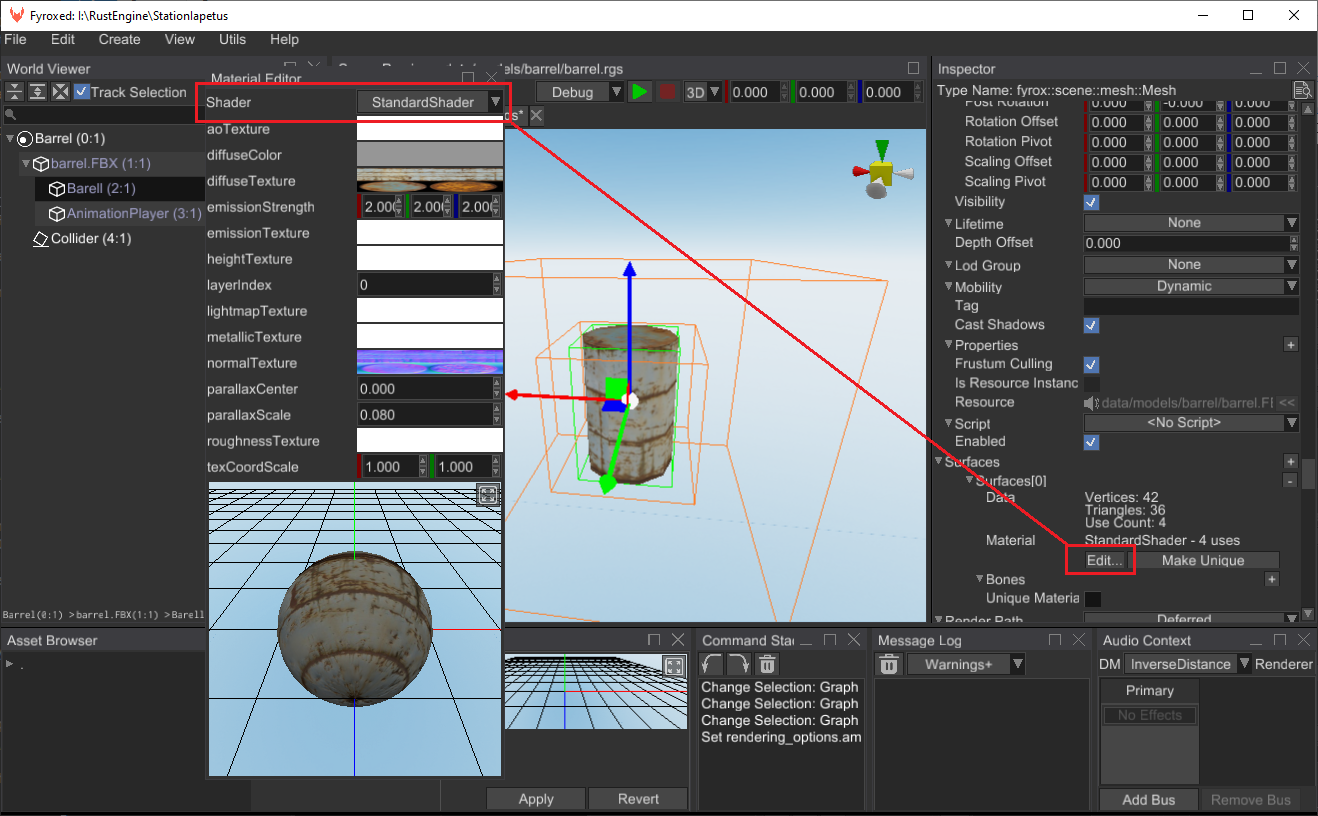

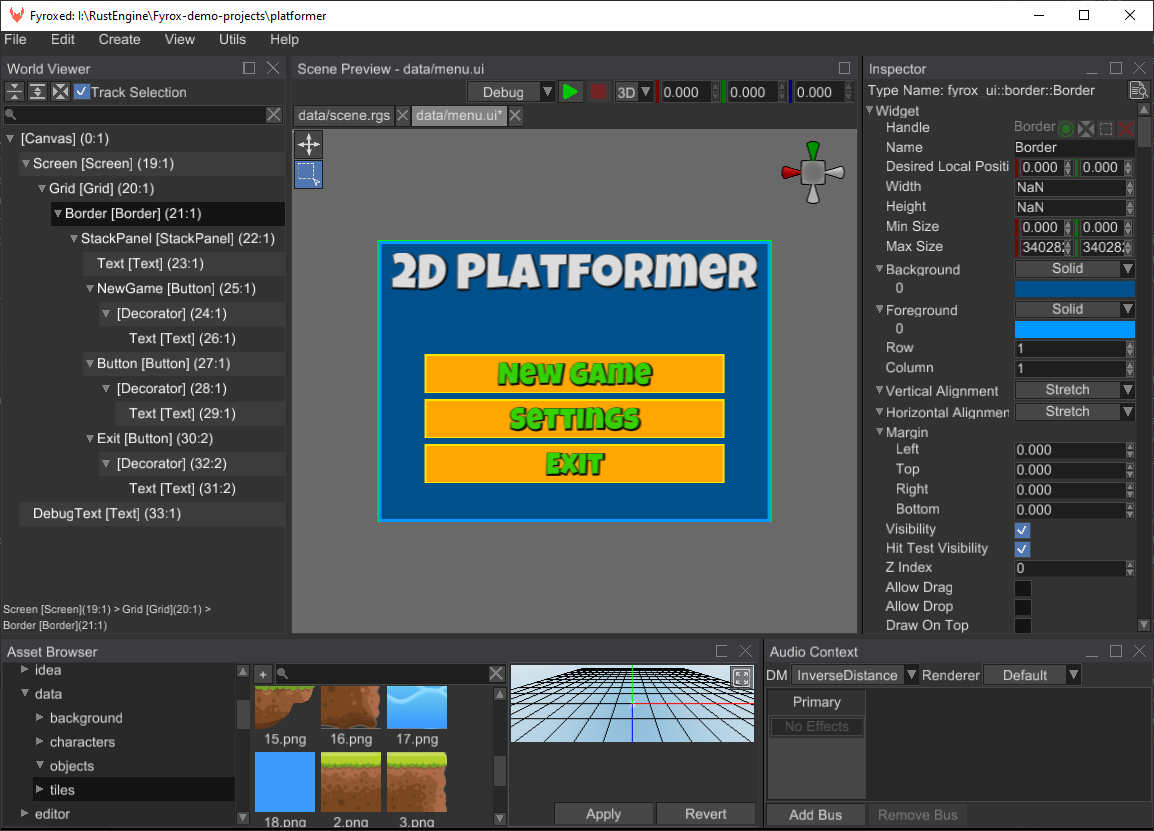

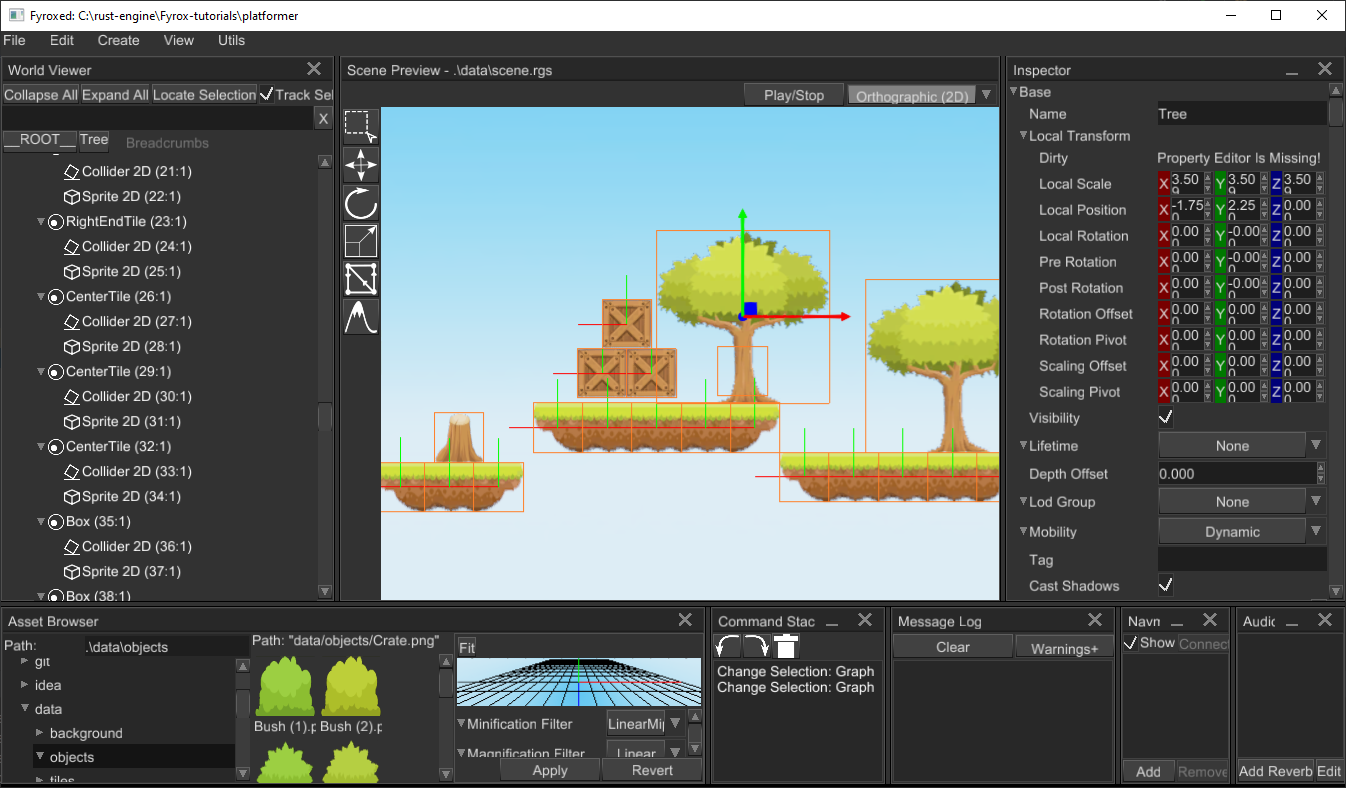

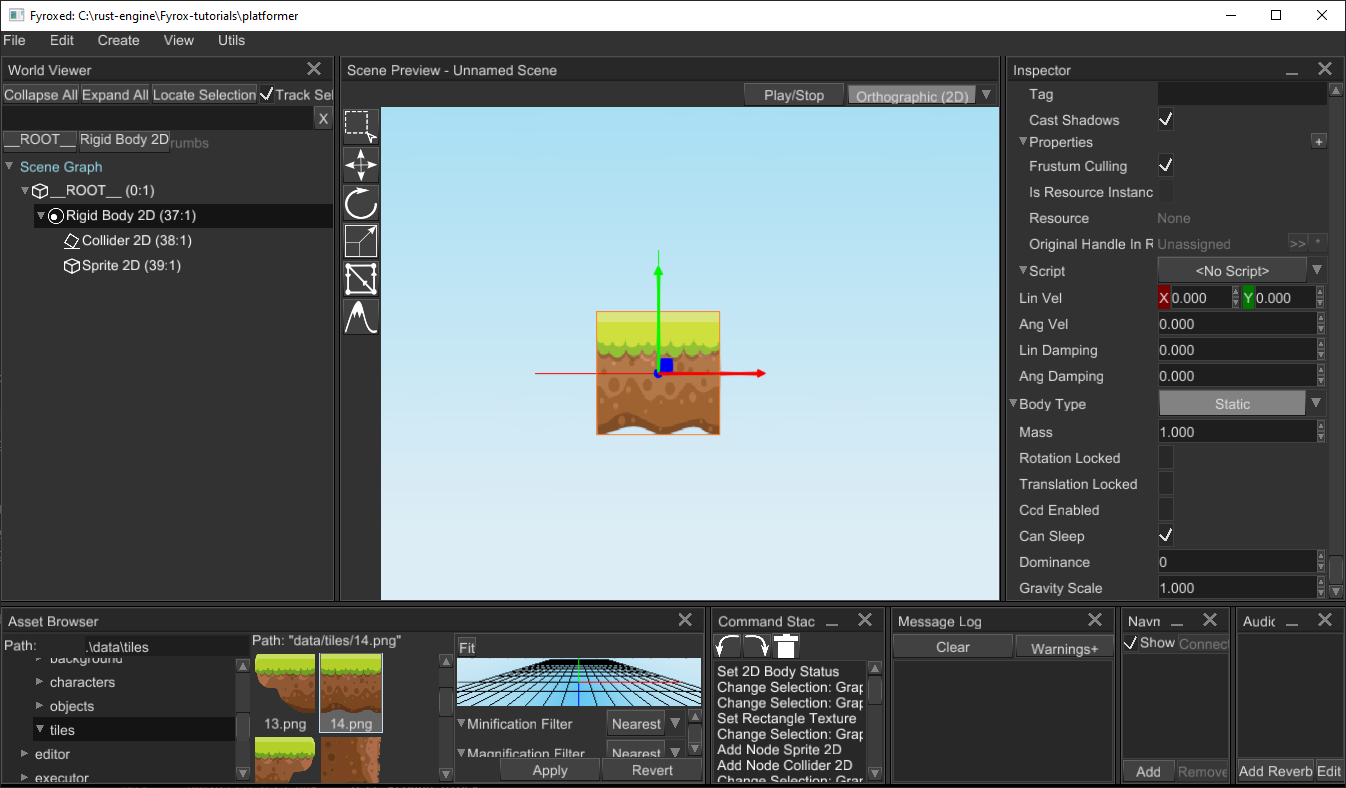

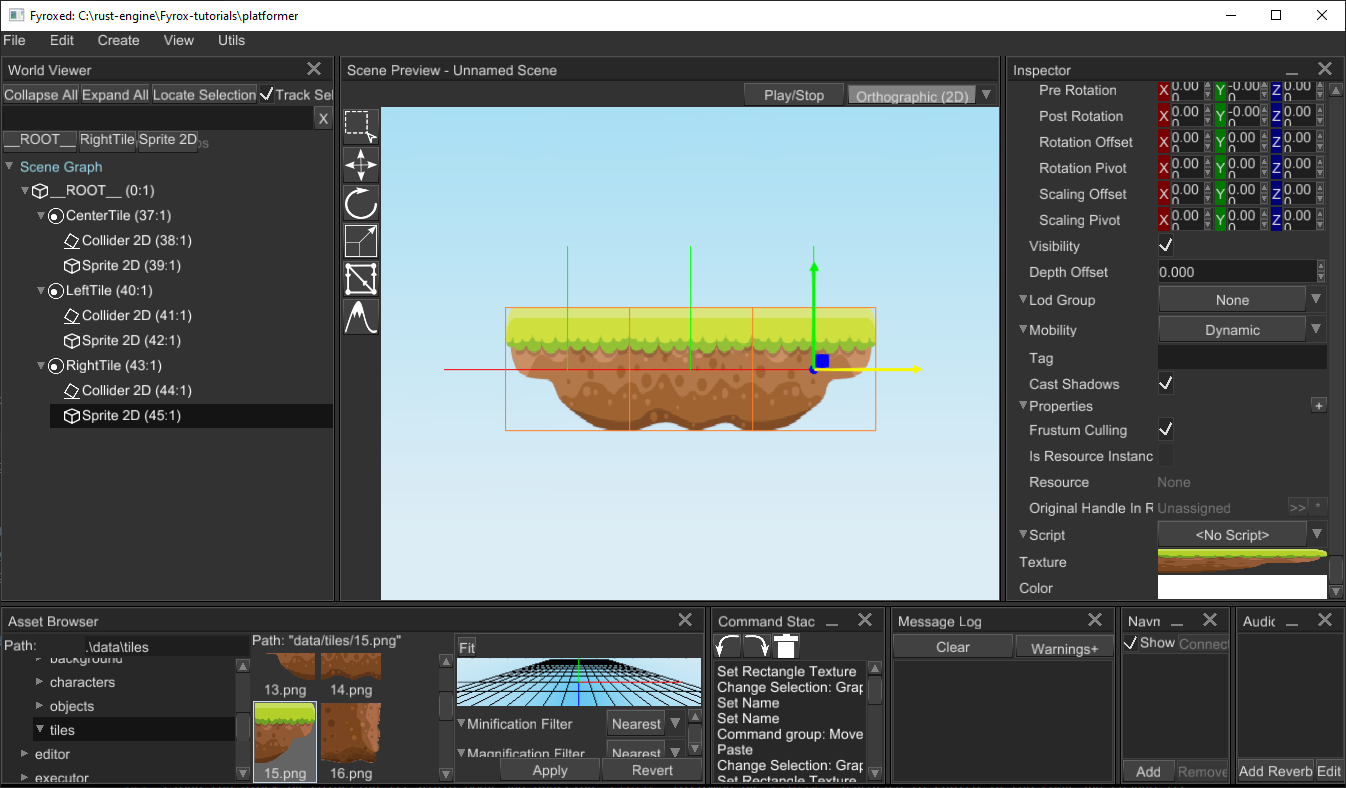

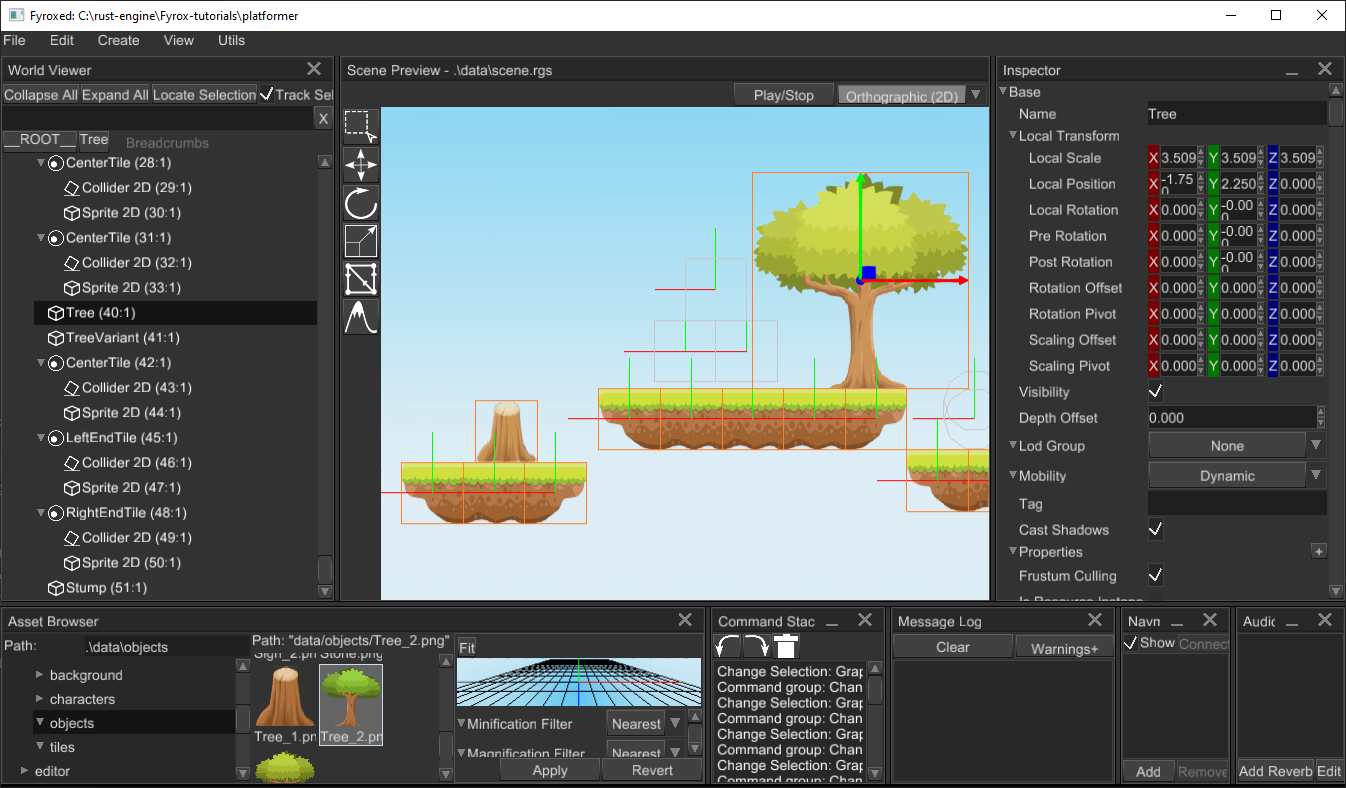

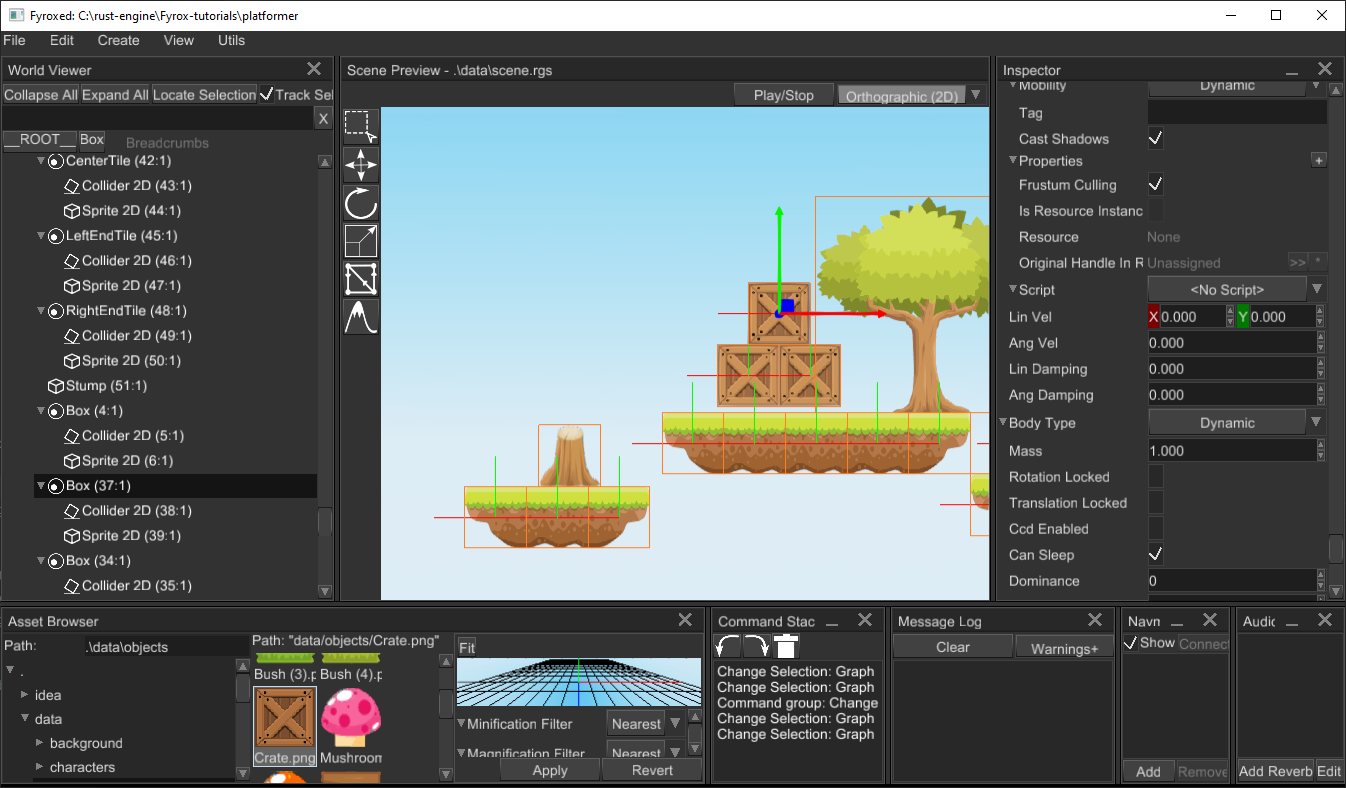

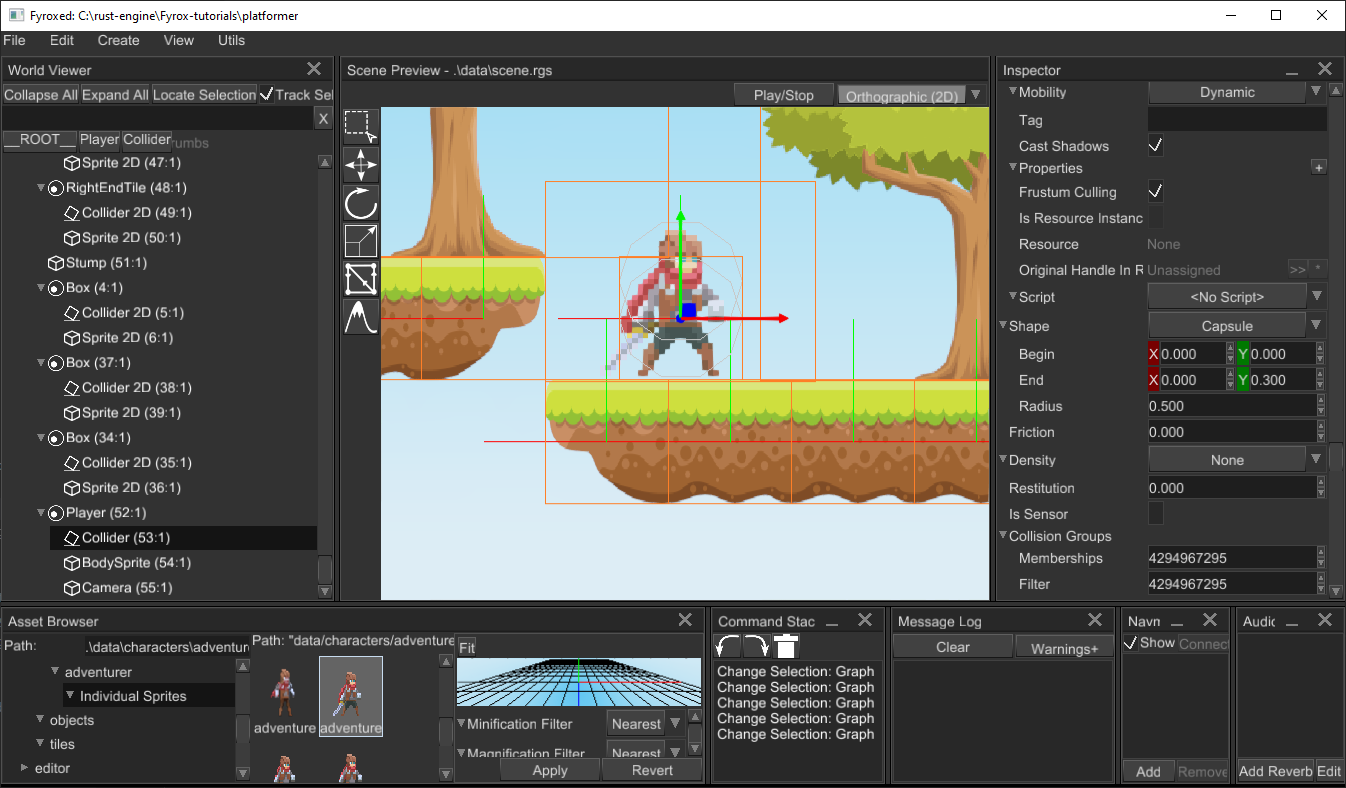

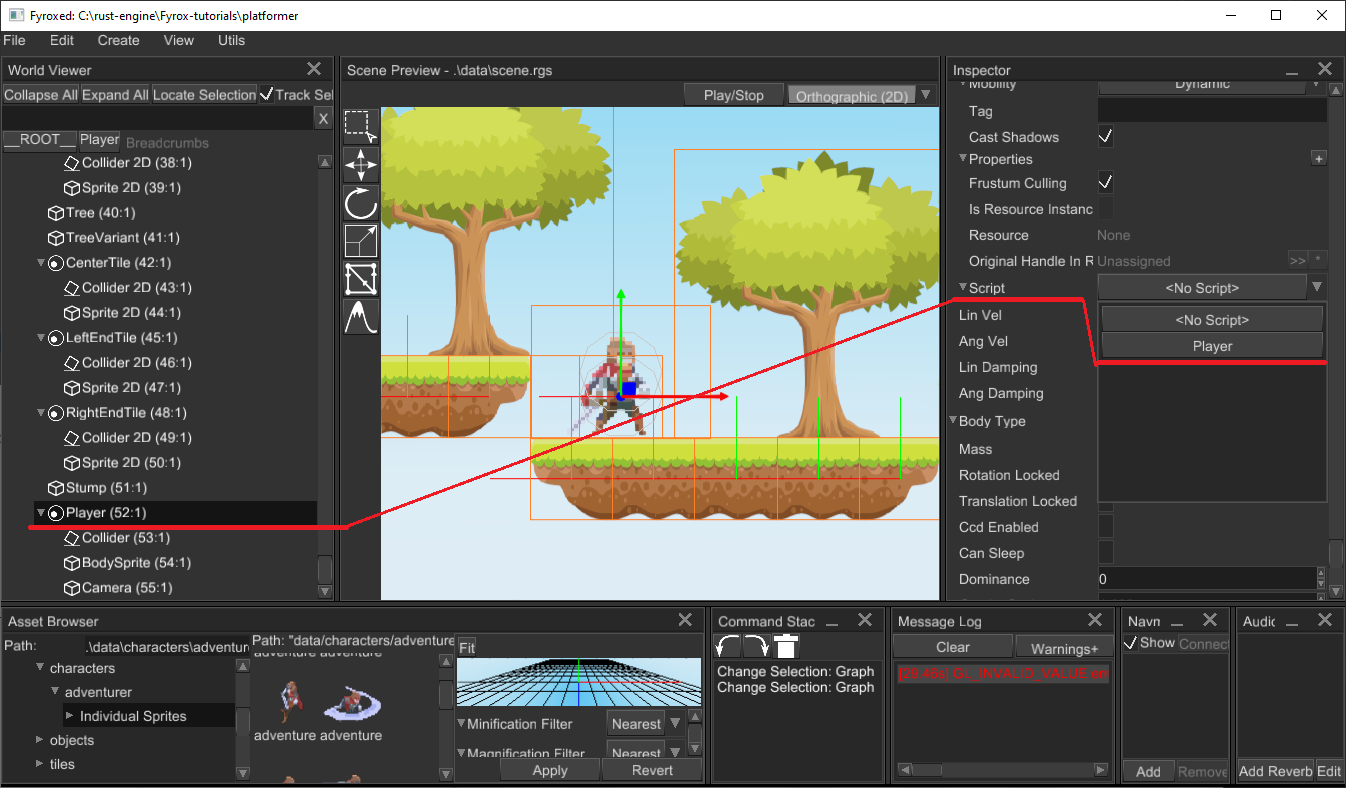

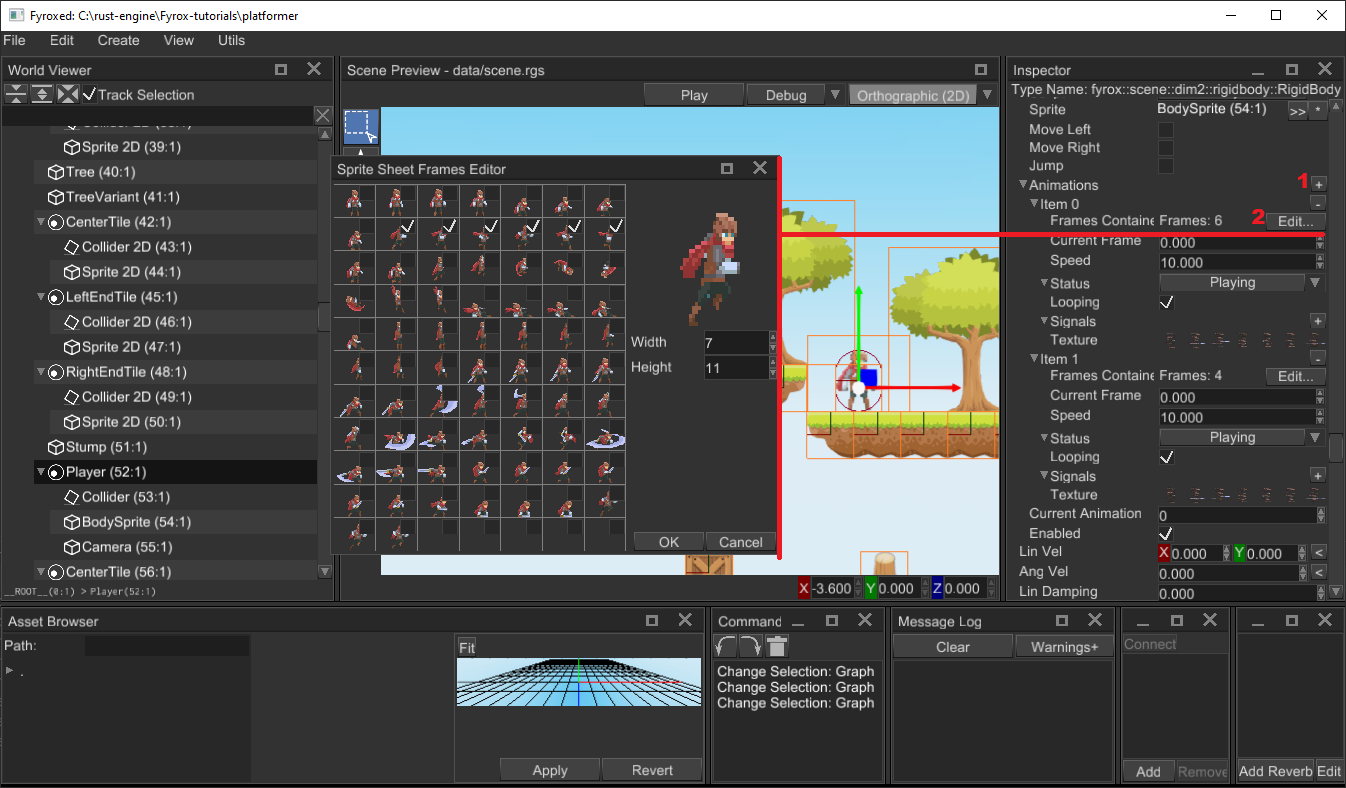

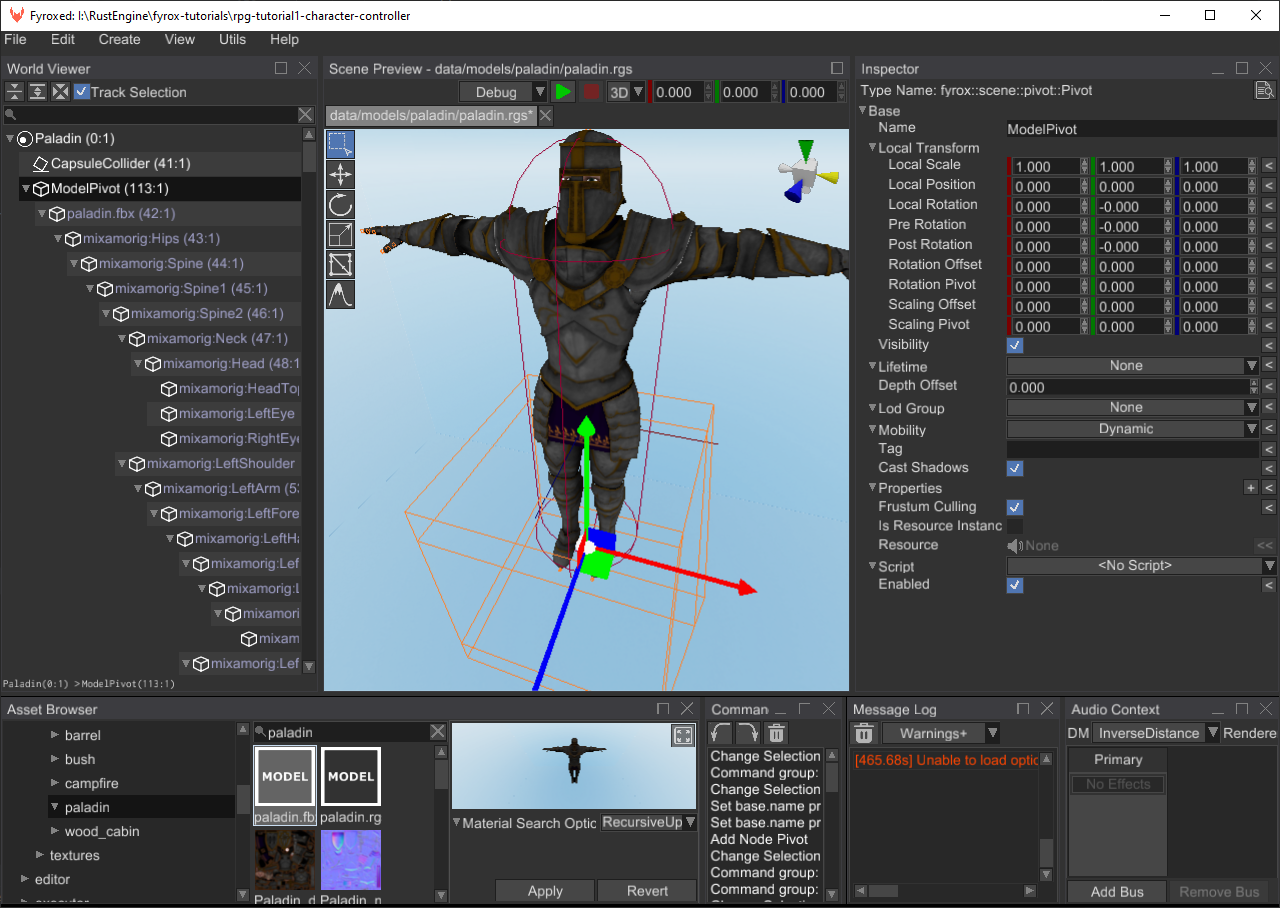

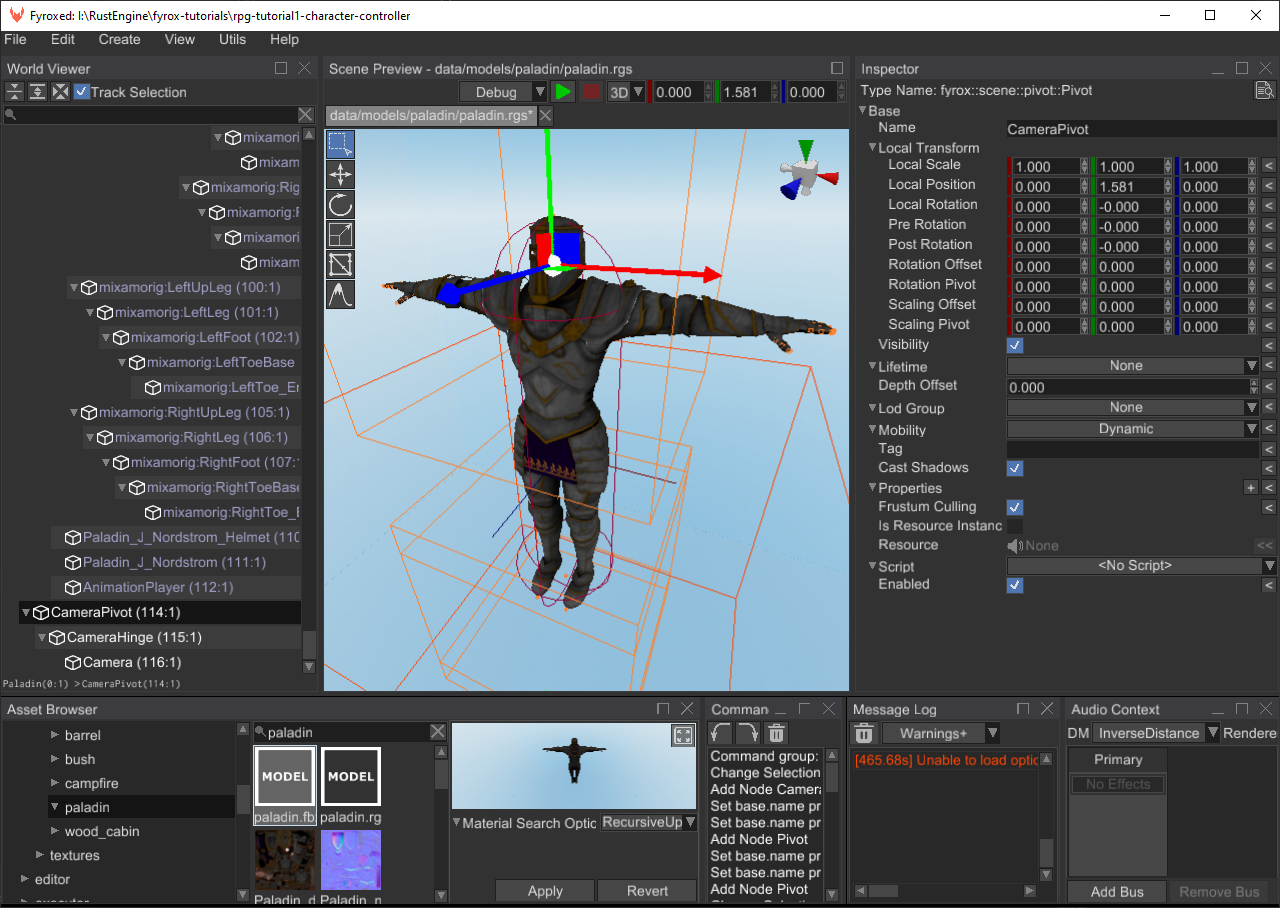

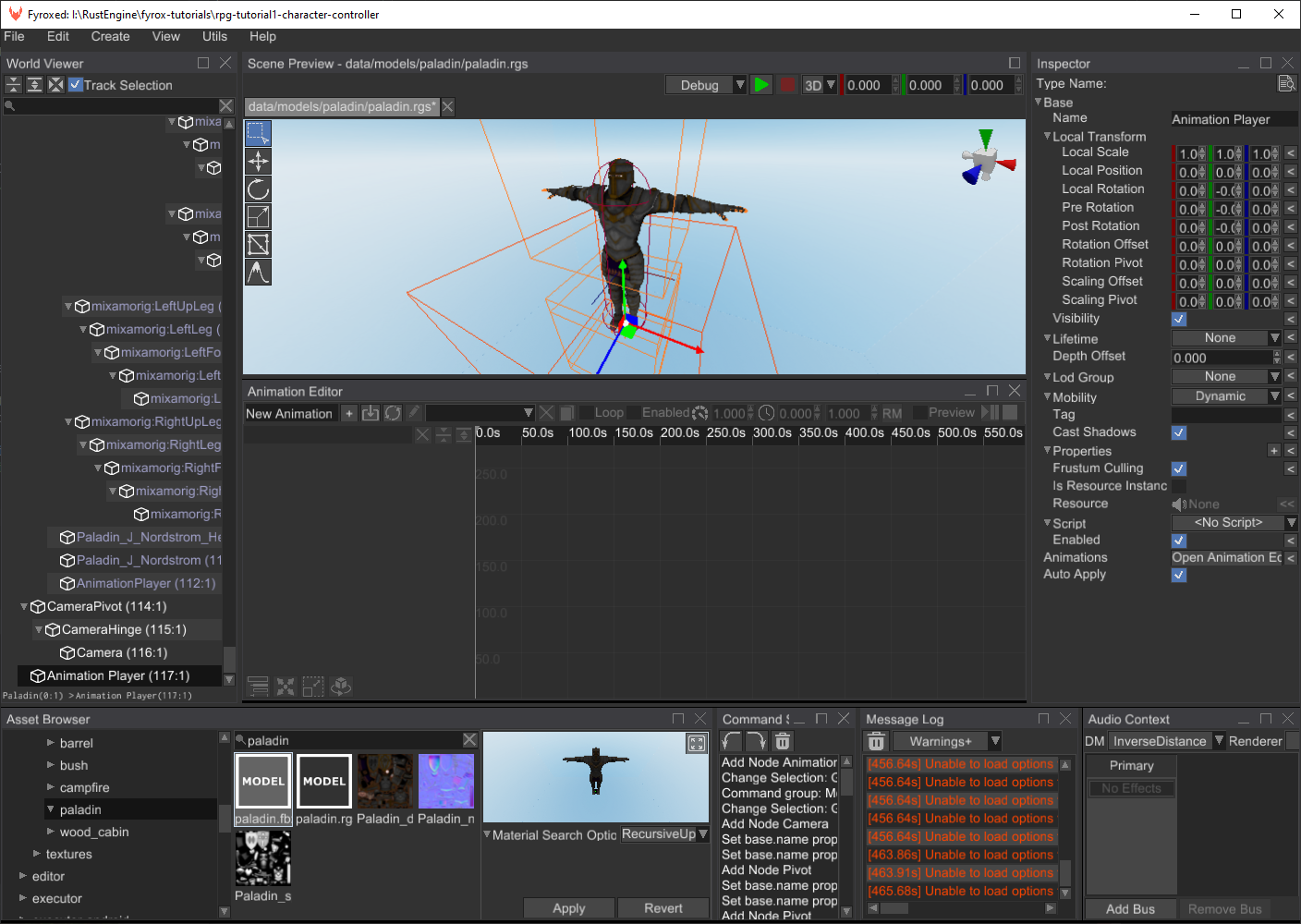

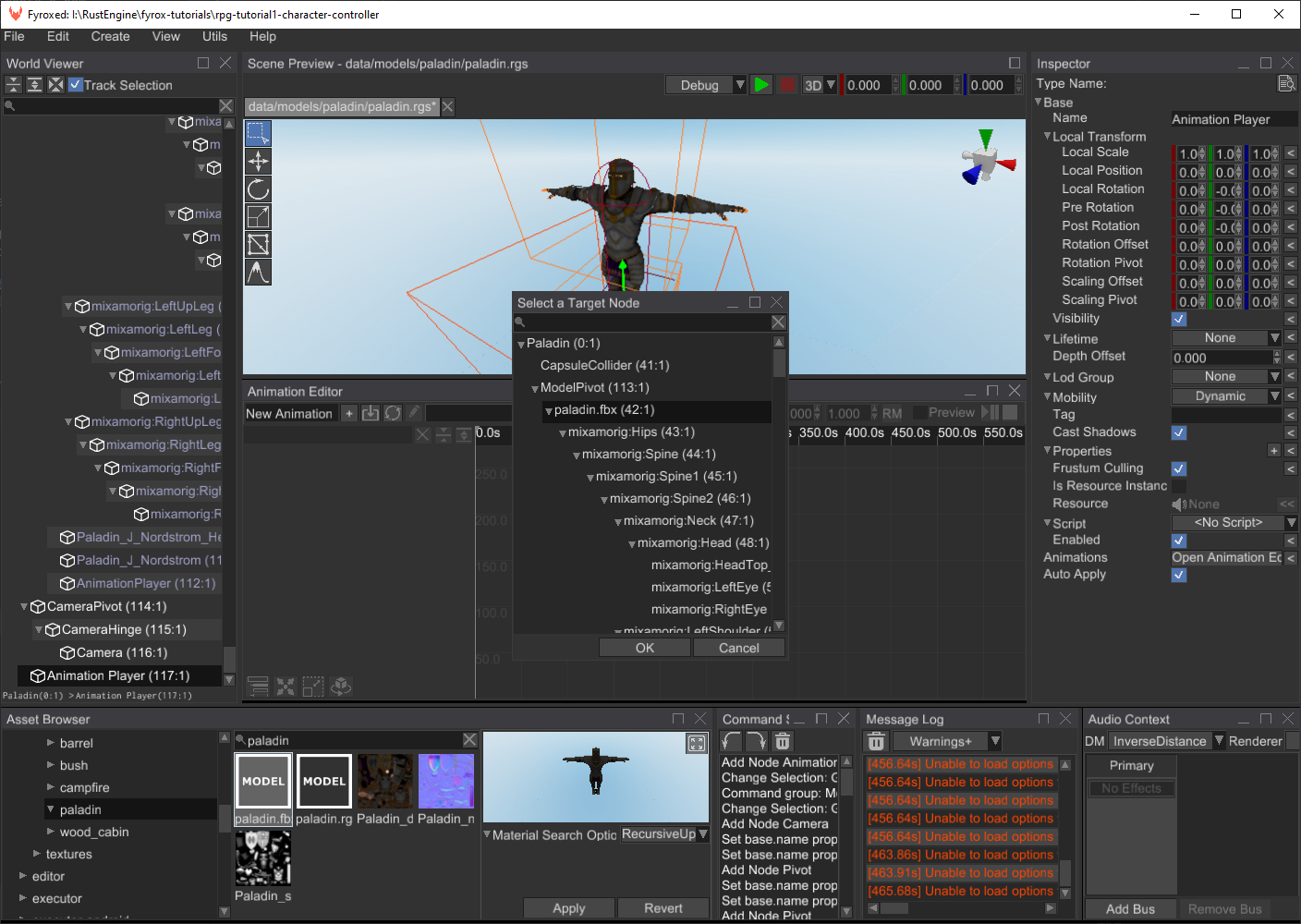

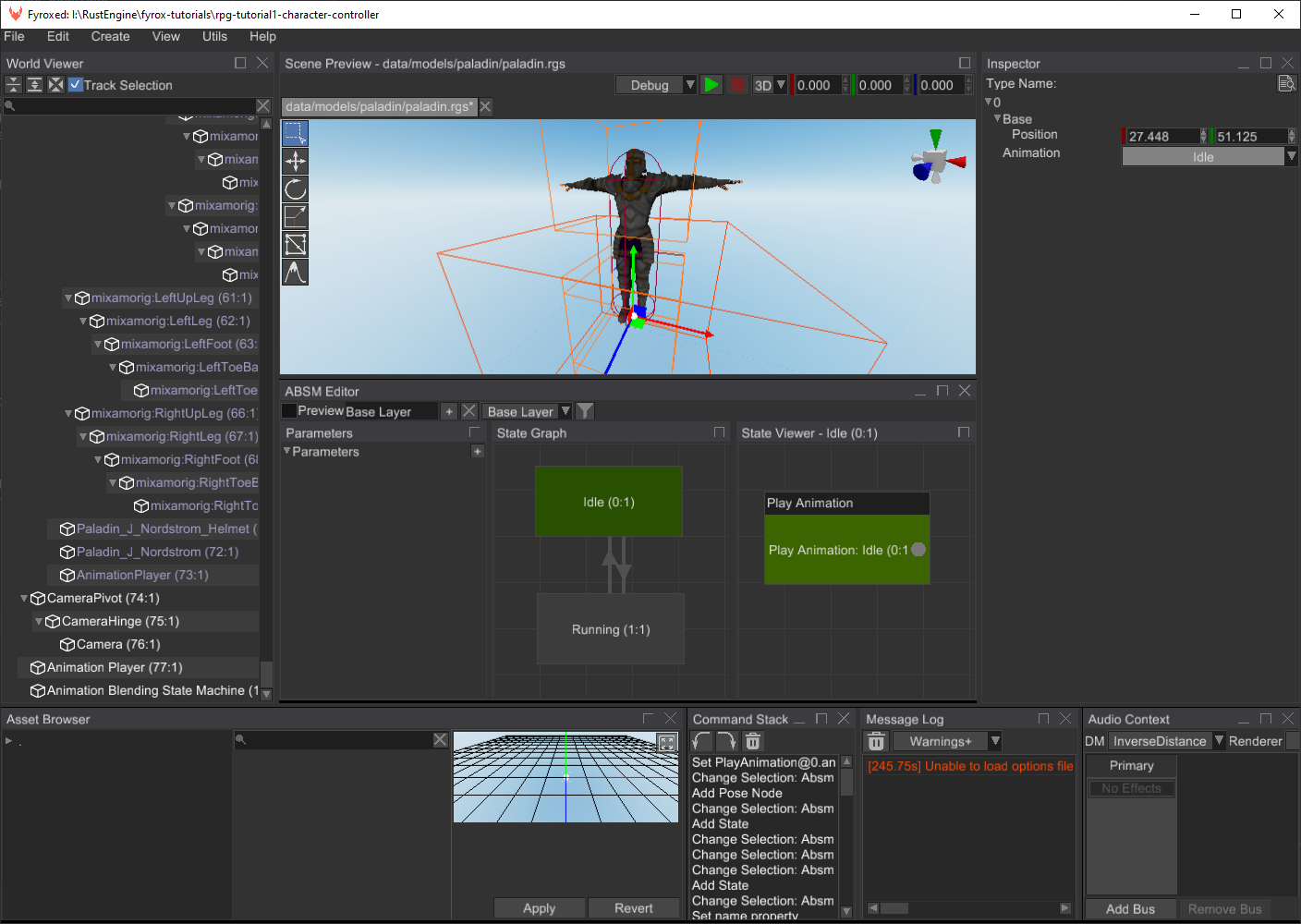

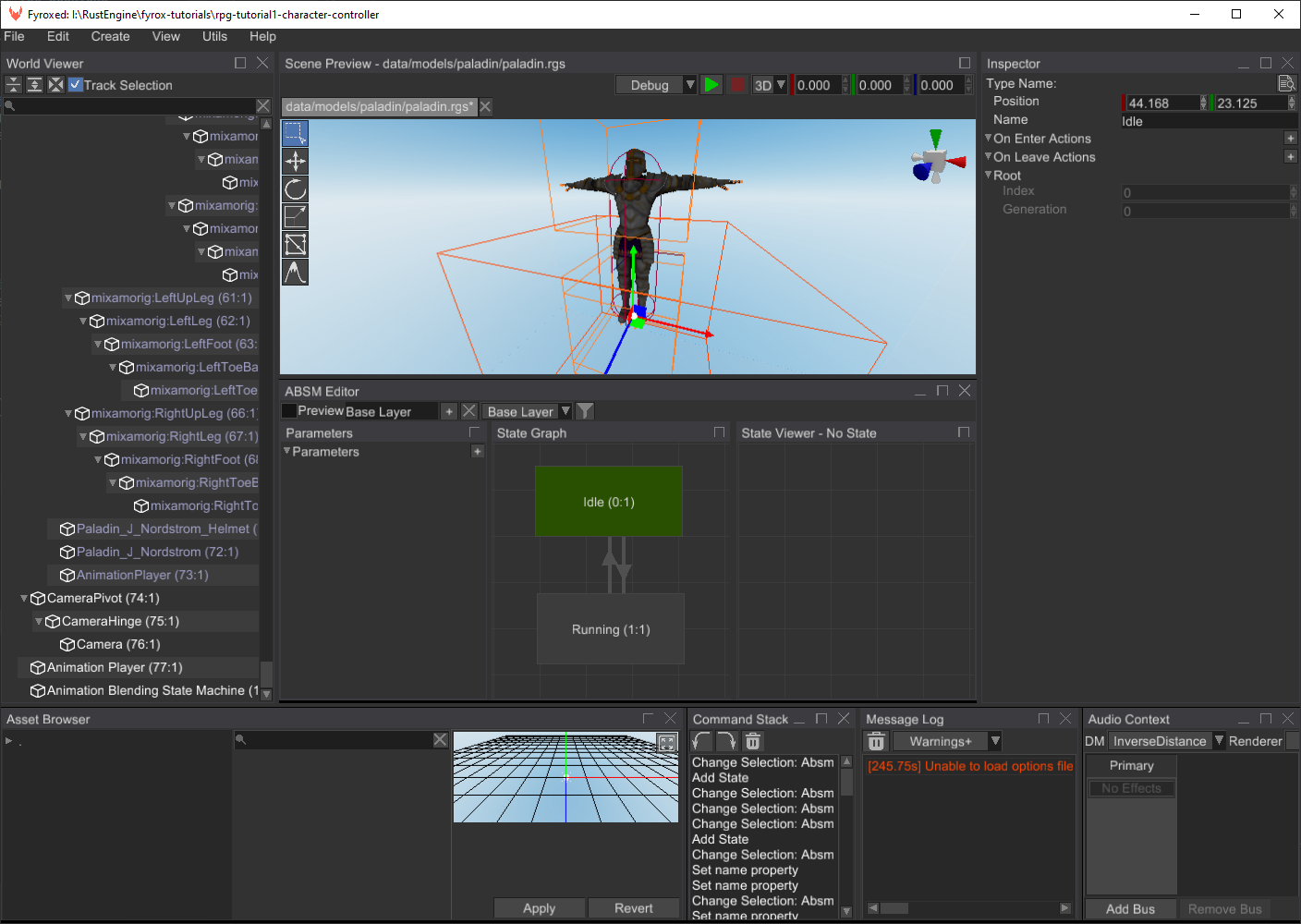

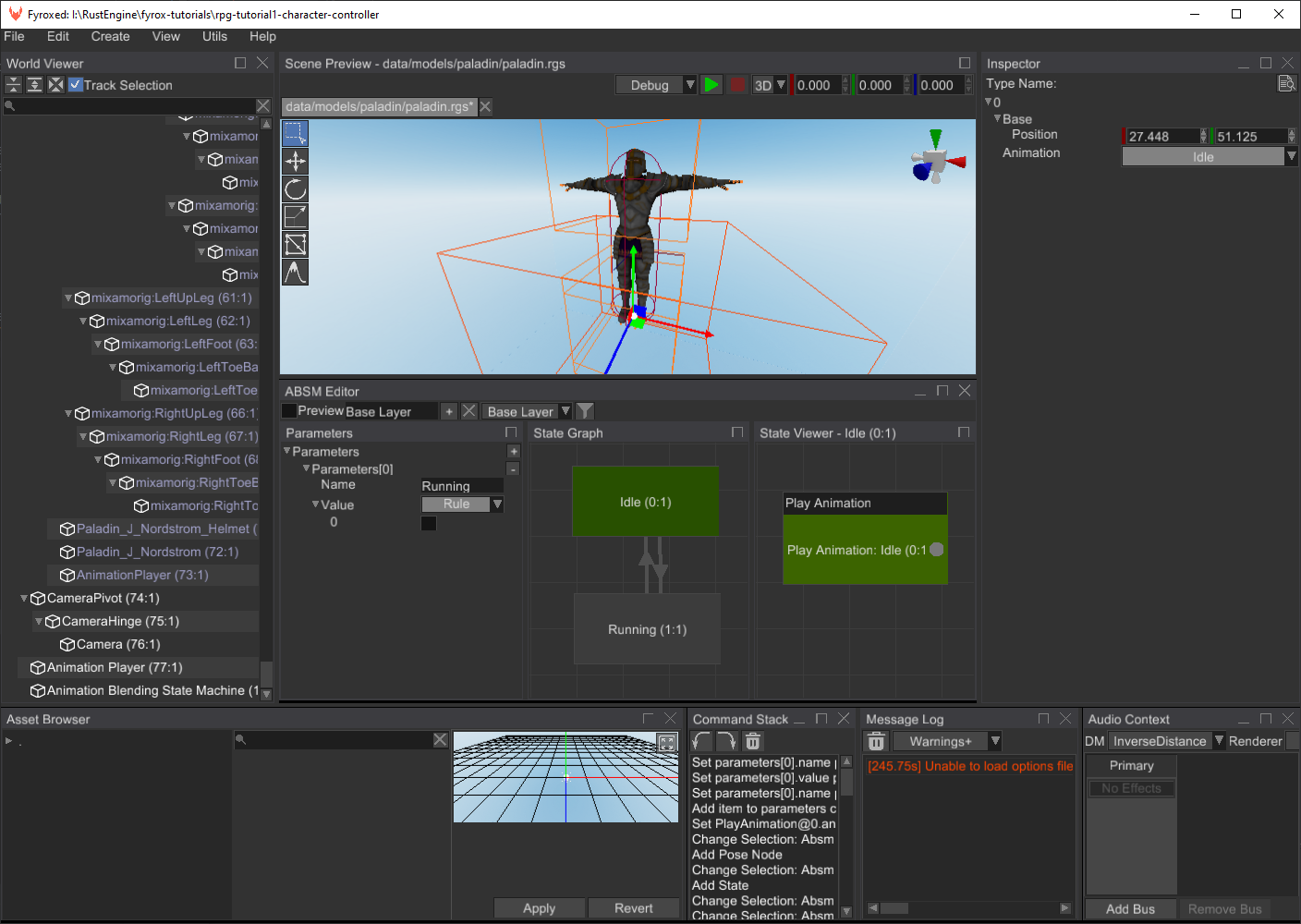

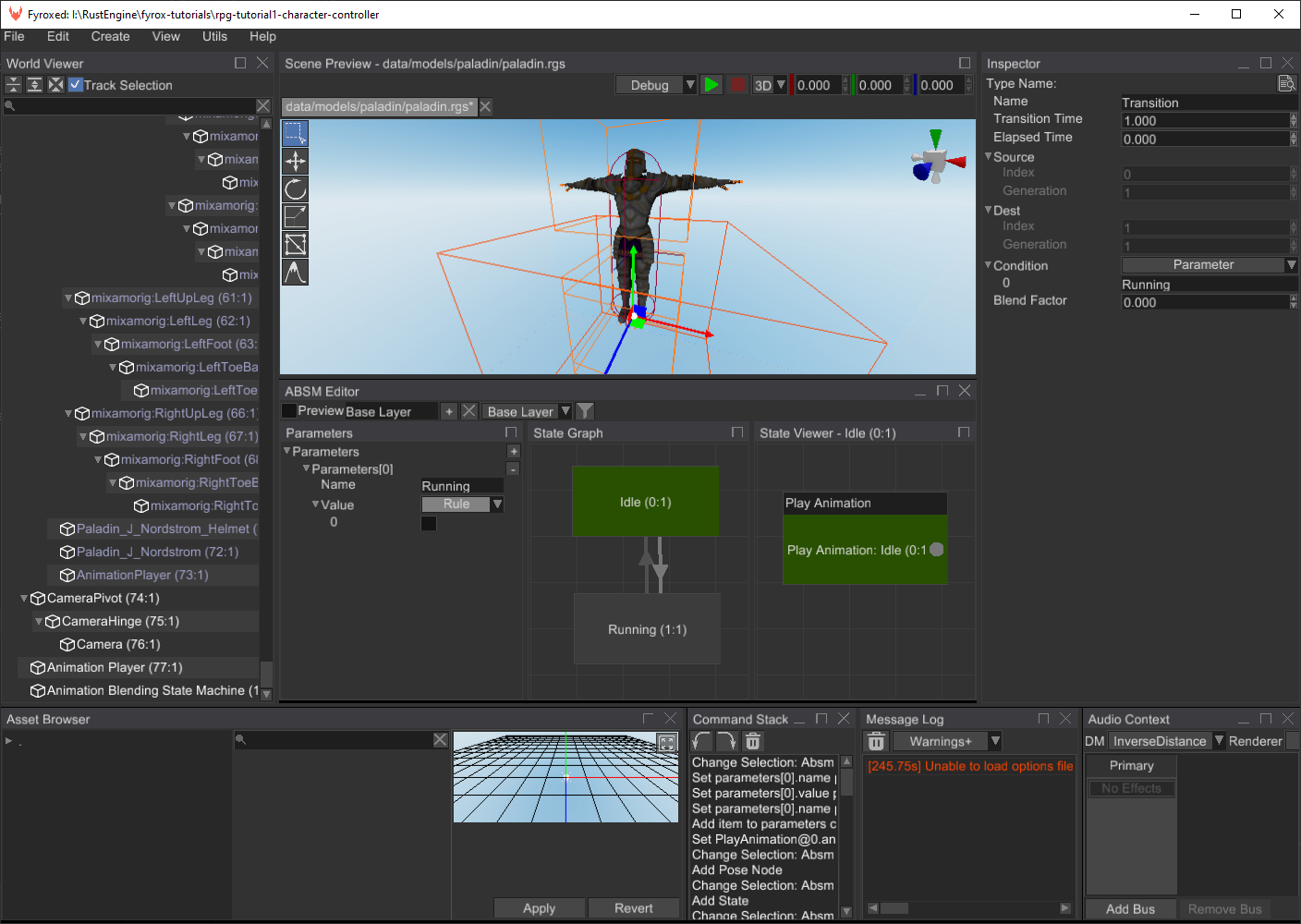

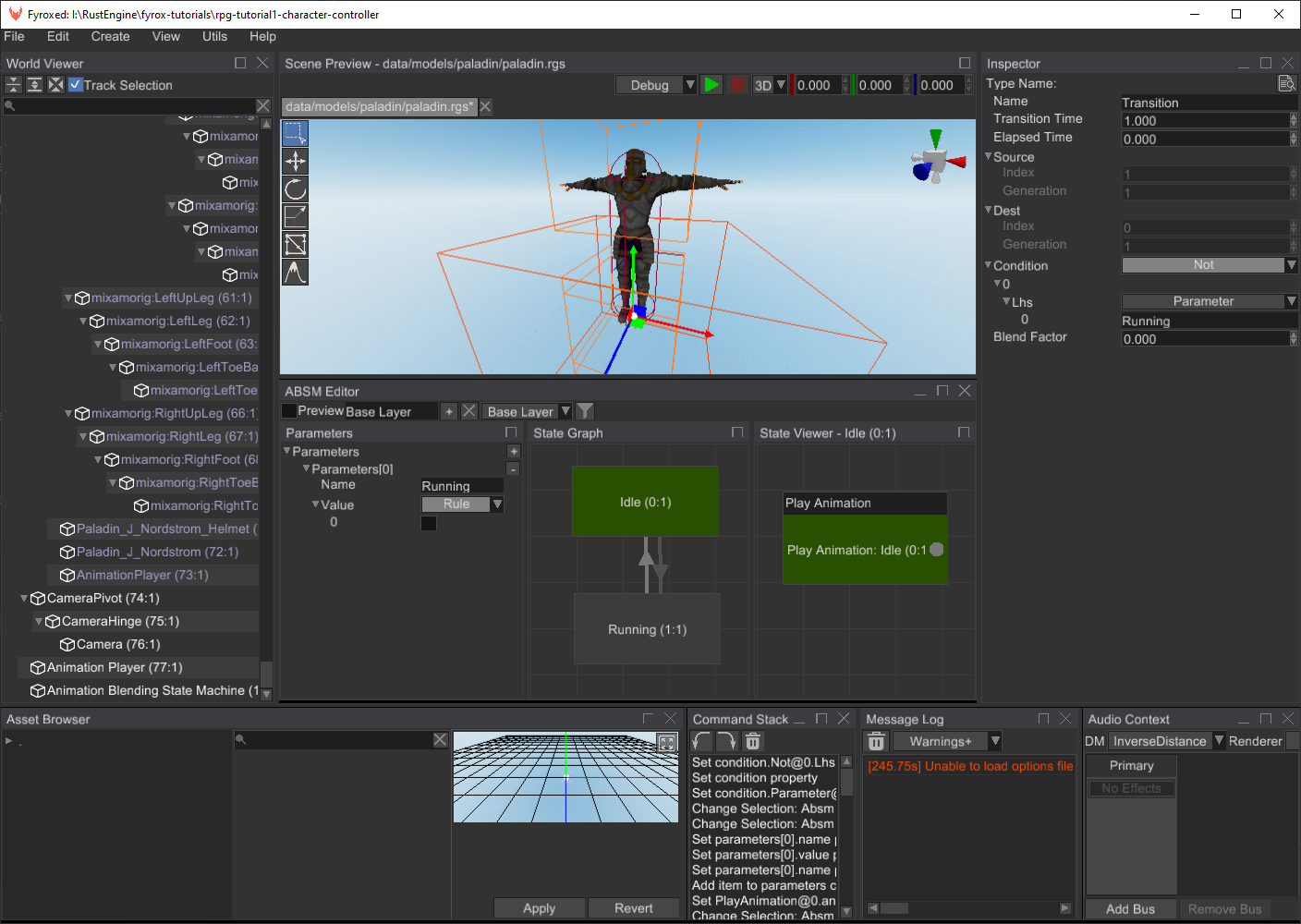

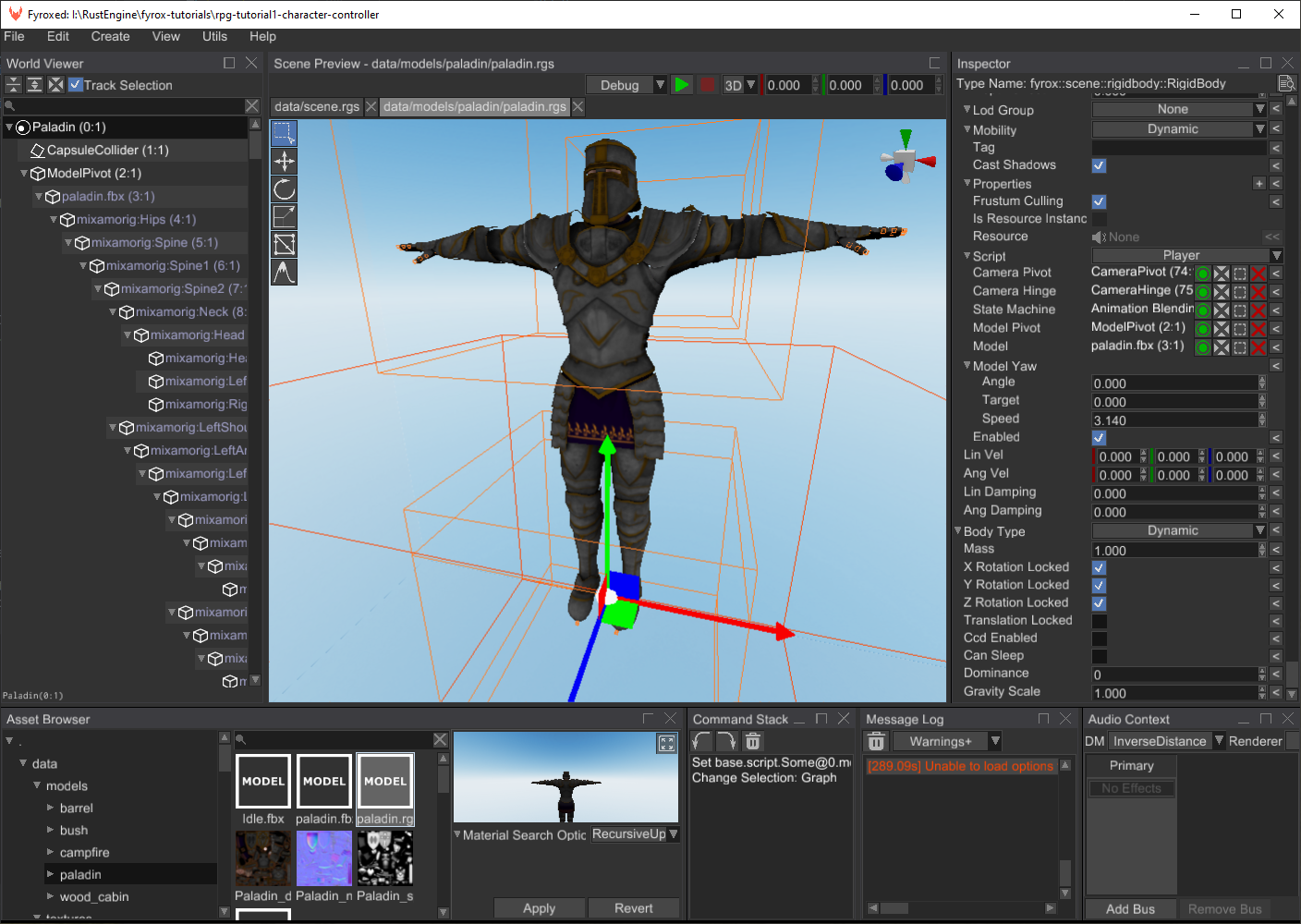

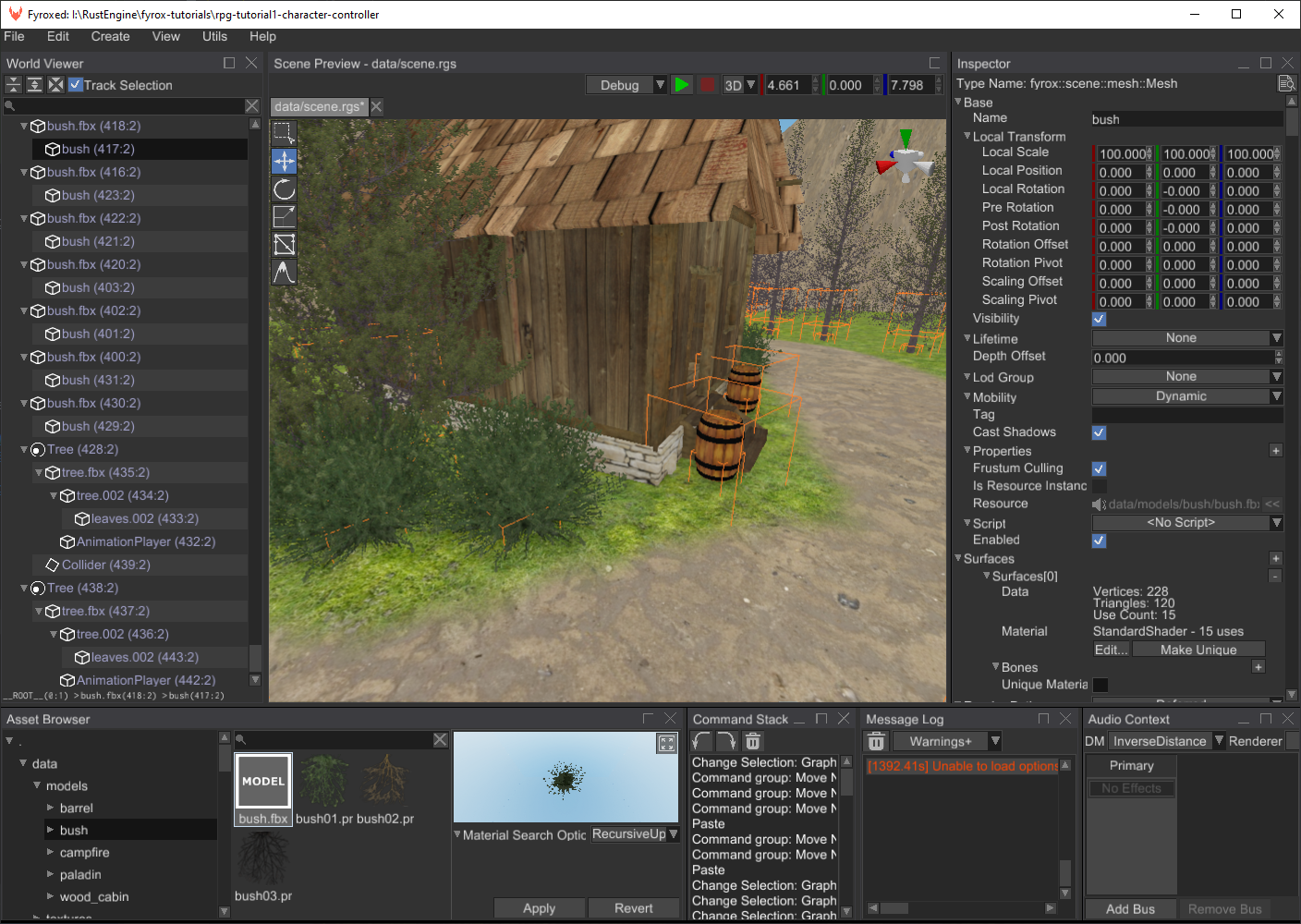

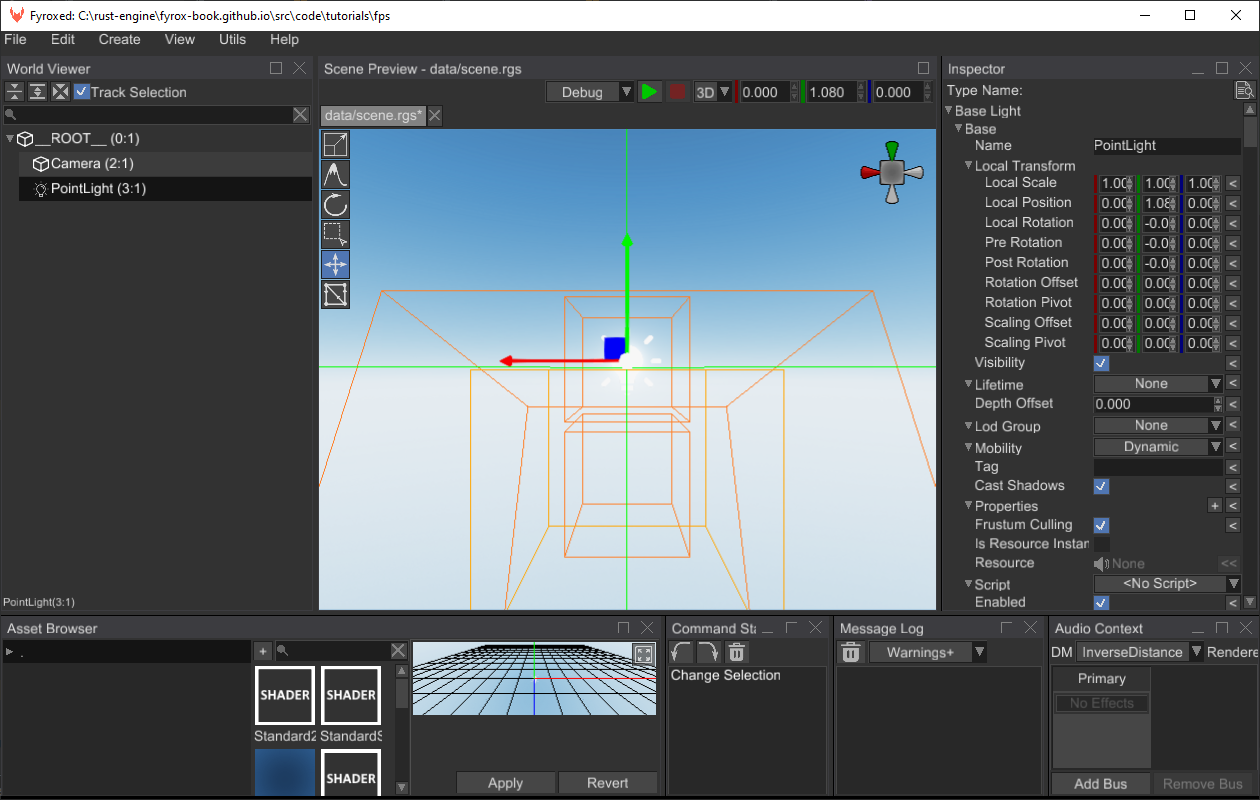

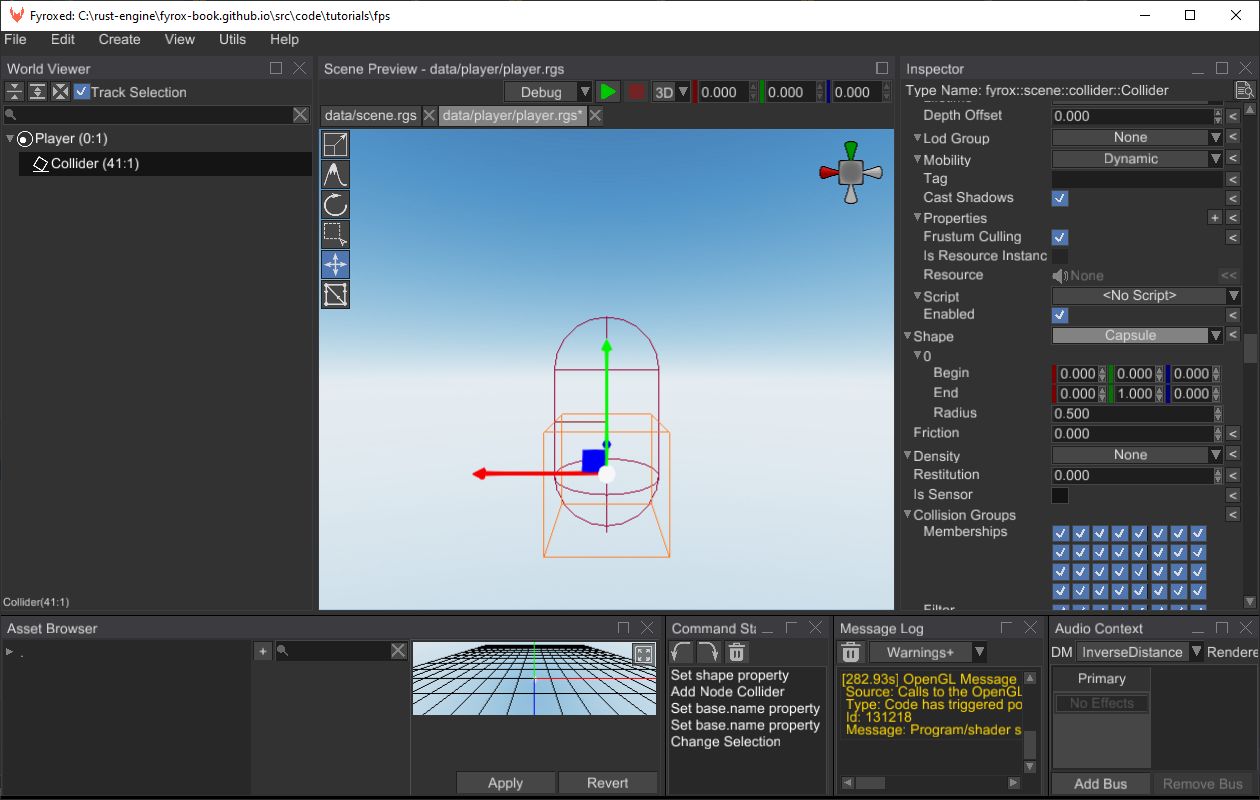

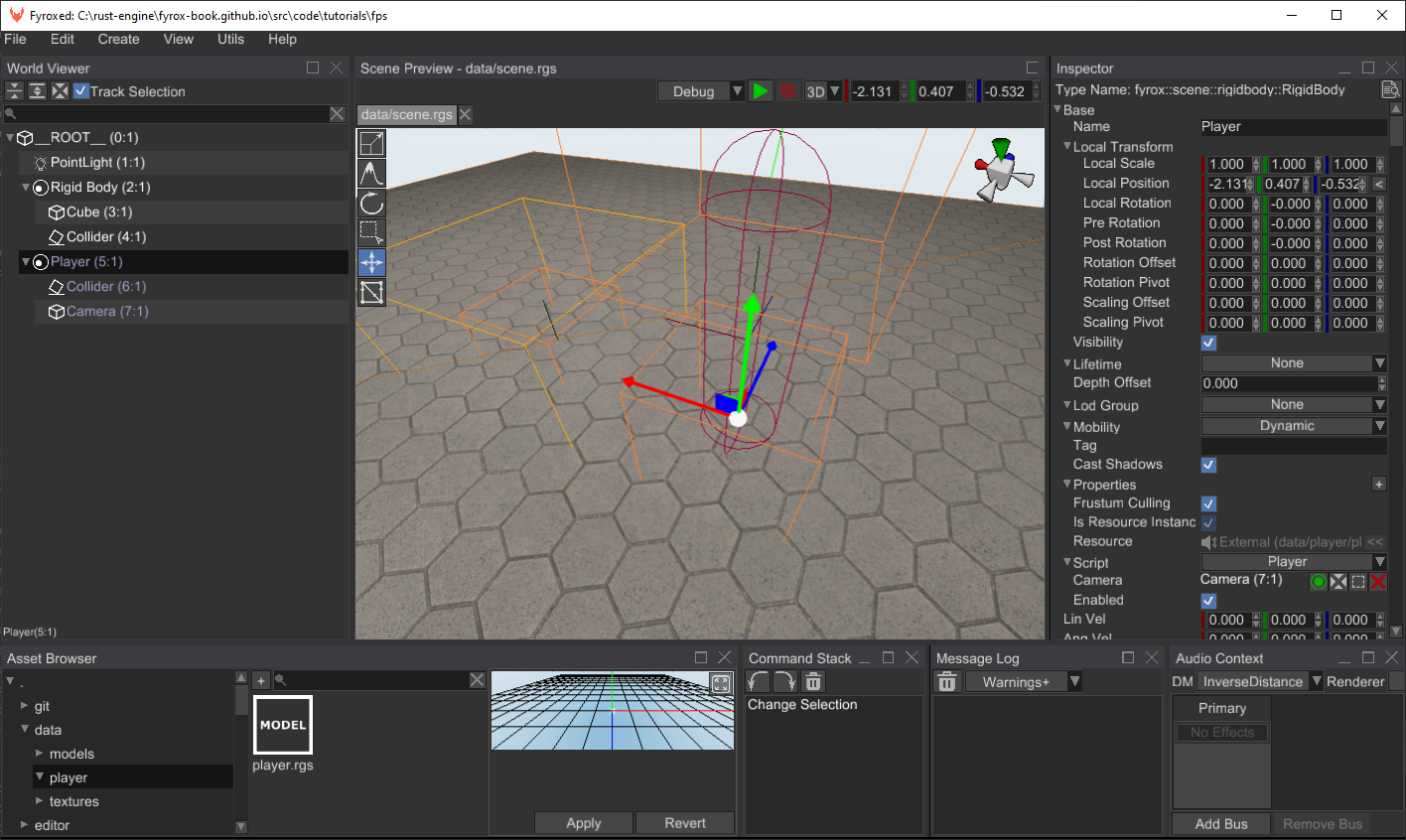

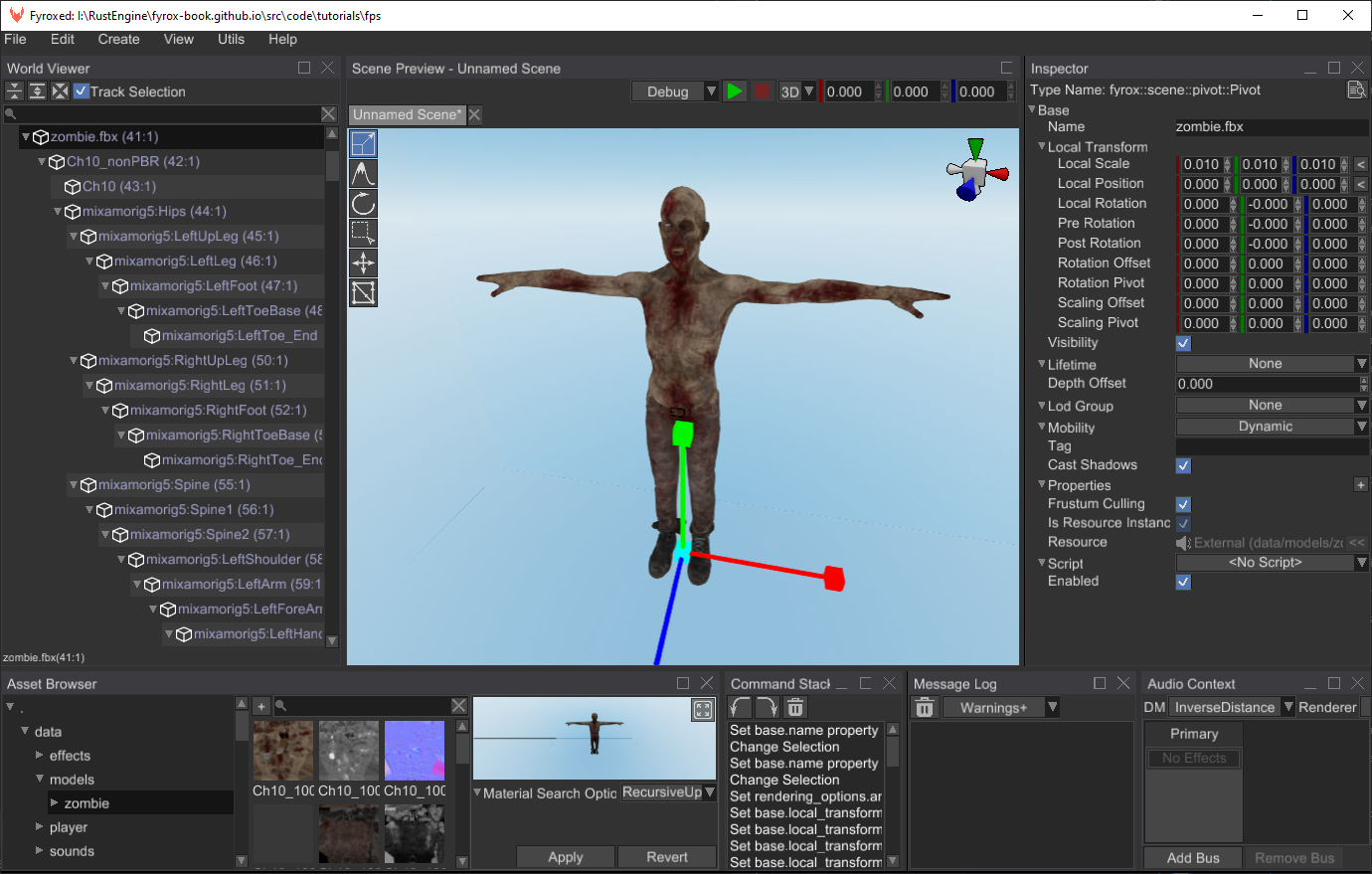

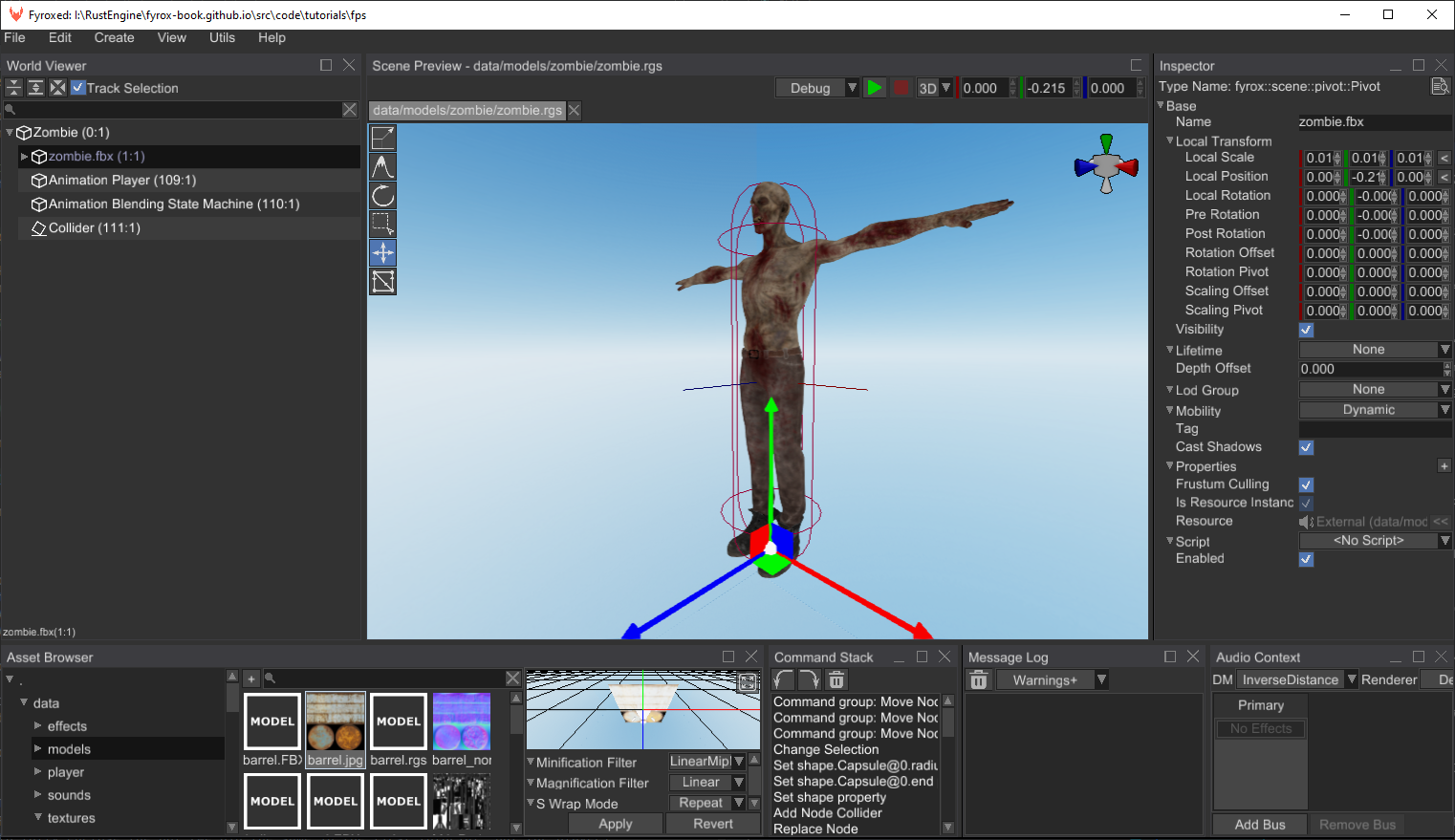

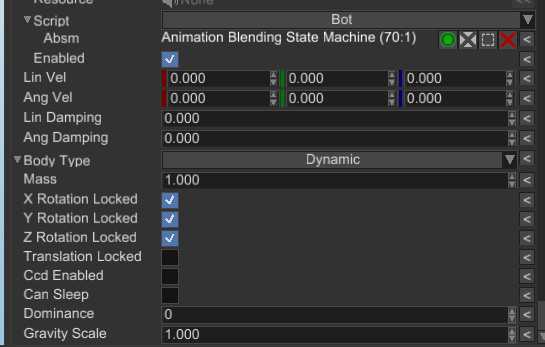

When you open the editor for the first time, you may be confused by the number of windows, buttons, lists, etc. you'll be presented with. Each window serves a different purpose, but all of them work together to help you make your game. Let's take a look at a screenshot of the editor and learn what each part of it is responsible for (please note that this can change over time, because development is quite fast and images can easily become outdated):

- World viewer - shows every object in the scene and their relationships. Allows inspecting and editing the contents of the scene in a hierarchical form.

- Scene preview - renders the scene with debug info and various editor-specific objects (gizmos, entity icons, etc.). Allows you to select, move, rotate, scale, delete, etc. various entities. The Toolbar on its left side shows available context-dependent tools.

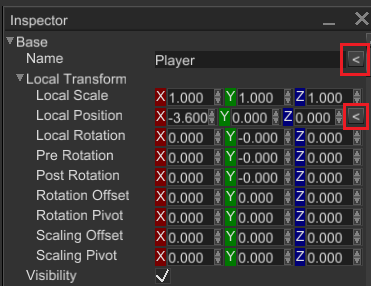

- Inspector - allows you to modify various properties of the selected object.

- Message Log - displays important messages from the editor.

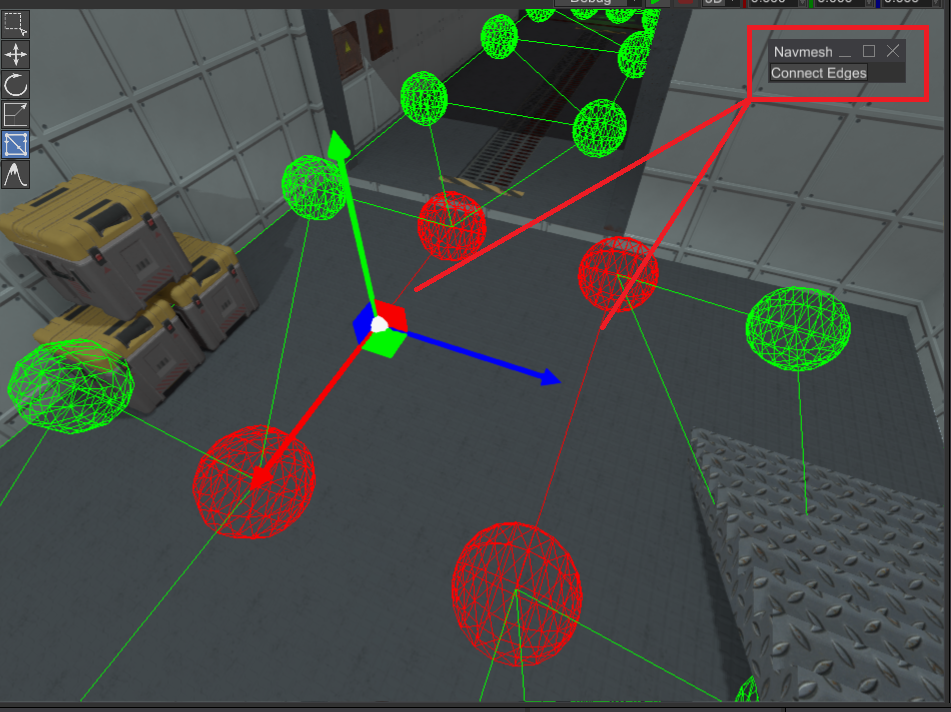

- Navmesh Panel - allows you to create, delete, and edit navigational meshes.

- Command Stack - displays your most recent actions and allows you to undo or redo their changes.

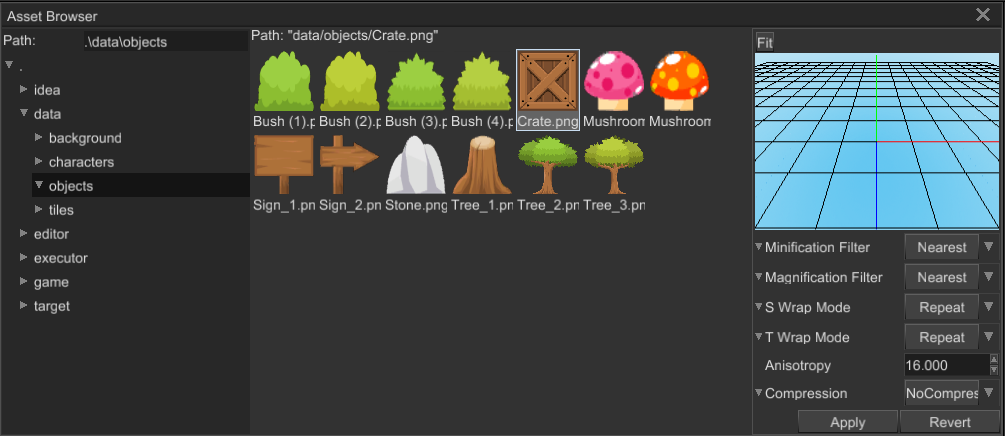

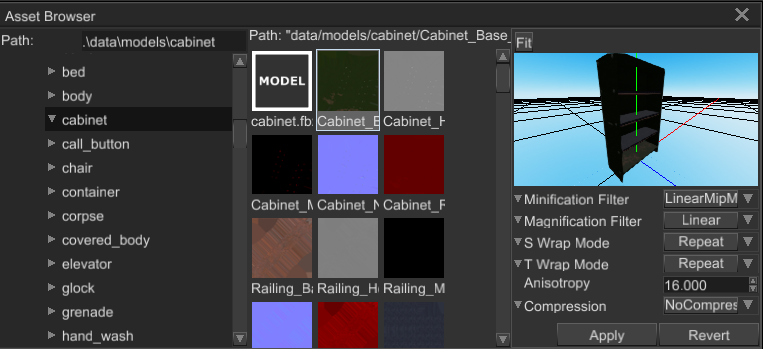

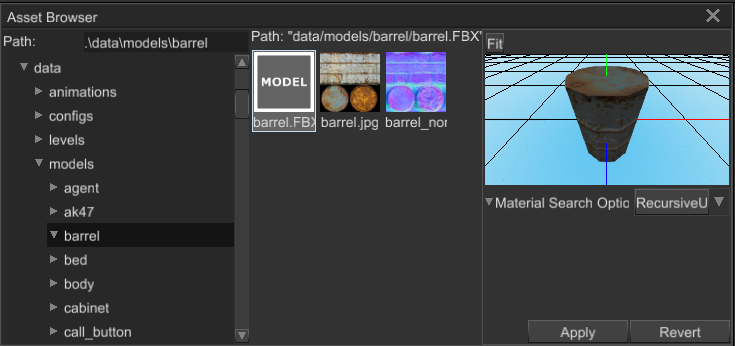

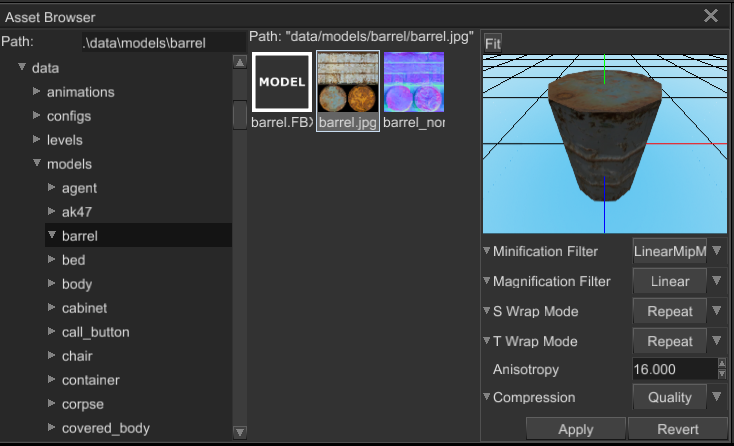

- Asset Browser - allows you to inspect the assets of your game and to instantiate resources in the scene, among other things.

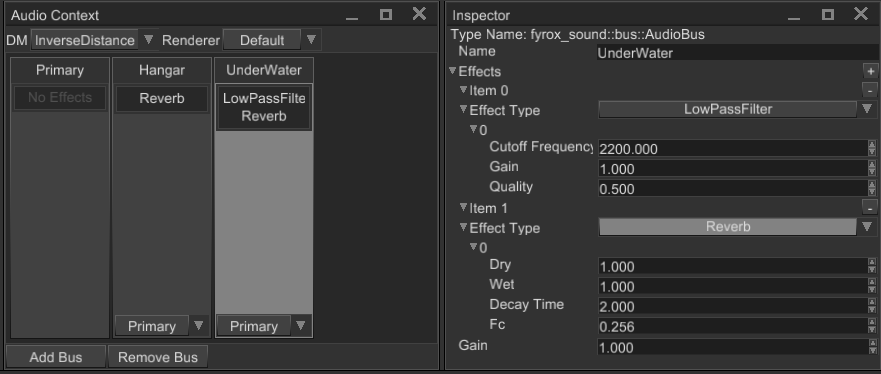

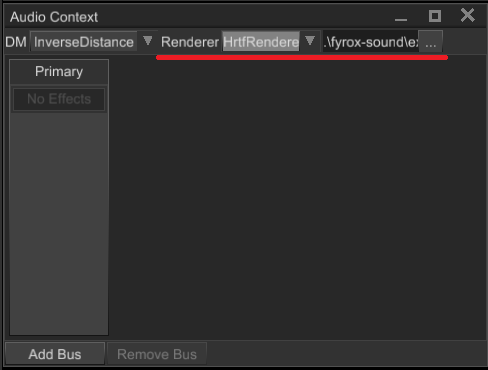

- Audio Context - allows you to edit the settings of the scene's sound context (global volume, available audio buses, effects, etc.)

Creating or loading a Scene

FyroxEd works with scenes: a scene is a container for game entities, and you can create and edit one scene at a time. You must have a scene loaded to begin working with the editor. To create a scene, go to File -> New Scene.

To load an existing scene, go to File -> Load and select the desired scene through the file browser. Recently opened scenes can be loaded more quickly by going to File -> Recent Scenes and selecting the desired one.

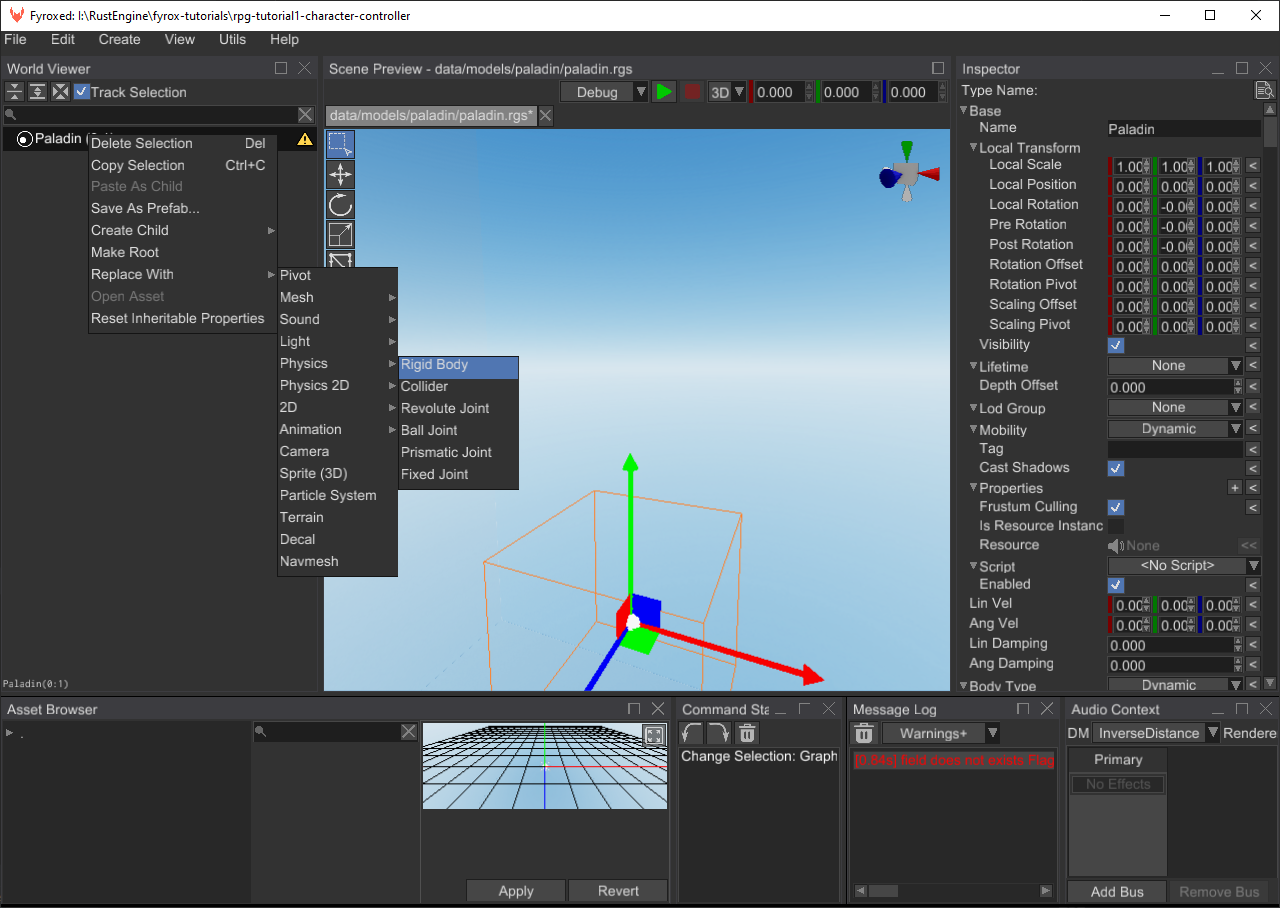

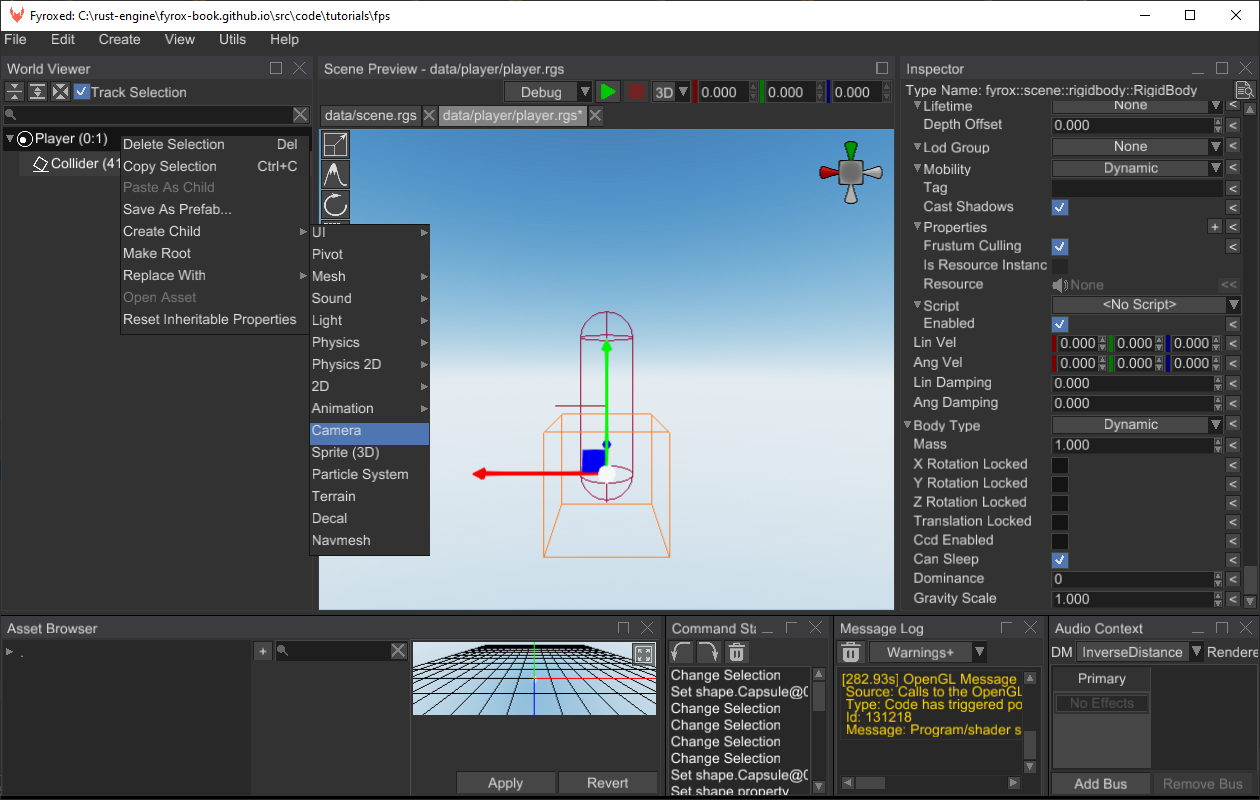

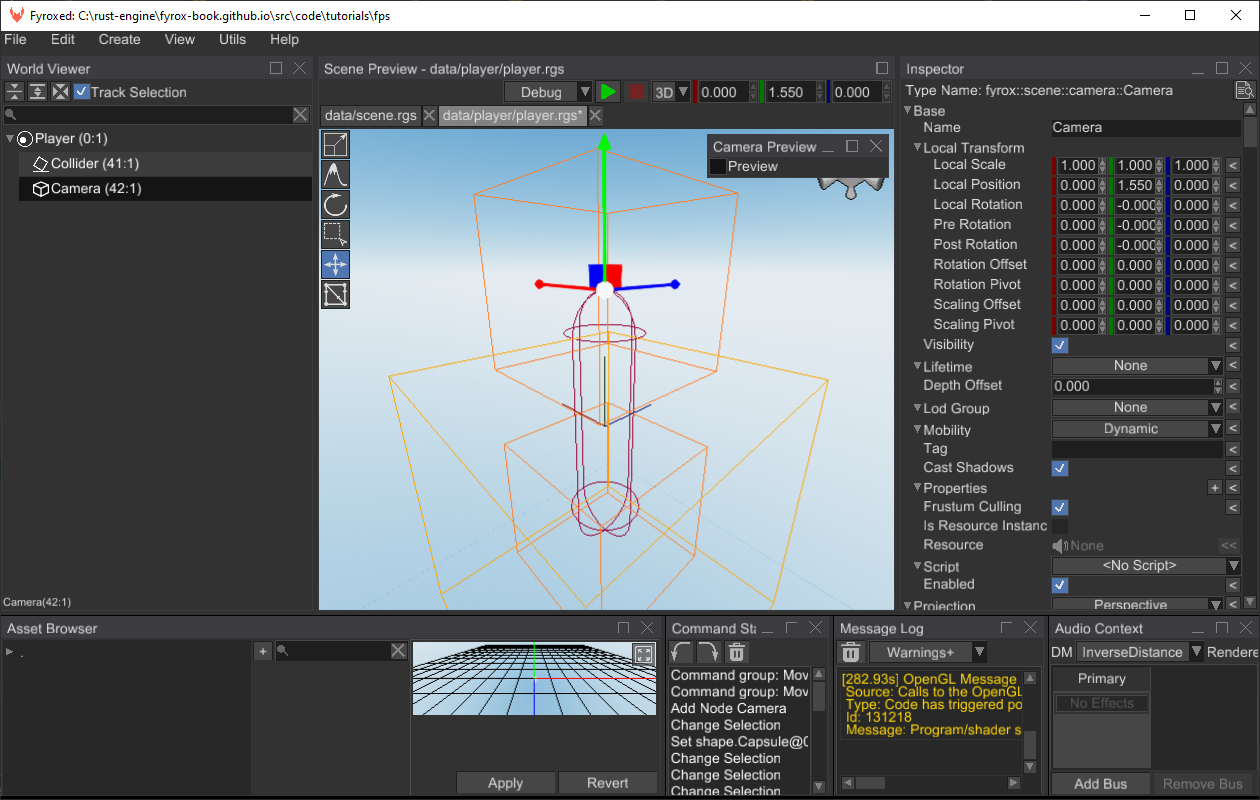

Populating a Scene

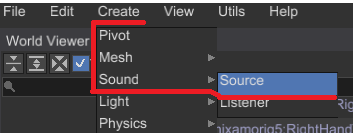

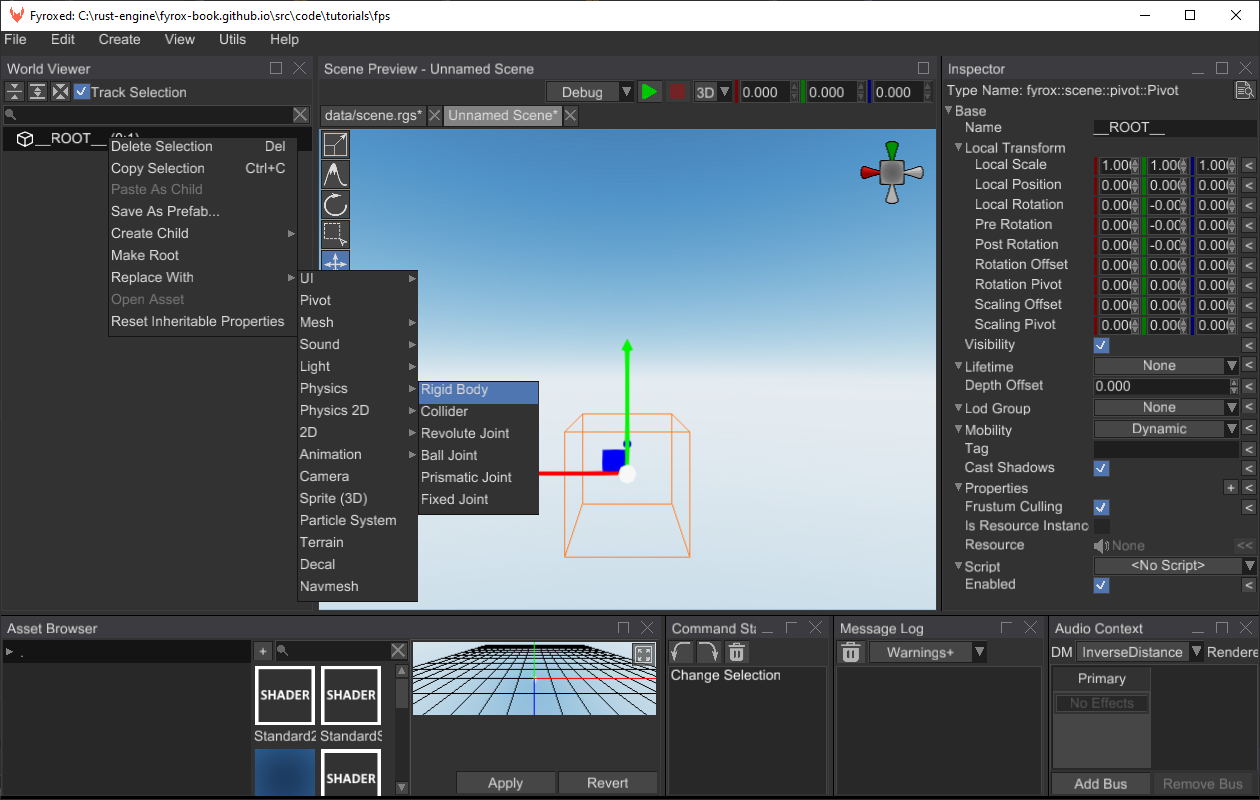

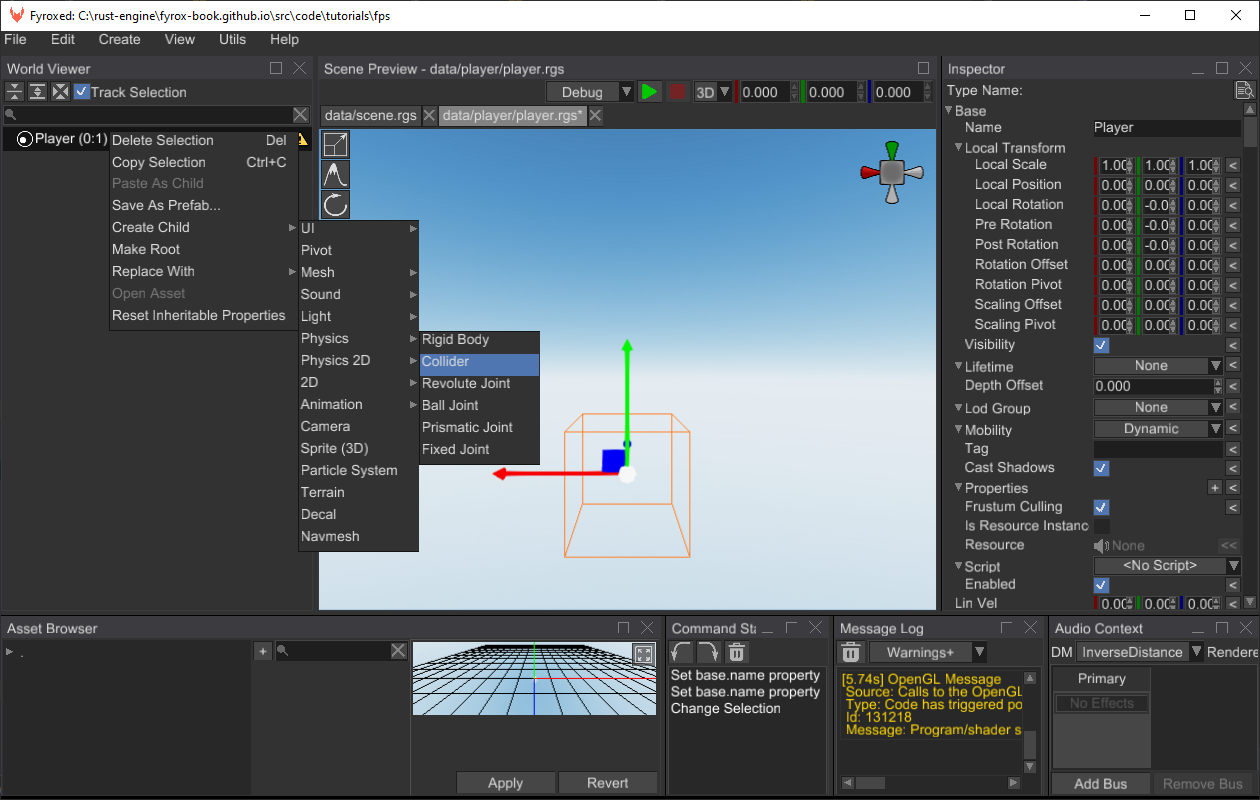

A scene can contain various game entities. There are two equivalent ways of creating these:

- By going to Create in the main menu and selecting the desired entity from the drop-down.

- By right-clicking on a game entity in the World Viewer and selecting the desired entity from the Add Child sub-menu.

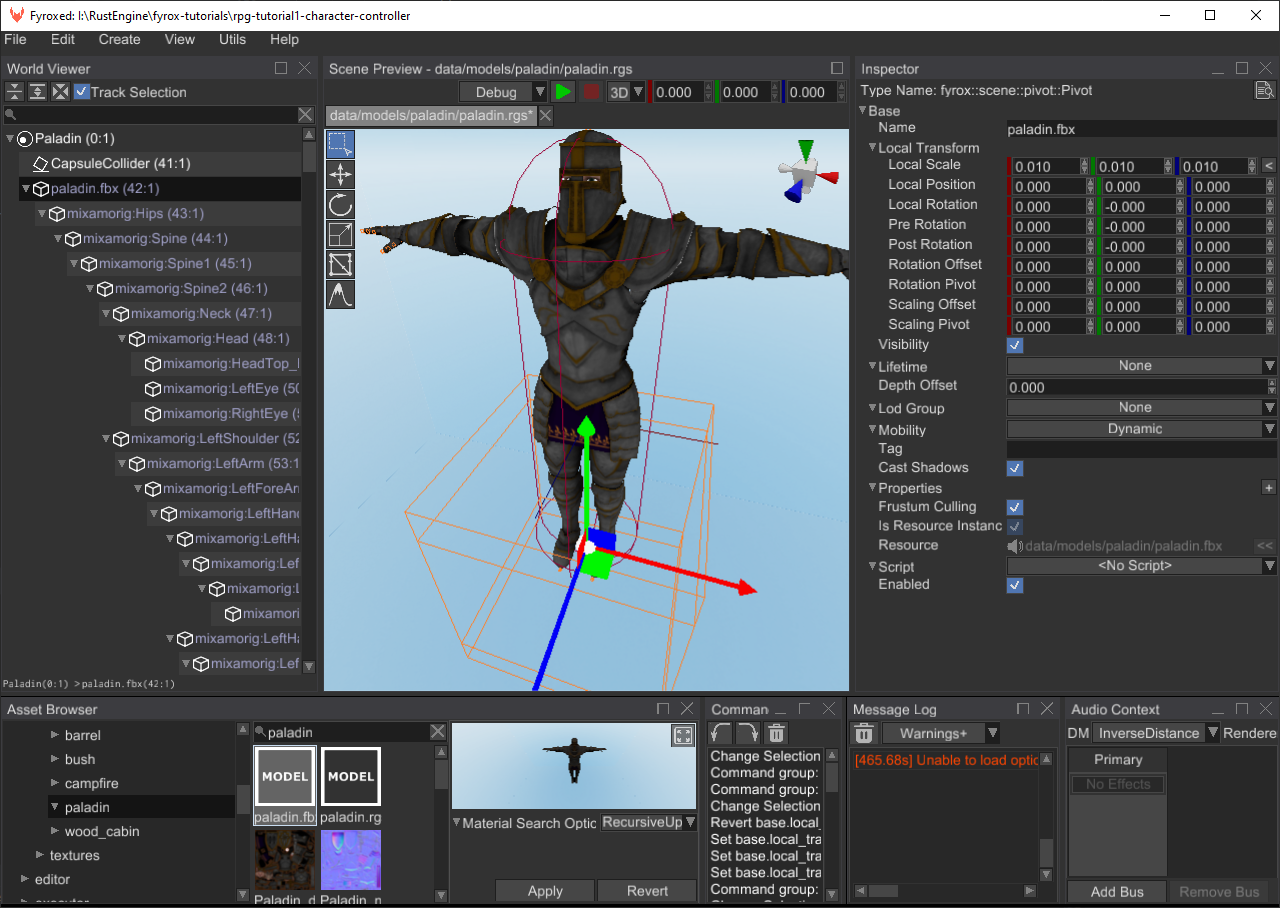

Complex objects are usually made in 3D modelling software (Blender, 3Ds Max, Maya, etc.) and can be saved in various formats. Fyrox supports the FBX format, which is supported by pretty much any 3D modelling software. You can instantiate such objects by simply dragging the one you want and dropping it on the Scene Preview. While dragging it, you'll also see a preview of the object.

You can do the same with other scenes made in the editor (rgs files). For example, you can create a scene with a few objects and some scripts in it and reuse it within other scenes. Such scenes are called prefabs.

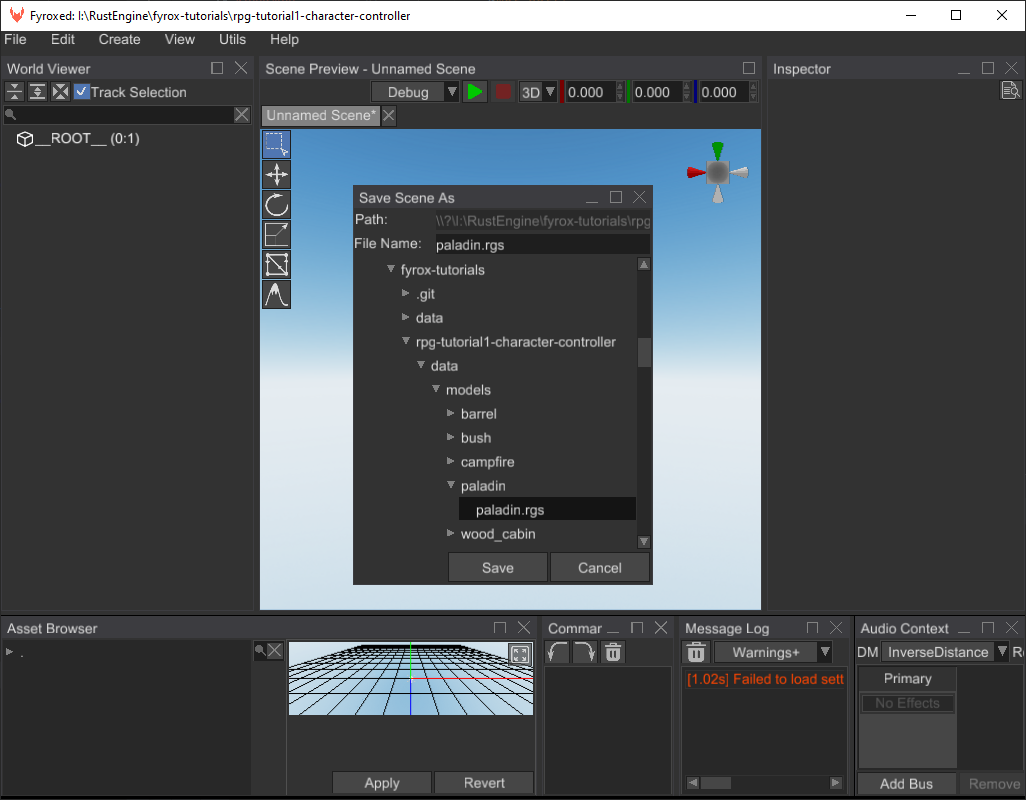

Saving a Scene

To save your work, go to File -> Save. If you're saving a new scene, the editor will ask you to specify a file name and a path to where the scene will be saved. Scenes loaded from a file will automatically be saved to the path they were loaded from.

Undoing and redoing

FyroxEd remembers your actions and allows you to undo and redo the changes they made. You can undo or redo changes either by going to Edit -> Undo/Redo or through the usual shortcuts: Ctrl+Z to undo, Ctrl+Y to redo.
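Undo/redo of this kind is commonly built on the command pattern: every editing action is an object that knows how to apply and revert itself, and a stack tracks which actions are currently applied. The sketch below assumes that approach; `Command`, `AddNode`, and `CommandStack` are hypothetical names, and the scene is reduced to a list of node names, so this is not FyroxEd's actual implementation.

```rust
/// Hypothetical editor action that can be applied and reverted.
pub trait Command {
    fn execute(&mut self, scene: &mut Vec<String>);
    fn revert(&mut self, scene: &mut Vec<String>);
}

/// Example action: add a node (represented by its name) to the scene.
pub struct AddNode {
    pub name: String,
}

impl Command for AddNode {
    fn execute(&mut self, scene: &mut Vec<String>) {
        scene.push(self.name.clone());
    }
    fn revert(&mut self, scene: &mut Vec<String>) {
        // Undo happens in reverse order, so this node is the last one.
        scene.pop();
    }
}

/// Hypothetical command stack with an index of the applied commands.
#[derive(Default)]
pub struct CommandStack {
    commands: Vec<Box<dyn Command>>,
    top: usize, // number of commands currently applied
}

impl CommandStack {
    pub fn do_command(&mut self, mut command: Box<dyn Command>, scene: &mut Vec<String>) {
        // A new action discards everything that was undone before it.
        self.commands.truncate(self.top);
        command.execute(scene);
        self.commands.push(command);
        self.top += 1;
    }

    /// Returns false if there is nothing to undo.
    pub fn undo(&mut self, scene: &mut Vec<String>) -> bool {
        if self.top == 0 {
            return false;
        }
        self.top -= 1;
        self.commands[self.top].revert(scene);
        true
    }

    /// Returns false if there is nothing to redo.
    pub fn redo(&mut self, scene: &mut Vec<String>) -> bool {
        if self.top == self.commands.len() {
            return false;
        }
        self.commands[self.top].execute(scene);
        self.top += 1;
        true
    }
}
```

Performing a new action after an undo discards the undone commands, which is why redo is no longer possible at that point.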

Controls

There are a number of control keys that you'll be using most of the time; pretty much all of them work in the Scene Preview window:

Editor camera movement

Click and hold [Right Mouse Button] within the Scene Preview window to enable the movement controls:

- [W][S][A][D] - Move camera forward/backward/left/right

- [Space][Q]/[E] - Raise/Lower camera

- [Ctrl] - Speed up

- [Shift] - Slow down

Others

- [Left Mouse Button] - Select

- [Middle Mouse Button] - Pan camera in viewing plane

- [1] - Select interaction mode

- [2] - Move interaction mode

- [3] - Scale interaction mode

- [4] - Rotate interaction mode

- [5] - Navigational mesh editing mode

- [6] - Terrain editing interaction mode

- [Ctrl]+[Z] - Undo

- [Ctrl]+[Y] - Redo

- [Delete] - Delete current selection.

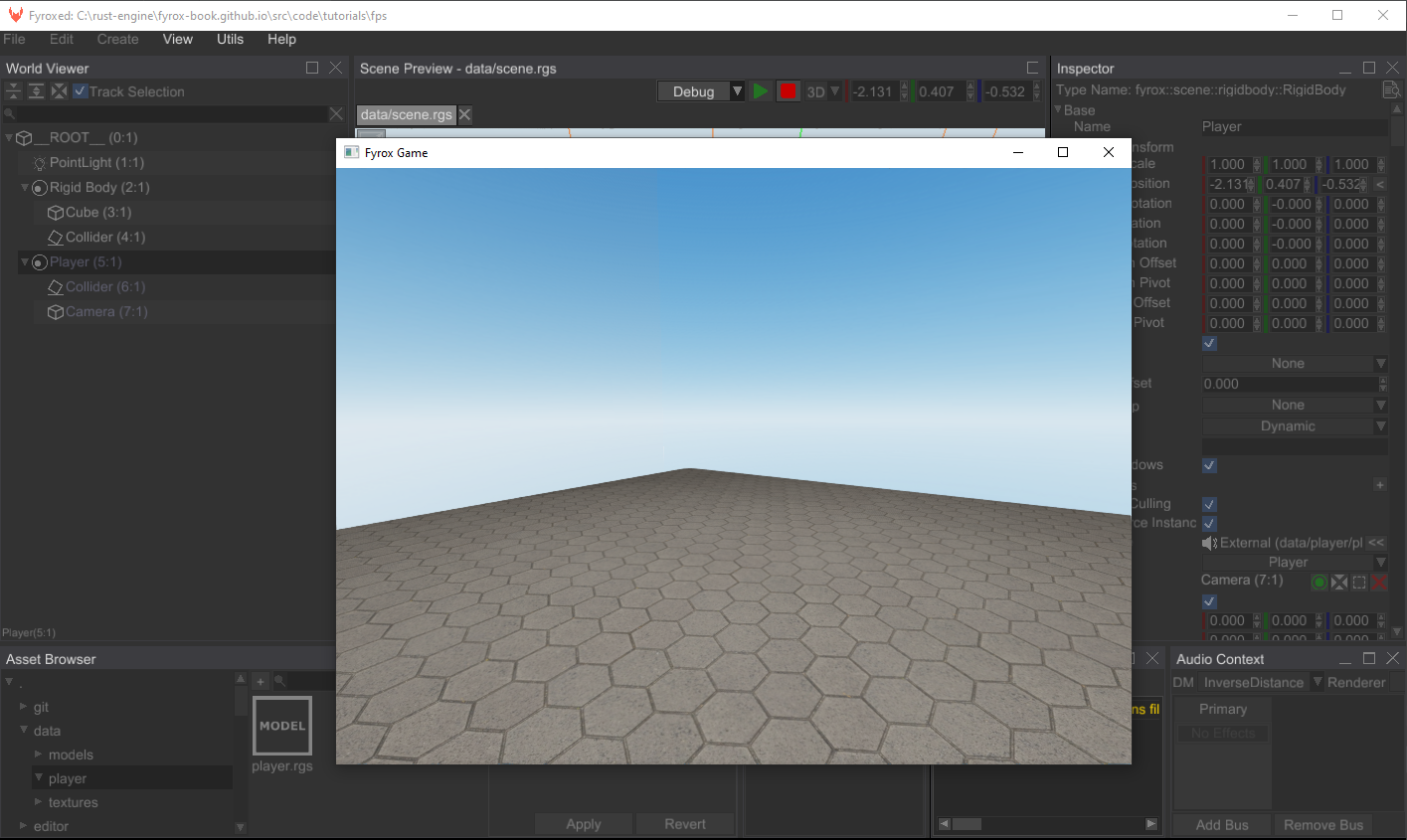

Play Mode

One of the key features of the editor is that it allows you to run your game from it in a separate process. Use the Play/Stop button at the top of the Scene Preview window to enter or leave Play Mode. Keep in mind that the editor UI will be locked while you're in Play Mode.

Play Mode can be activated only for projects made with fyrox-template (or for projects with a similar structure). The editor calls cargo commands to build and run your game in a separate process. Running the game in a separate process ensures that the editor won't crash if your game does; it also provides excellent isolation between the game and the editor, so running the game cannot break the editor.
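Running the game as a child process can be sketched with the standard library's `std::process::Command`. This is an illustration, not the editor's code: the helper names (`game_command`, `play`, `stop`) are hypothetical, and it assumes the game is launched with the executor command from the project generator section (`cargo run --package executor --release`) inside the project directory.

```rust
use std::io;
use std::process::{Child, Command};

/// Builds (but does not start) the command that runs the game.
/// Assumption: the project follows the fyrox-template layout with an
/// `executor` package.
pub fn game_command(project_dir: &str) -> Command {
    let mut cmd = Command::new("cargo");
    cmd.args(["run", "--package", "executor", "--release"])
        .current_dir(project_dir);
    cmd
}

/// "Play": start the game as a separate child process.
pub fn play(project_dir: &str) -> io::Result<Child> {
    game_command(project_dir).spawn()
}

/// "Stop": terminate the game process and reap it.
pub fn stop(child: &mut Child) -> io::Result<()> {
    // The game may already have exited (or crashed) on its own,
    // so a failed kill is not treated as an error here.
    let _ = child.kill();
    child.wait()?;
    Ok(())
}
```

Because the game lives in its own process, a panic or crash there only ends the child; the process holding the `Child` handle keeps running.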

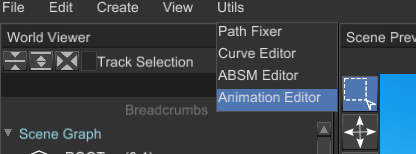

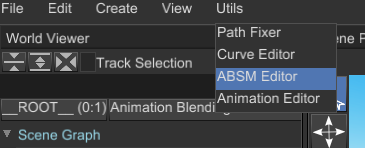

Additional Utilities

There are also a number of powerful utilities that will make your life easier; they can be found under the Utils section of the main menu:

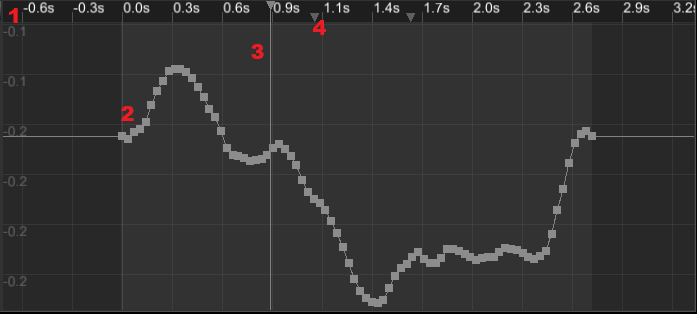

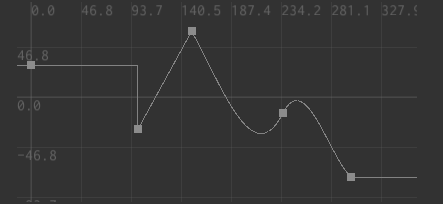

- Curve Editor - allows you to create and edit curve resources to make complex laws for game parameters.

- Path Fixer - helps you fix incorrect resource references in your scenes.
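A curve resource maps an input (for example, time) to a parameter value through a set of keys. As a rough sketch, assuming simple linear interpolation between keys and clamping outside the key range, evaluation could look like this; `Key` and `LinearCurve` are hypothetical names, and the engine's own curves may support other interpolation modes.

```rust
/// Hypothetical curve key: an input location and the value at that location.
#[derive(Debug, Clone, Copy)]
pub struct Key {
    pub location: f32,
    pub value: f32,
}

/// Hypothetical piecewise-linear curve (not the engine's curve type).
pub struct LinearCurve {
    keys: Vec<Key>,
}

impl LinearCurve {
    pub fn new(mut keys: Vec<Key>) -> Self {
        // Keep keys ordered by location so neighbors can be found by scanning.
        keys.sort_by(|a, b| a.location.total_cmp(&b.location));
        Self { keys }
    }

    /// Linear interpolation between the two surrounding keys;
    /// inputs outside the key range are clamped to the first/last key.
    pub fn value_at(&self, t: f32) -> f32 {
        let (first, last) = match (self.keys.first(), self.keys.last()) {
            (Some(f), Some(l)) => (*f, *l),
            _ => return 0.0, // an empty curve evaluates to zero
        };
        if t <= first.location {
            return first.value;
        }
        if t >= last.location {
            return last.value;
        }
        for pair in self.keys.windows(2) {
            let (a, b) = (pair[0], pair[1]);
            if t <= b.location {
                let k = (t - a.location) / (b.location - a.location);
                return a.value + (b.value - a.value) * k;
            }
        }
        last.value
    }
}
```

For example, with keys at (0, 0), (1, 10), and (3, 30), the value at 2.0 lies halfway between the last two keys, giving 20.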

Scene and Scene Graph

When you're playing a game, you often see various objects scattered around the screen; together they form a scene. A scene is just a set of various objects. As in many other game engines, Fyrox allows you to create multiple scenes for multiple purposes: for example, one scene could be used for a menu, a bunch of others for game levels, and another one for an ending screen. Scenes can also be used as a source of data for other scenes; such scenes are called prefabs. Scenes can also be rendered into a texture, which can be used in other scenes; this way you can create interactive screens that show other places.

While playing games, you may have noticed that some objects behave as if they were linked to other objects; for example, a character in a role-playing game could carry a sword. While the character holds the sword, it is linked to his arm. Such relations between objects can be represented by a graph structure.

Simply speaking, a graph is a set of objects with hierarchical relationships between each object. Each object in the graph is called a node. In the example with the sword and the character, the sword is a child node of the character, and the character is a parent node of the sword (here we ignore the fact that in reality, character models usually contain complex skeletons, with the sword actually being attached to one of the hands' bones, not to the character).

You can change the hierarchy of nodes in the editor using simple drag'n'drop in the World Viewer: drag a node onto some other node, and it will attach itself to it.
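Parent/child relations and re-parenting can be sketched with a handle-based graph. This is a simplified illustration, not Fyrox's graph API: `Handle`, `Node`, `Graph`, `link`, and `global_position` are hypothetical names, transforms are reduced to translations, and `link` refuses to attach a node under its own descendant so that the hierarchy stays a tree.

```rust
/// Hypothetical handle: an index into the graph's node storage.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Handle(usize);

pub struct Node {
    pub name: String,
    pub local_position: [f32; 3], // stands in for the full local transform
    parent: Option<Handle>,
    children: Vec<Handle>,
}

/// Hypothetical scene graph that always has a root node at index 0.
pub struct Graph {
    nodes: Vec<Node>,
}

impl Graph {
    pub fn new() -> Self {
        let root = Node {
            name: "Root".to_string(),
            local_position: [0.0; 3],
            parent: None,
            children: Vec::new(),
        };
        Graph { nodes: vec![root] }
    }

    pub fn root(&self) -> Handle {
        Handle(0)
    }

    /// Adds a node and attaches it to the root.
    pub fn add(&mut self, name: &str, local_position: [f32; 3]) -> Handle {
        let handle = Handle(self.nodes.len());
        self.nodes.push(Node {
            name: name.to_string(),
            local_position,
            parent: None,
            children: Vec::new(),
        });
        let root = self.root();
        self.link(handle, root);
        handle
    }

    /// Re-parents `child` under `parent` (what drag'n'drop does conceptually).
    /// Returns false and changes nothing if that would create a cycle.
    pub fn link(&mut self, child: Handle, parent: Handle) -> bool {
        // Walk up from the new parent; meeting `child` means a cycle.
        let mut current = Some(parent);
        while let Some(h) = current {
            if h == child {
                return false;
            }
            current = self.nodes[h.0].parent;
        }
        // Detach from the old parent, then attach to the new one.
        if let Some(old) = self.nodes[child.0].parent.take() {
            self.nodes[old.0].children.retain(|&c| c != child);
        }
        self.nodes[child.0].parent = Some(parent);
        self.nodes[parent.0].children.push(child);
        true
    }

    pub fn parent(&self, node: Handle) -> Option<Handle> {
        self.nodes[node.0].parent
    }

    pub fn children(&self, node: Handle) -> &[Handle] {
        &self.nodes[node.0].children
    }

    /// Global position = sum of local offsets up the parent chain
    /// (translation only; real transforms also include rotation and scale).
    pub fn global_position(&self, node: Handle) -> [f32; 3] {
        let mut position = [0.0f32; 3];
        let mut current = Some(node);
        while let Some(h) = current {
            let local = self.nodes[h.0].local_position;
            for (acc, v) in position.iter_mut().zip(local.iter()) {
                *acc += *v;
            }
            current = self.nodes[h.0].parent;
        }
        position
    }
}
```

In the sword example, linking the sword under the character makes the sword's global position the sum of its own offset and the character's.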

Building Blocks or Scene Nodes

The engine offers various types of "building blocks" for your scene, each such block is called a scene node.

- Base - stores hierarchical information (a handle to the parent node and handles to children nodes), local and global transform, name, tag, lifetime, etc. As its name suggests, it is used as the base for every other scene node via composition.

- Mesh - represents a 3D model. This is one of the most commonly used nodes in almost every game. Meshes can be easily created either programmatically or in some 3D modelling software, such as Blender, and then loaded into the scene.

- Light - represents a light source. There are three types of light sources:

- Point - emits light in every direction. A real-world example would be a light bulb.

- Spot - emits light in a particular direction, with a cone-like shape. A real-world example would be a flashlight.

- Directional - emits light in a particular direction, but does not have position. The closest real-world example would be the Sun.

- Camera - allows you to see the world. You must have at least one camera in your scene to be able to see anything.

- Sprite - represents a quad that always faces towards a camera. It can have a texture and a size, and it can also be rotated around the "look" axis.

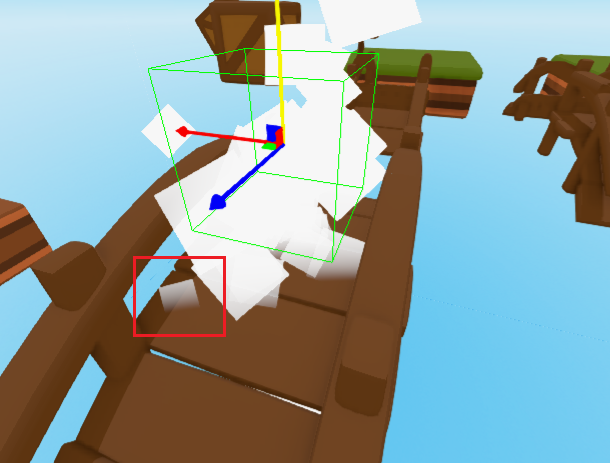

- Particle system - allows you to create visual effects using a huge set of small particles. It can be used to create smoke, sparks, blood splatters, etc.

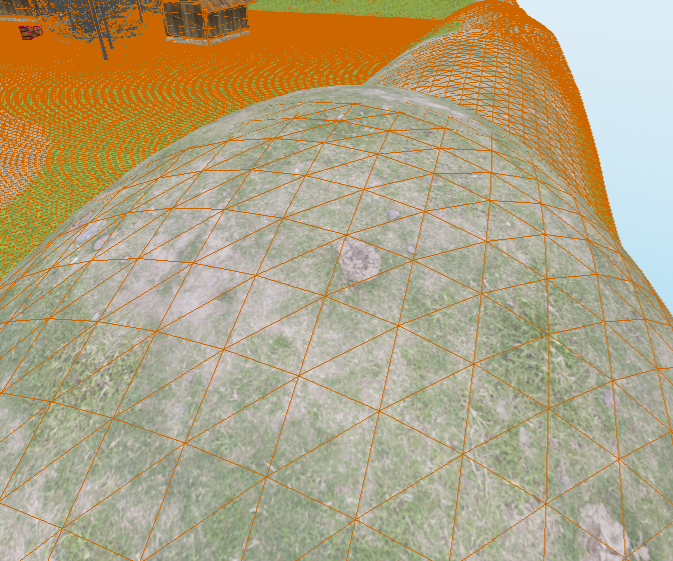

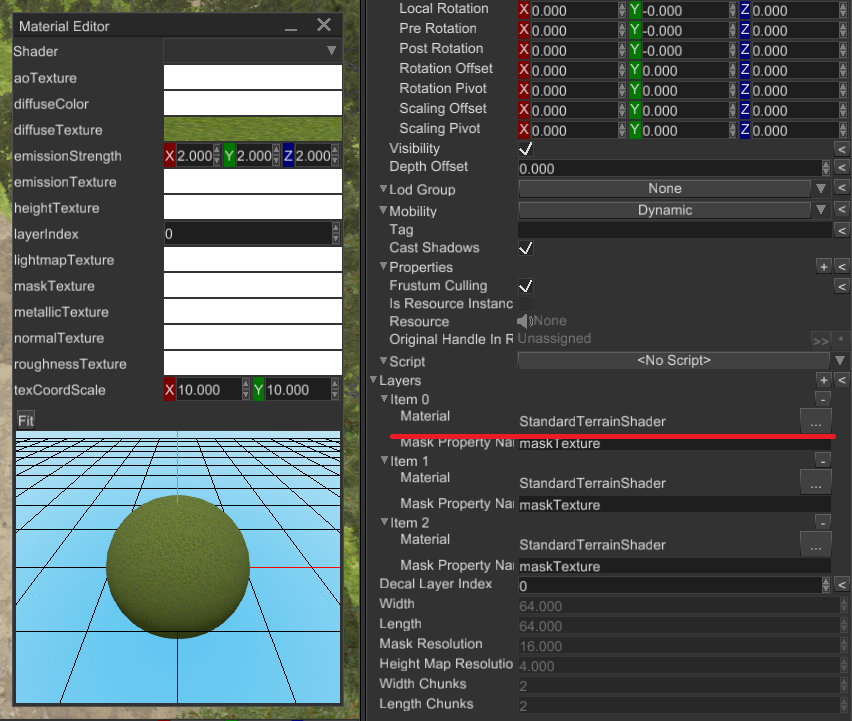

- Terrain - allows you to create complex landscapes with minimal effort.

- Decal - paints on other nodes using a texture. It is used to simulate cracks in concrete walls, damaged parts of the road, blood splatters, bullet holes, etc.

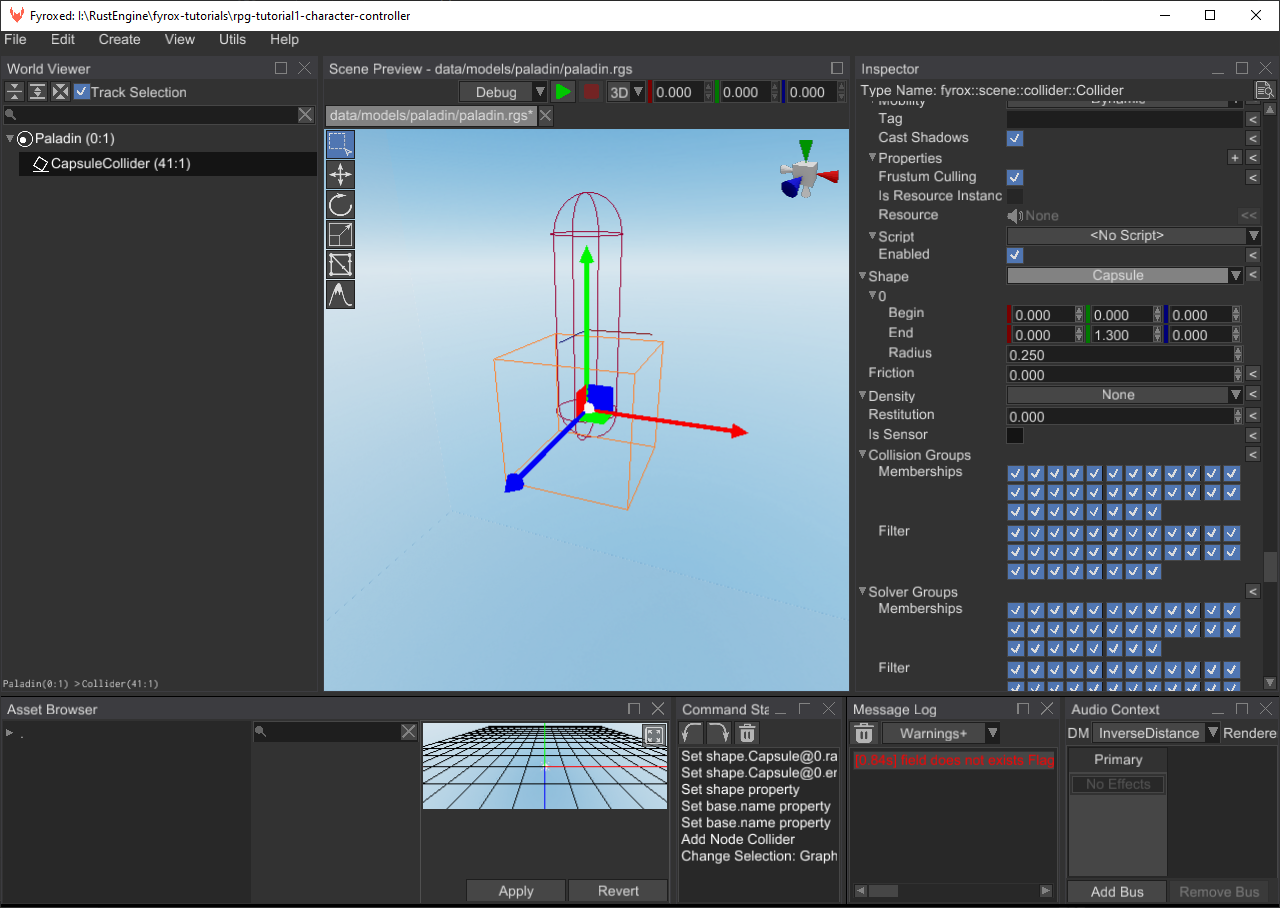

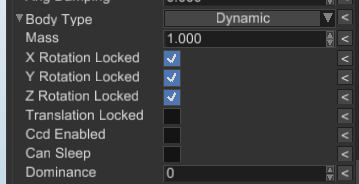

- Rigid Body - a physical entity that is responsible for the dynamics of a rigid body. There is a special variant for 2D - RigidBody2D.

- Collider - a physical shape for a rigid body. It is responsible for contact manifold generation; without it, a rigid body will not participate in the simulation correctly, so every rigid body must have at least one collider. There is a special variant for 2D - Collider2D.

- Joint - a physical entity that restricts motion between two rigid bodies. It has various degrees of freedom depending on the type of the joint. There is a special variant for 2D - Joint2D.

- Rectangle - a simple rectangle mesh that can have a texture and a color. It is a very simple version of a Mesh node, yet it uses a highly optimized renderer that allows you to render dozens of rectangles simultaneously. This node is intended for use in 2D games only.

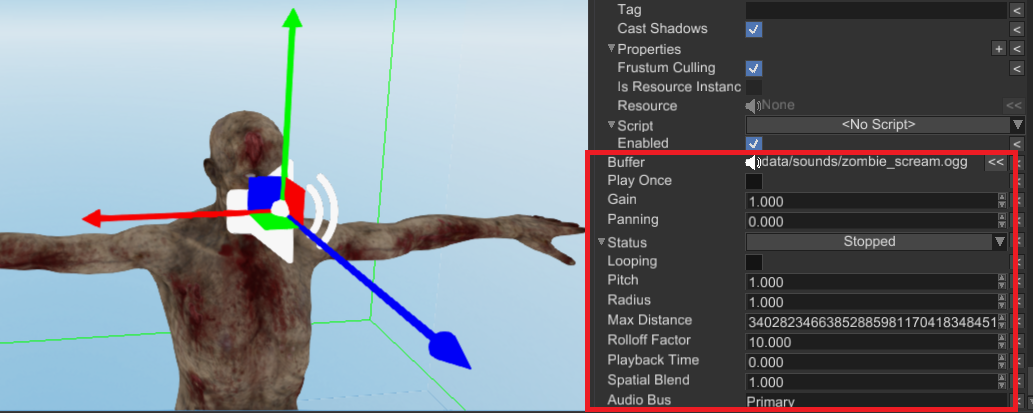

- Sound - a sound source that works in both 2D and 3D. Its spatial blend factor lets you choose the proportion between 2D and 3D.

- Listener - an audio receiver that captures the sound at a particular point in your scene and sends it to an audio context for processing and outputting to an audio playback device.

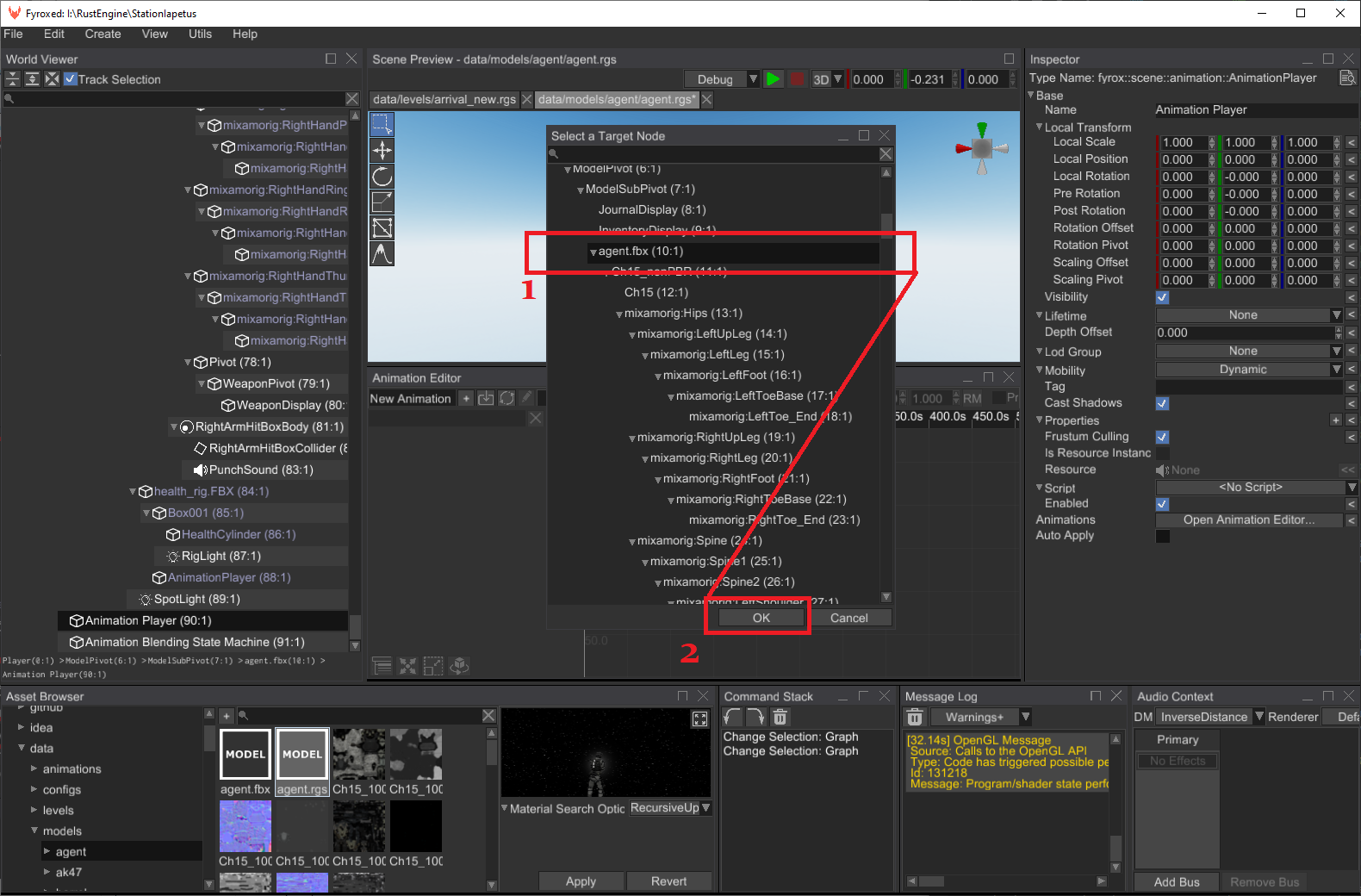

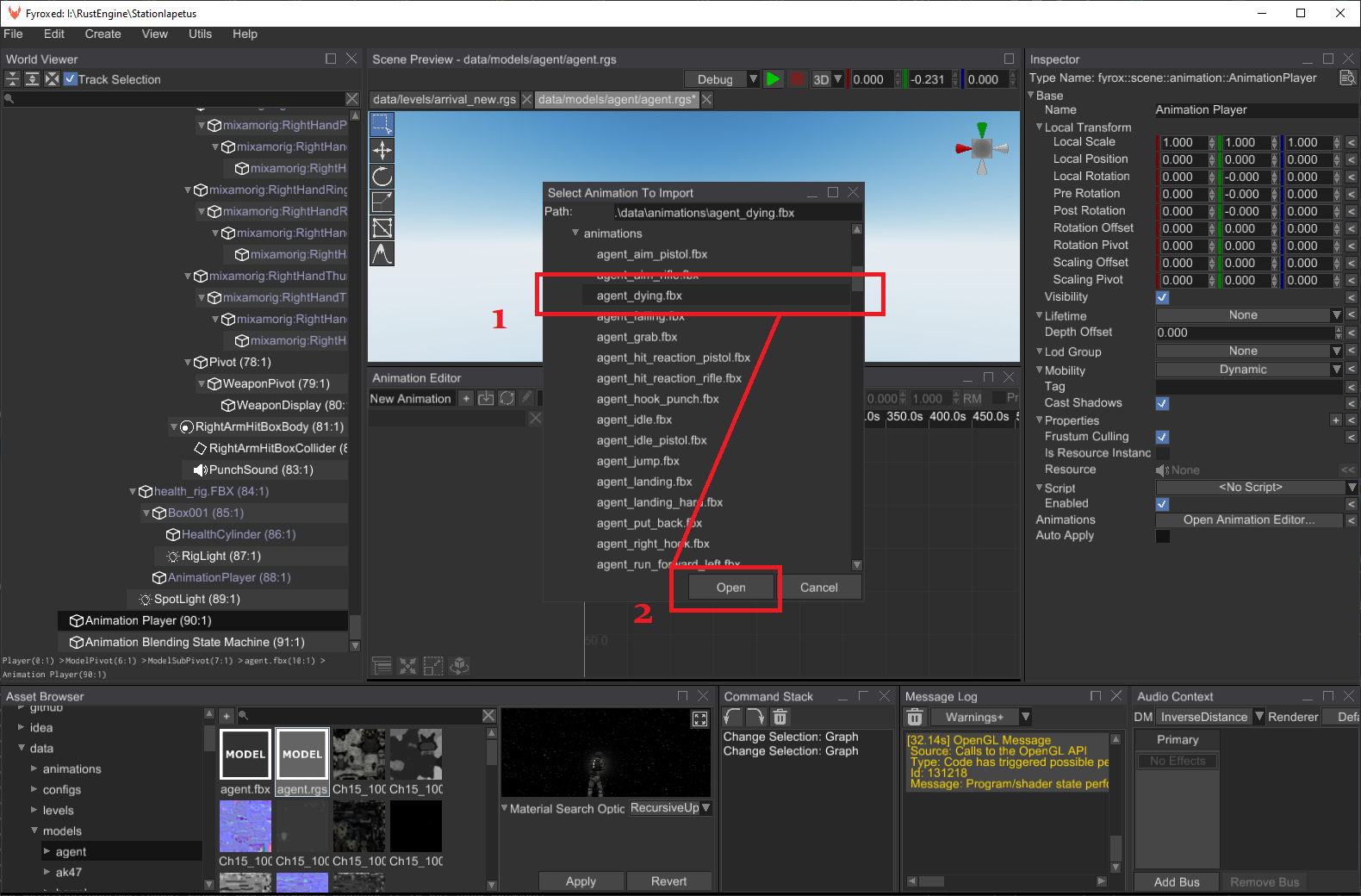

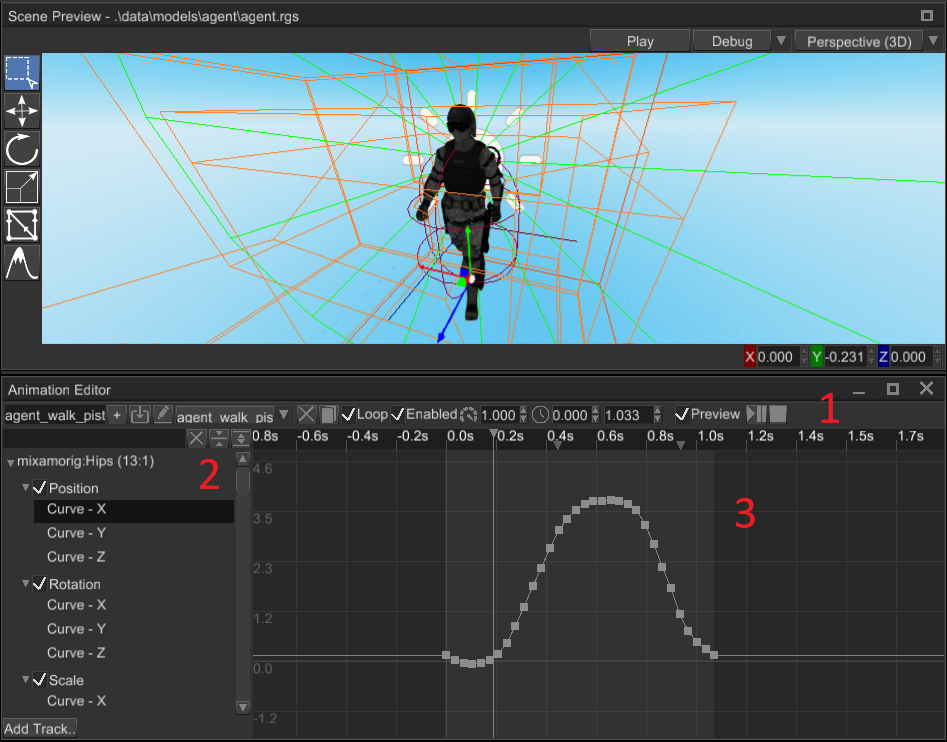

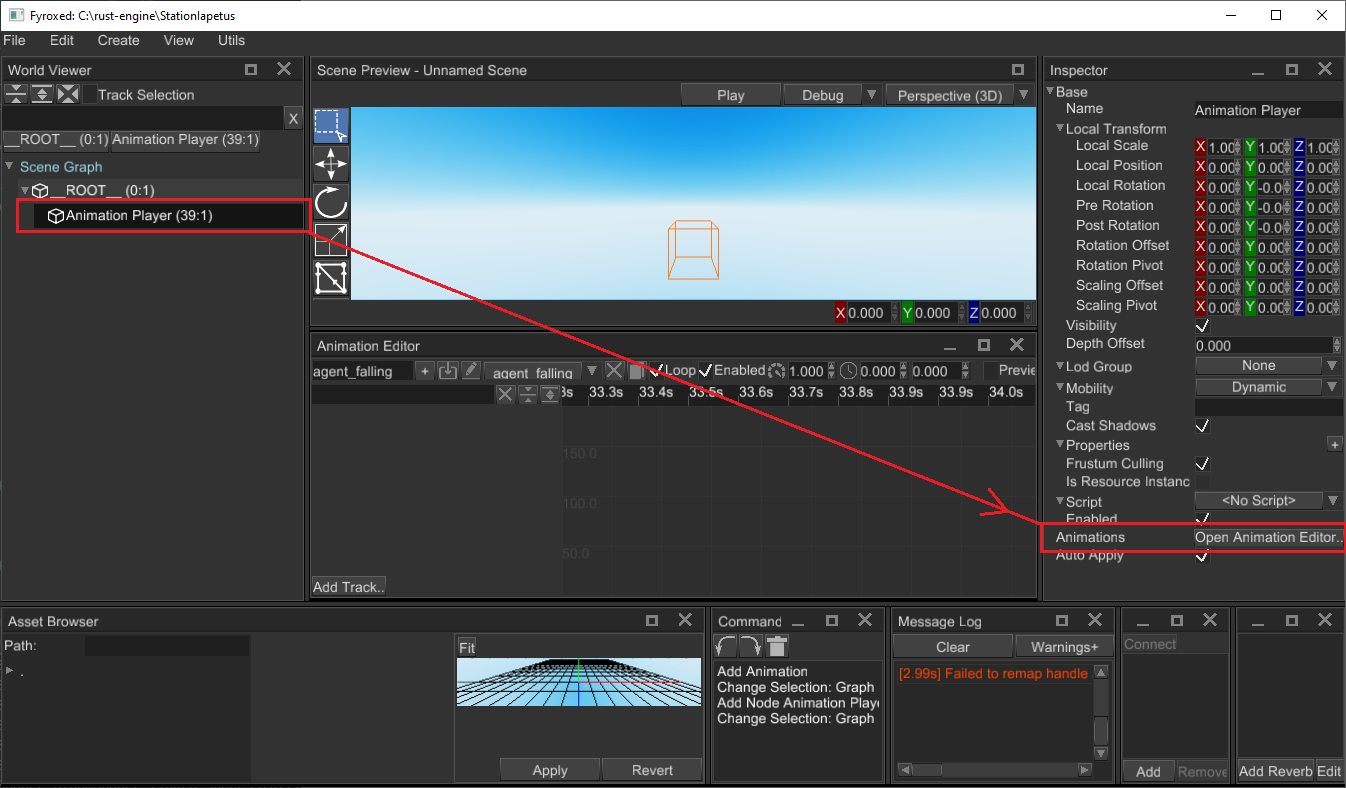

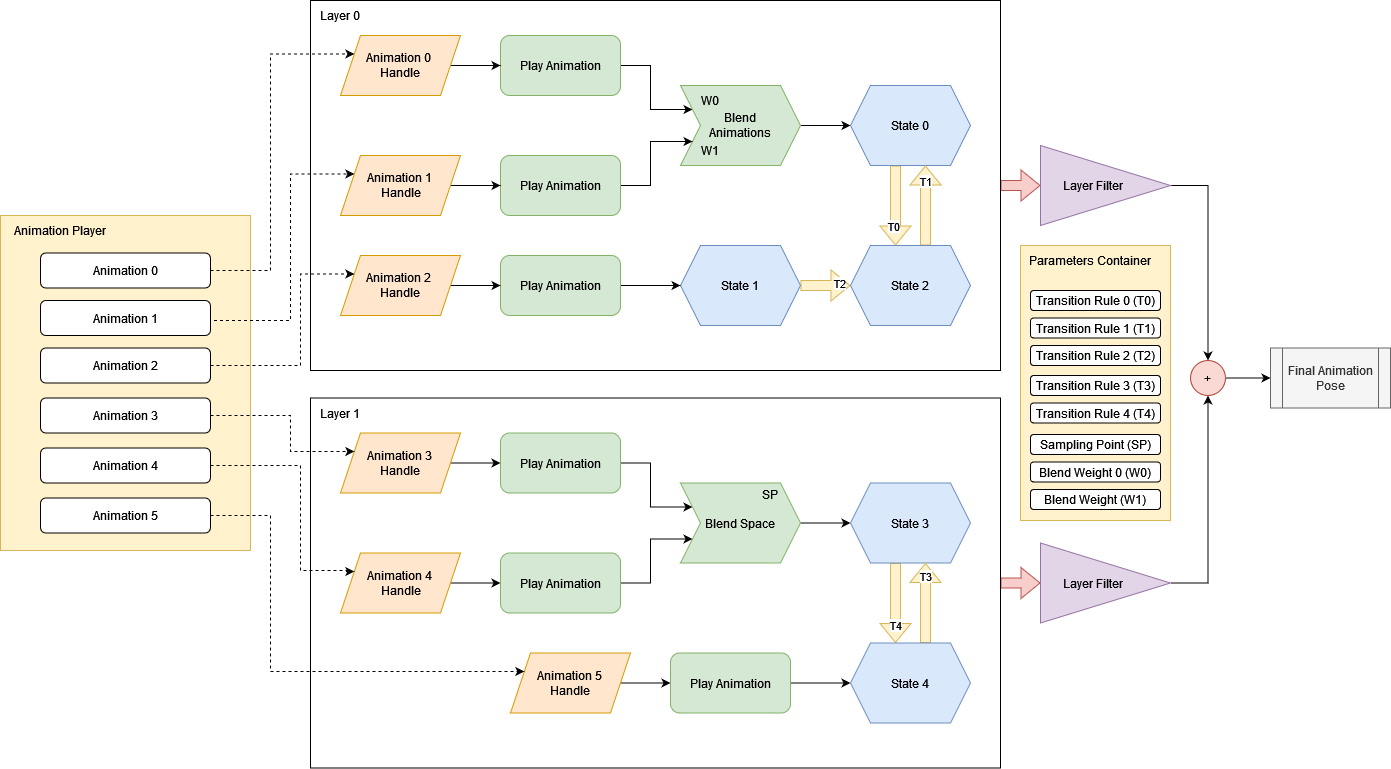

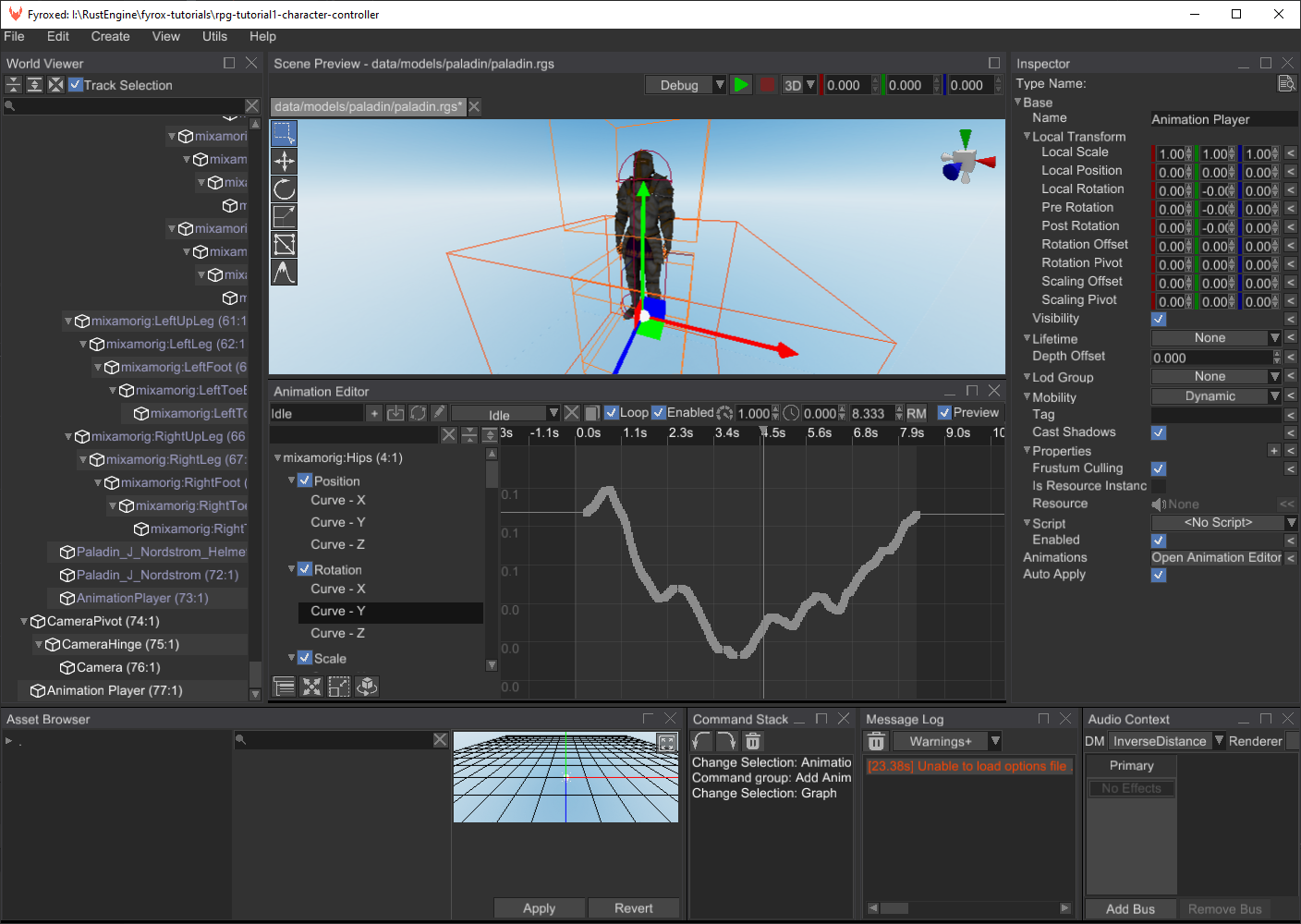

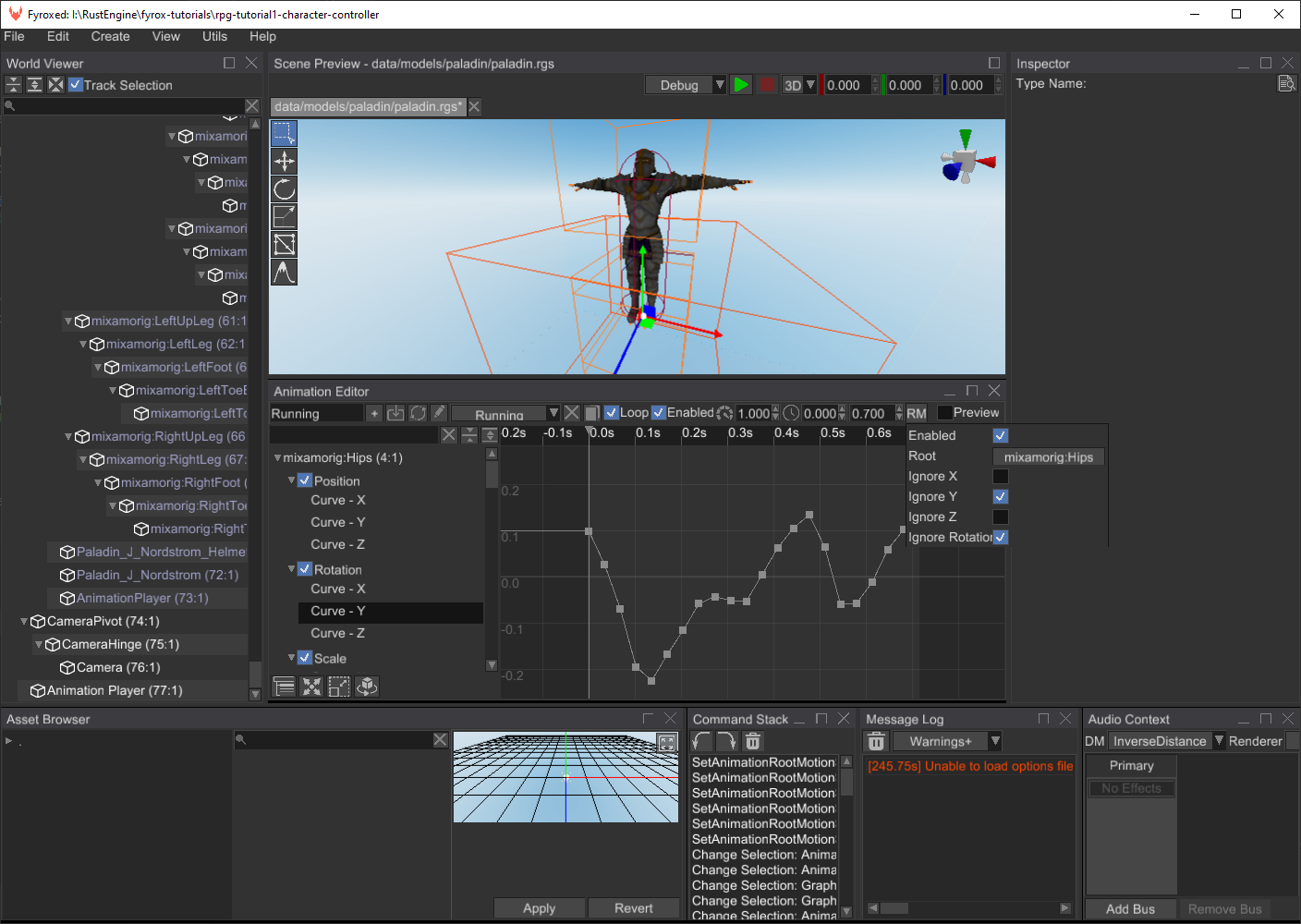

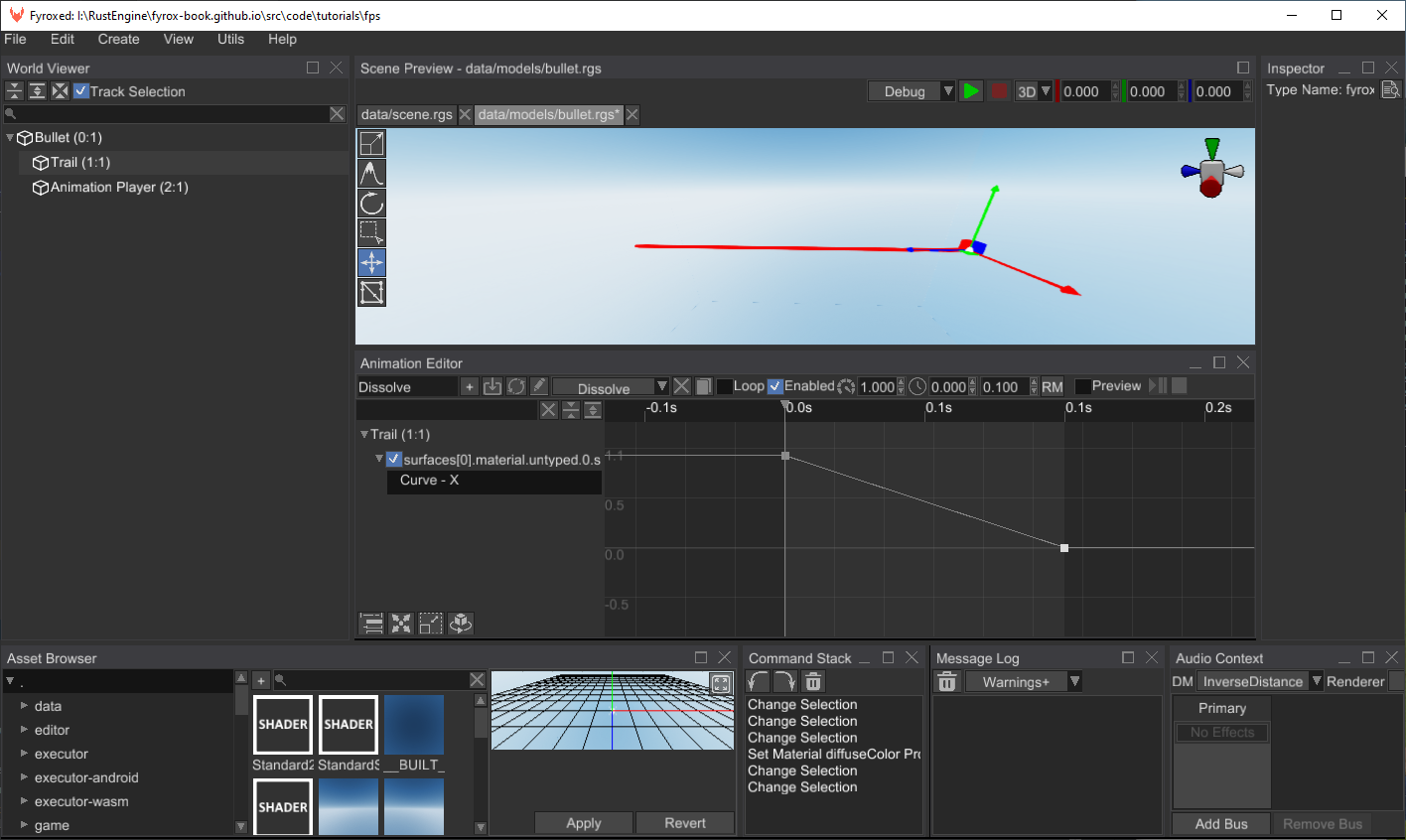

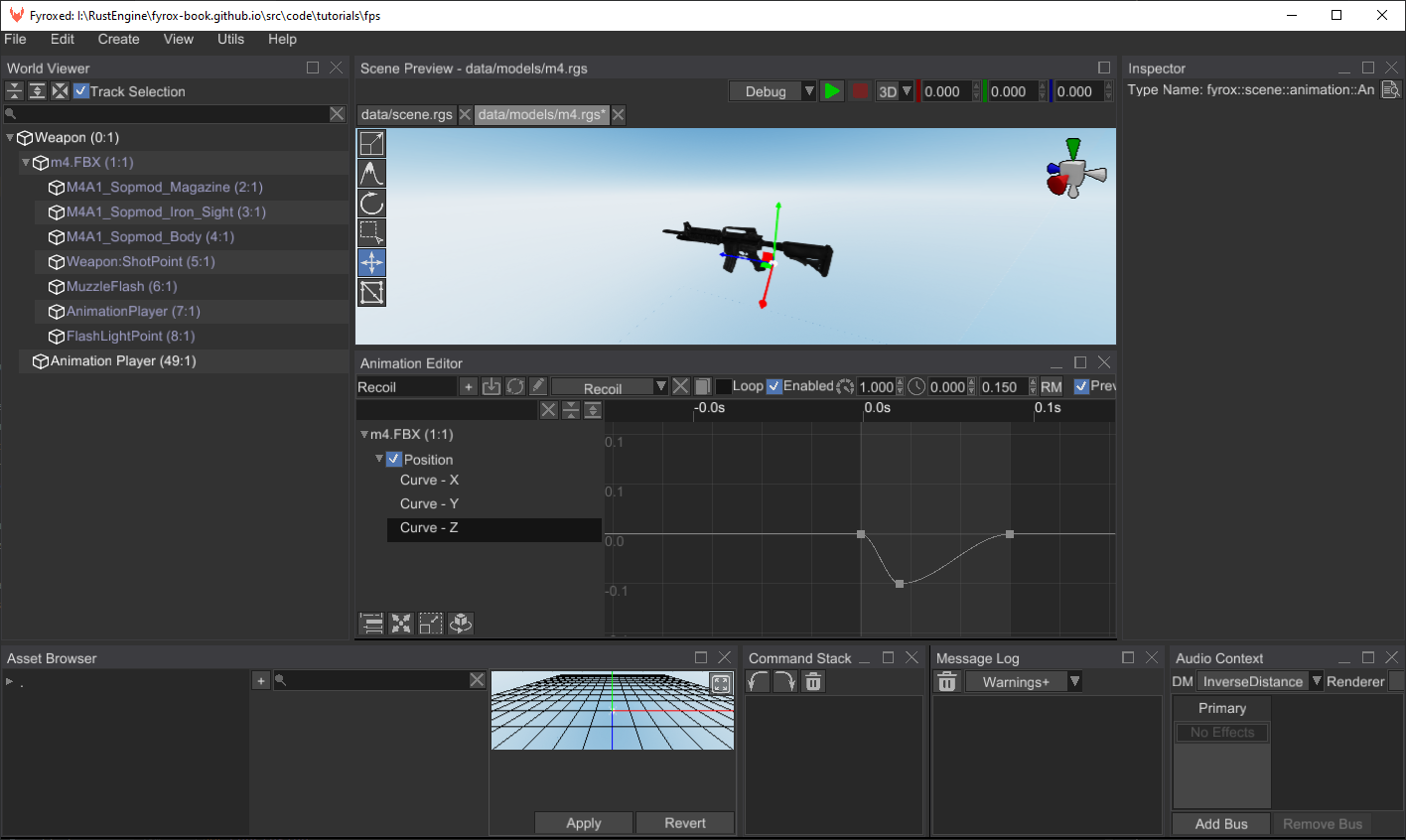

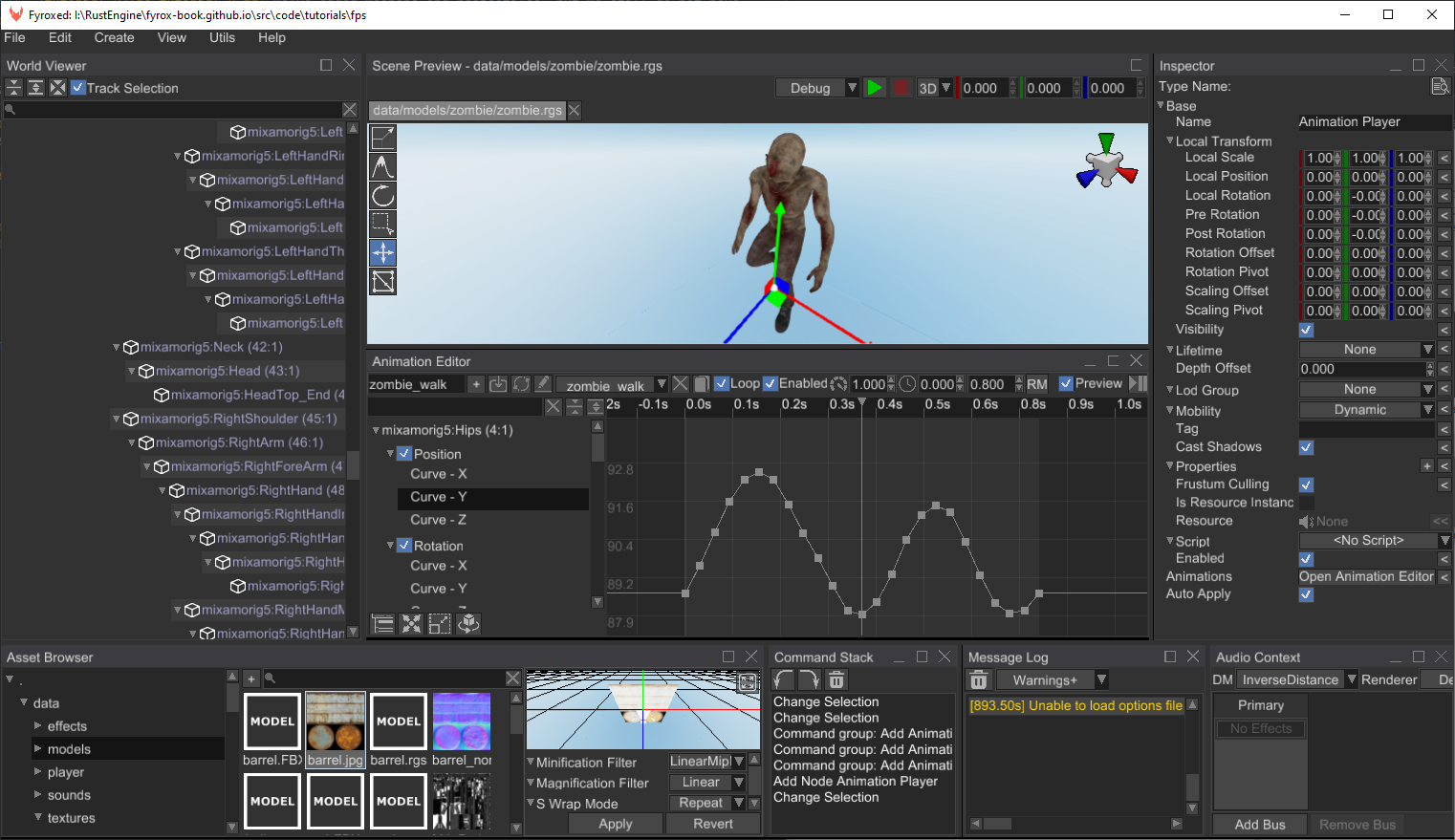

- Animation Player - a container for multiple animations. It can play animations made in the animation editor and apply animation poses to respective scene nodes.

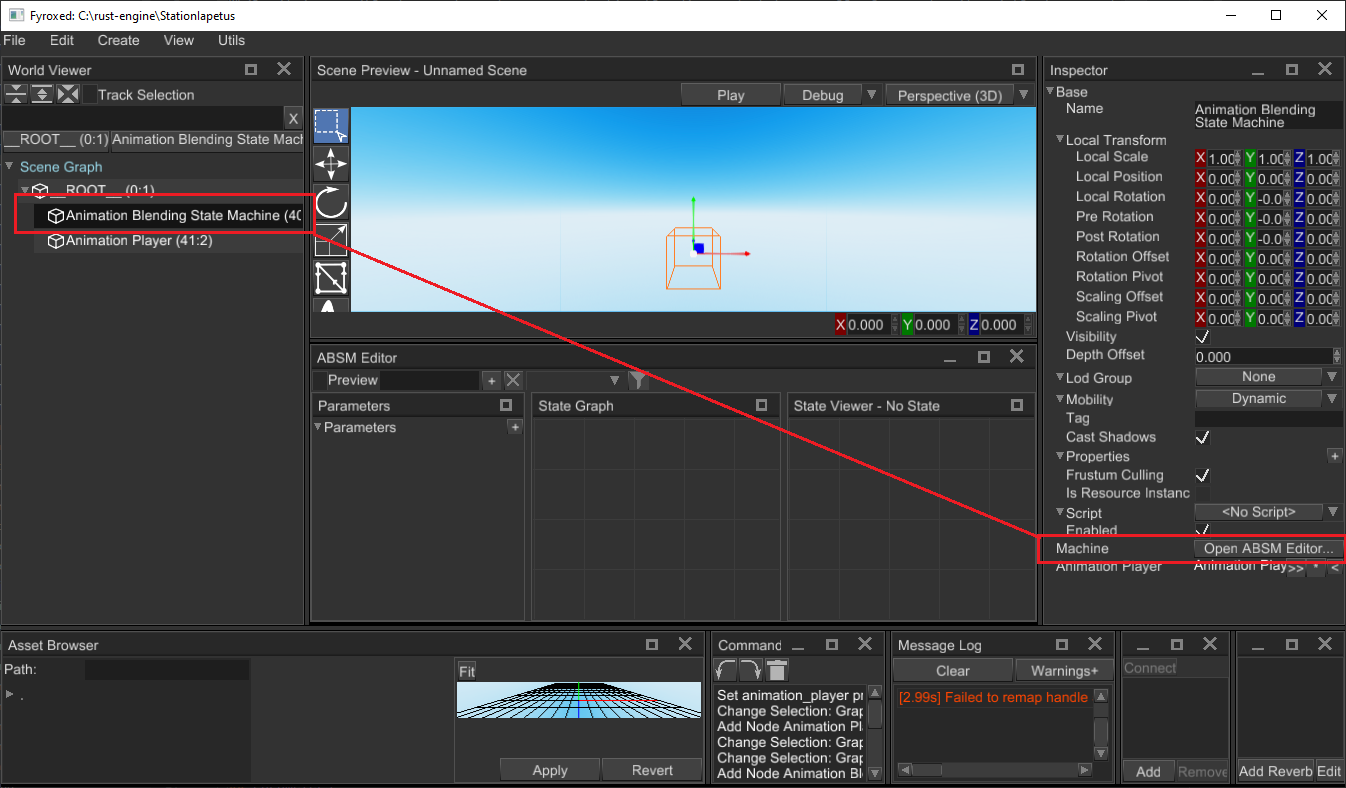

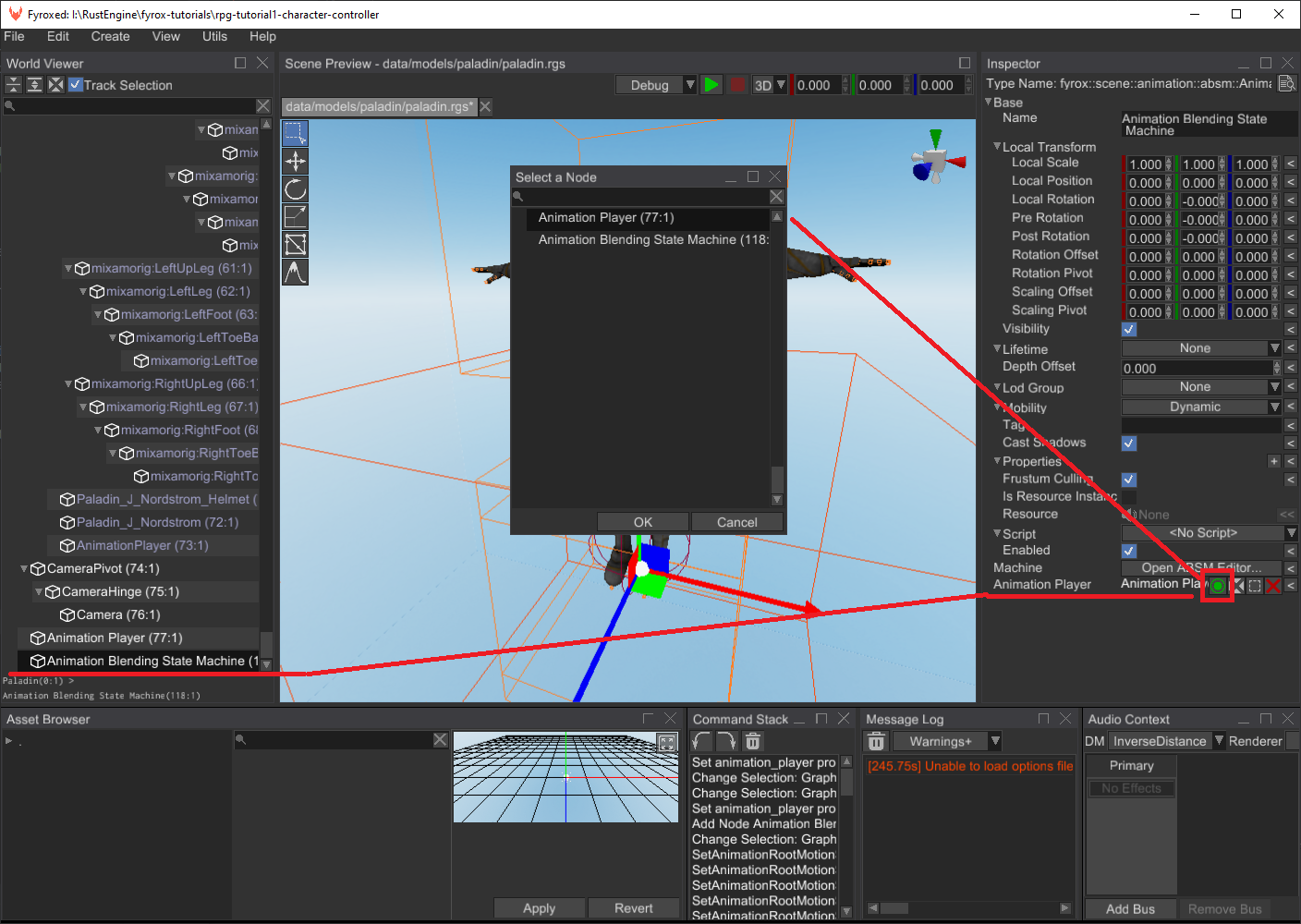

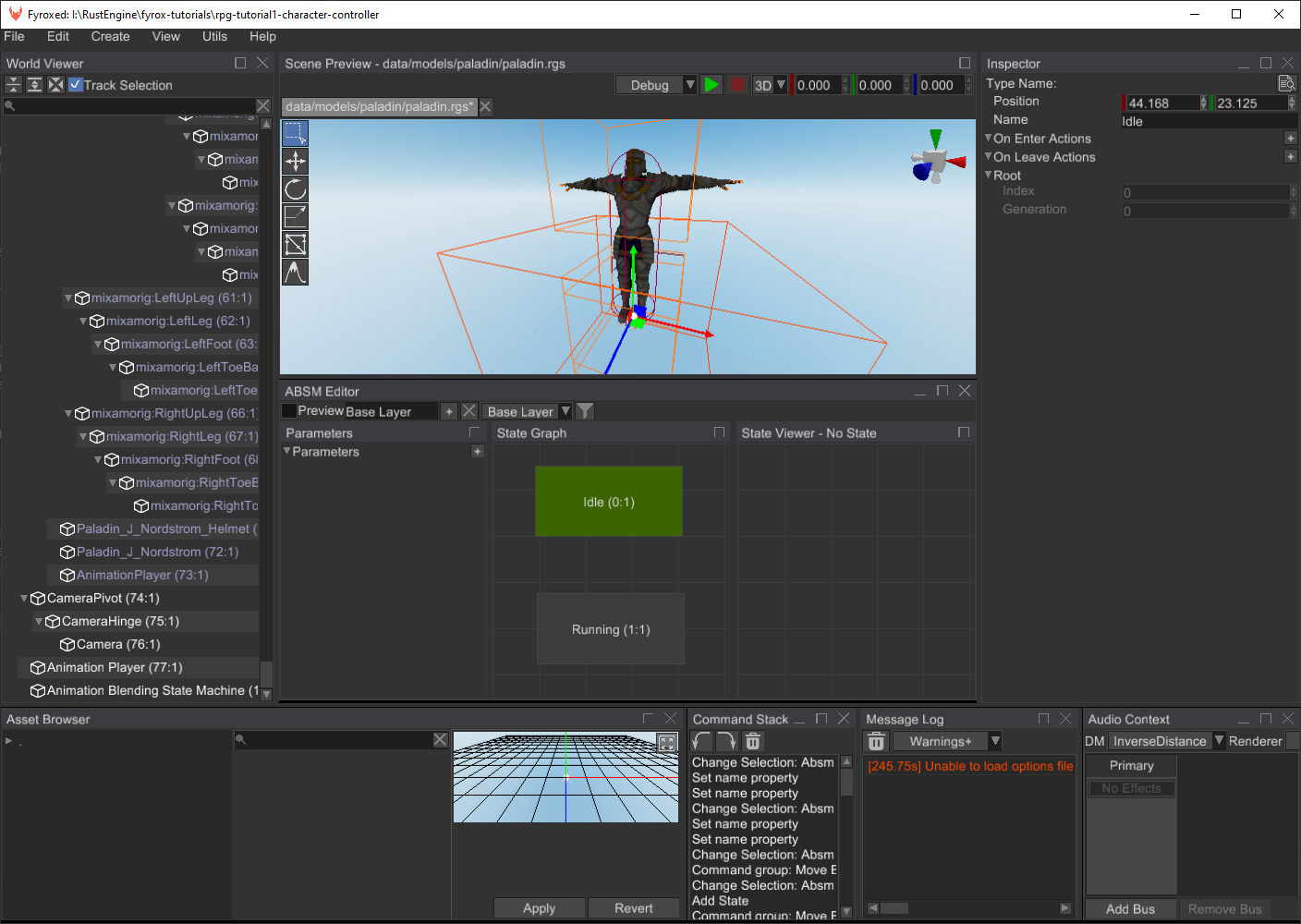

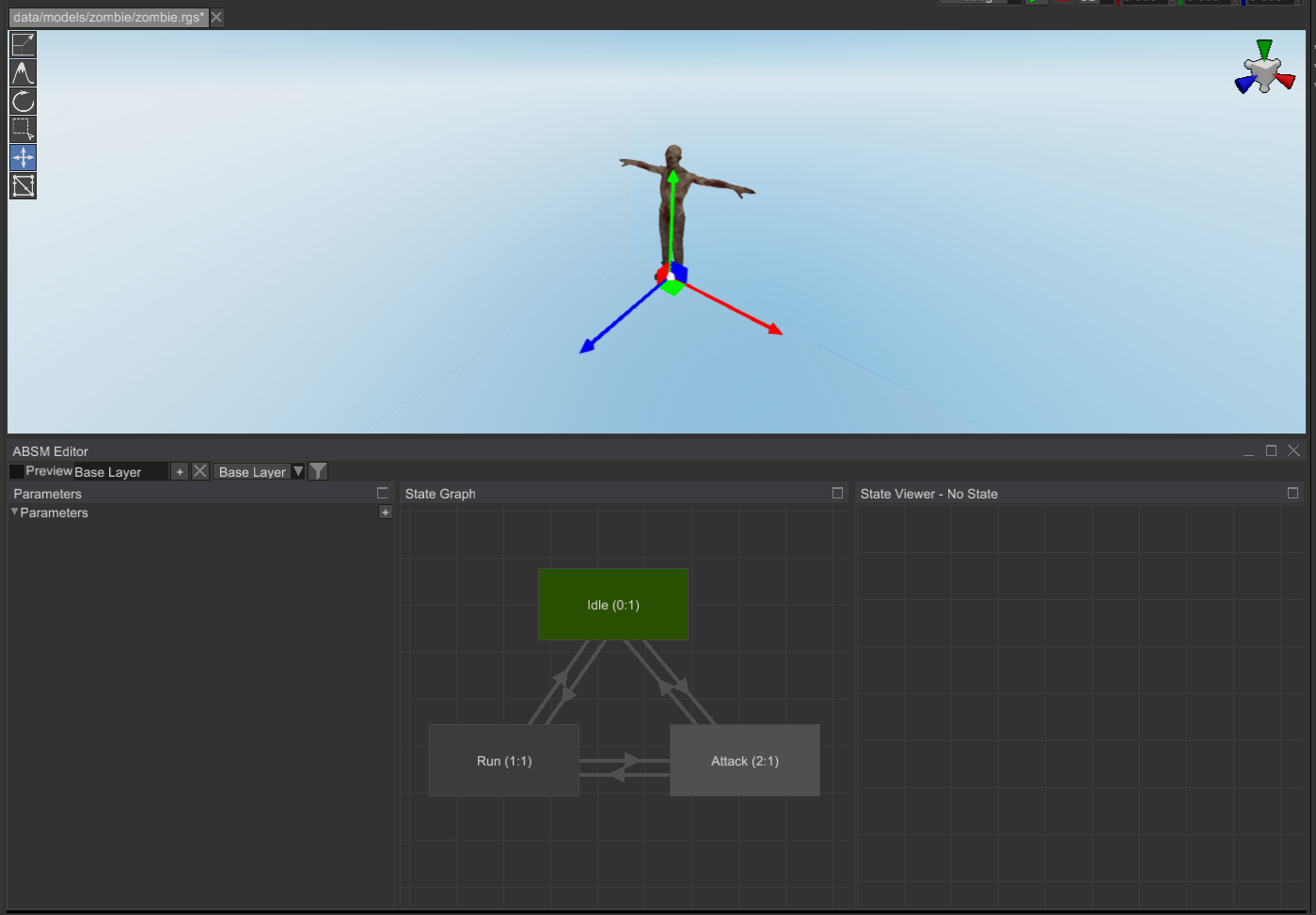

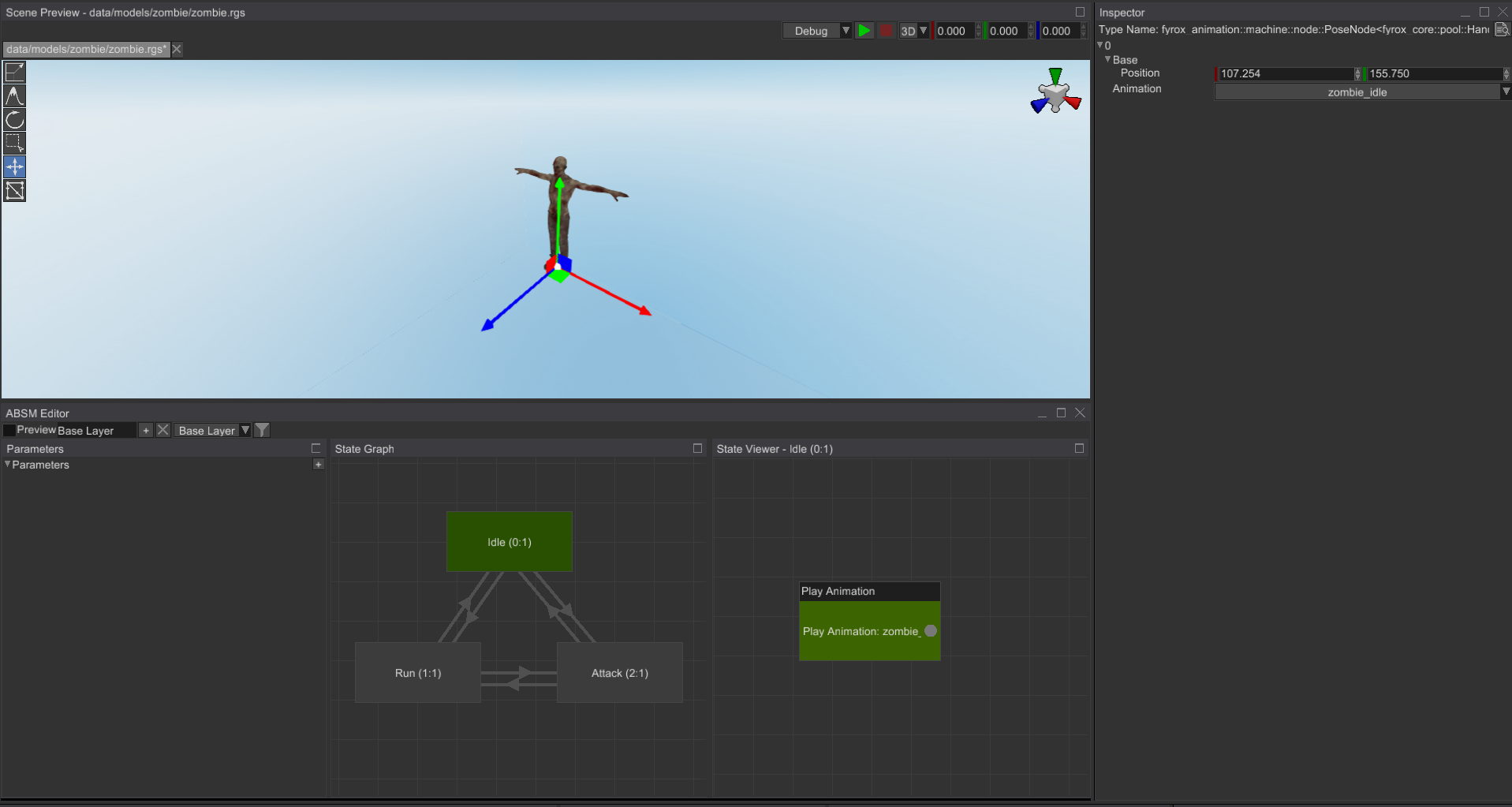

- Animation Blending State Machine - a state machine that mixes multiple animations from multiple states into one; each state is backed by one or more animation playing or blending nodes. See its respective chapter for more info.

Every node can be created either in the editor (through Create on the main menu, or through Add Child after right-clicking on a game entity) or programmatically via its respective node builder (see API docs for more info). These scene nodes allow you to build almost any kind of game. It is also possible to create your own types of nodes, but that is an advanced topic, which is covered in a future chapter.

Local and Global Coordinates

A graph describes your scene in a very natural way, allowing you to think in terms of relative and absolute coordinates when working with scene nodes.

A scene node has two kinds of transform: a local one and a global one. The local transform defines where the node is located relative to its parent, its scale, and its rotation around an arbitrary axis. The global transform is almost the same, but it also includes the whole chain of transforms of the parent nodes. Going back to the example of the character and the sword: if the character moves, and the sword with it, the global transform of the sword will reflect the change in the character's position, yet its local transform will not, since that represents the sword's position relative to the character, which didn't change.
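To make the parent chain concrete, here is a minimal sketch in plain Rust, with no Fyrox types involved. It assumes a simplified 2D transform (translation, rotation angle, uniform scale), and the `Local`, `Node`, `Graph`, and `global_position` names are hypothetical; the engine's real transforms are 3D. The global position is found by applying each local transform on the way up to the root.

```rust
/// Hypothetical 2D local transform, relative to the parent node.
#[derive(Clone, Copy, Debug)]
struct Local {
    translation: (f32, f32),
    rotation: f32, // radians, counter-clockwise
    scale: f32,    // uniform scale factor
}

struct Node {
    local: Local,
    parent: Option<usize>, // index of the parent in `Graph::nodes`, `None` for a root
}

struct Graph {
    nodes: Vec<Node>,
}

impl Local {
    /// Maps a point from this node's space into its parent's space (scale, rotate, translate).
    fn apply(&self, p: (f32, f32)) -> (f32, f32) {
        let (s, c) = self.rotation.sin_cos();
        let (x, y) = (p.0 * self.scale, p.1 * self.scale);
        (
            c * x - s * y + self.translation.0,
            s * x + c * y + self.translation.1,
        )
    }
}

impl Graph {
    /// Global position of a node: its own origin pushed through every local
    /// transform from the node itself up to the root of the hierarchy.
    fn global_position(&self, mut index: usize) -> (f32, f32) {
        let mut p = (0.0, 0.0);
        loop {
            let node = &self.nodes[index];
            p = node.local.apply(p);
            match node.parent {
                Some(parent) => index = parent,
                None => return p,
            }
        }
    }
}
```

For example, with the character at (10, 0) and the sword at a local offset of (1, 2), the sword's global position is (11, 2); moving the character to (20, 5) moves the sword to (21, 7) without touching the sword's local transform.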

This mechanism is very simple, yet powerful. Its full power shows when you're working with 3D models with skeletons: each bone in a skeleton has its parent and a set of children, which allows you to rotate, translate, or scale bones to animate your entire character.

Assets

Pretty much every game depends on various assets, such as 3D models, textures, sounds, etc. Fyrox has its own asset pipeline designed to make your life easier.

Asset Types

The engine offers a set of assets that should cover all of your needs:

- Models - are sets of objects. They can be a simple 3D model (barrels, bushes, weapons, etc.) or complex scenes with lots of objects and possibly other model instances. Fyrox supports two main formats: FBX, which can be used to import 3D models, and RGS, the format of scenes made in FyroxEd. RGS models are special, as they can be used as hierarchical prefabs.

- Textures - are images used to add graphical details to objects. The engine supports multiple texture formats, such as PNG, JPG, BMP, etc. Compressed textures in DDS format are also supported.

- Sound buffers - are data buffers for sound sources. Fyrox supports WAV and OGG formats.

- Curves - are parametric curves. They're used to create complex functions for numeric parameters. They can be made in the Curve Editor (Utils -> Curve Editor).

- HRIR Spheres - head-related impulse response collections used for the head-related transfer function in HRTF sound rendering.

- Fonts - arbitrary TTF/OTF fonts.

- Materials - materials for rendering.

- Shaders - shaders for rendering.

- It is also possible to create custom assets. See respective chapter for more info.

Asset Management

Asset management is performed from the Asset Browser window in the editor: you can select an asset, preview it, and edit its import options. Here's a screenshot of the asset browser with a texture selected:

The most interesting part here is the import options section under the previewer. It allows you to set asset-specific import options and apply them. Every asset has its own set of import options. See their respective asset page from the section above to learn what each import option is for.

Asset Instantiation

Some asset types can be instantiated in scenes; for now, you can only create direct instances from models. This is done by simply dragging the model you want to instantiate and dropping it on the Scene Preview. While dragging it, you'll also see a preview of the model.

The engine does not limit the number of asset instances; the only limits are the memory and CPU resources of your PC. Note that the engine does try to reuse data across instances as much as possible.
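As a rough illustration of what reusing data across instances can mean, the sketch below caches loaded data by path and hands out shared `Arc` pointers, so repeated requests for the same file load it only once. `AssetCache`, `AssetData`, and `request` are hypothetical std-only stand-ins, not Fyrox's `ResourceManager`, and the "loading" is faked.

```rust
use std::collections::HashMap;
use std::path::{Path, PathBuf};
use std::sync::Arc;

/// Hypothetical stand-in for loaded asset data (e.g. a model's vertices).
#[derive(Debug)]
struct AssetData {
    bytes: Vec<u8>,
}

/// A minimal cache: each path is loaded once and then shared via `Arc`.
struct AssetCache {
    loaded: HashMap<PathBuf, Arc<AssetData>>,
    loads: usize, // how many times we actually "hit the disk"
}

impl AssetCache {
    fn new() -> Self {
        Self { loaded: HashMap::new(), loads: 0 }
    }

    /// Returns shared data for `path`, loading it only on the first request.
    fn request(&mut self, path: &Path) -> Arc<AssetData> {
        if let Some(data) = self.loaded.get(path) {
            // Every later request gets another pointer to the same data.
            return Arc::clone(data);
        }
        self.loads += 1;
        // Pretend to read the file; a real loader would do I/O and parsing here.
        let bytes = path.to_string_lossy().into_owned().into_bytes();
        let data = Arc::new(AssetData { bytes });
        self.loaded.insert(path.to_path_buf(), Arc::clone(&data));
        data
    }
}
```

However many instances request the same path, they all point at a single copy of the data.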

You can also instantiate assets dynamically from your code. Here's an example of that for a Model:

```rust
use fyrox::{
    core::pool::Handle,
    asset::manager::ResourceManager,
    resource::model::{Model, ModelResourceExtension},
    scene::{node::Node, Scene},
};
use std::path::Path;

async fn instantiate_model(
    path: &Path,
    resource_manager: ResourceManager,
    scene: &mut Scene,
) -> Handle<Node> {
    // Load the model first. Alternatively, you can store the resource handle somewhere and use it for instantiation.
    let model = resource_manager.request::<Model, _>(path).await.unwrap();

    model.instantiate(scene)
}
```

This is very useful with prefabs that you may want to instantiate in a scene at runtime.

Loading Assets

Usually, there is no need to manually handle the loading of assets since you have the editor to help with that: just create a scene with all the required assets. However, there are times when you may need to instantiate some asset dynamically, for example, a bot prefab. For these cases, you can use the ResourceManager::request<T> method with the appropriate type, such as Model, Texture, SoundBuffer, etc.

Data Management

The engine uses pools to store most objects (scene nodes in a graph, animations in an animation player, sound sources in an audio context, etc.). Since you'll use them quite often, reading and understanding this chapter is recommended.

Motivation

Rust's ownership system, and its borrow checker in particular, dictate the rules of data management. In game development, you often need to reference objects from other objects. In languages like C, this is usually achieved by simply storing a raw pointer and calling it a day. That works, yet it's remarkably unsafe: you risk either forgetting to destroy an object and leaking memory, or destroying an object that is still referenced and then trying to access deallocated memory. Other languages, like C++, allow you to store shared pointers to your data, which, by keeping a reference count, prevent both problems at the cost of an overhead that is usually negligible.

Rust has similar smart pointers, though not without their limitations. There are Rc/Arc: they function like shared pointers, except they don't allow mutating their content, only reading it. If you want mutability, you use either a RefCell in a single-threaded environment or a Mutex in a multithreaded one. That is where the problems begin. For a type such as Rc<RefCell<T>>, Rust enforces its borrowing rules (any number of readers or a single writer) at runtime, and an Arc<Mutex<T>> lets only one user access the data at a time. Any attempt to borrow a RefCell mutably more than once at a time will lead to a runtime error (a panic).
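The runtime check is easy to observe with the standard library alone. In this sketch (the function names are illustrative), `try_borrow_mut` reports a conflicting mutable borrow as an `Err`, where `borrow_mut` would panic, while two simultaneous readers are accepted.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Tries to take a second mutable borrow while the first one is still alive.
fn second_mut_borrow_succeeds() -> bool {
    let shared = Rc::new(RefCell::new(0u32));
    let other_owner = Rc::clone(&shared); // two owners of the same data

    let first = shared.borrow_mut(); // the single allowed writer
    // `other_owner.borrow_mut()` would panic here;
    // `try_borrow_mut()` reports the conflict as an `Err` instead.
    let second_ok = other_owner.try_borrow_mut().is_ok();
    drop(first);
    second_ok
}

/// Several readers may coexist, as long as there is no writer.
fn two_readers_succeed() -> bool {
    let shared = Rc::new(RefCell::new(0u32));
    let first = shared.borrow();
    let second_ok = shared.try_borrow().is_ok();
    drop(first);
    second_ok
}
```

Both checks happen while the program runs, which is exactly the overhead discussed next.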

Another problem with these shared references is that it is very easy to accidentally create cyclical references that prevent objects from ever being destroyed. While the previous problems could be lived with, the last one is especially severe in the case of games: the overhead of runtime checks. In the case of Rc<RefCell<T>>, every access to the data checks and updates a borrow counter, and in the case of Arc<Mutex<T>>, every access takes a mutex lock.
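The cyclical-reference problem can be reproduced with `Rc` in a few lines: two objects that own each other keep their strong counts above zero after every outside owner is gone, so neither is ever dropped. A `Weak` pointer lets us observe the leak. `Object` and `make_cycle` are illustrative names.

```rust
use std::cell::RefCell;
use std::rc::{Rc, Weak};

/// An illustrative object that may own another one.
struct Object {
    other: RefCell<Option<Rc<Object>>>,
}

/// Builds two objects that own each other and returns a weak pointer to one of them.
fn make_cycle() -> Weak<Object> {
    let a = Rc::new(Object { other: RefCell::new(None) });
    let b = Rc::new(Object { other: RefCell::new(Some(Rc::clone(&a))) }); // b -> a
    *a.other.borrow_mut() = Some(Rc::clone(&b)); // a -> b, closing the cycle
    // The locals `a` and `b` are dropped when this function returns,
    // but each object is still owned by the other one.
    Rc::downgrade(&a)
}
```

After `make_cycle` returns, the weak pointer still reports one strong owner, and the object can still be upgraded: it has leaked.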

The solution to these problems is far from ideal and certainly has its own downsides. Instead of scattering objects across memory and then having to manage the lifetime of each of them through reference counting, we can store all of the objects in a single, contiguous memory block and then use indices to access each object. Such a structure is called a pool.

Technical Details

A pool is an efficient method of data management. A pool is a vector with entries that can be either vacant or occupied. Each entry, regardless of its status, also stores a number called a generation number. This is used to understand whether an entry has changed over time or not. When an entry is reused, its generation number is increased, rendering all previously created handles leading to the entry invalid. This is a simple and efficient algorithm for tracking the lifetime of objects.

To access the data in the entries, the engine uses the previously mentioned handles. A handle is a pair of the index of an entry and a generation number. When you put an object in the pool, you get back a handle that stores the index of the entry and its current generation number. The handle remains valid until you "free" the object, which makes the entry vacant again.
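A minimal sketch of this entry/generation scheme in plain Rust follows. It is not Fyrox's `Pool`: the `Pool`, `Entry`, and `Handle` types here are simplified stand-ins that assume a vacant entry is marked by `None` and that generations start at 1. Freeing an entry and then reusing it bumps the generation, so the old handle stops resolving.

```rust
/// A handle: entry index + the generation it was issued for.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct Handle {
    index: u32,
    generation: u32,
}

struct Entry<T> {
    generation: u32,
    payload: Option<T>, // `None` means the entry is vacant
}

/// A minimal generational pool (a sketch, not Fyrox's implementation).
struct Pool<T> {
    entries: Vec<Entry<T>>,
    vacant: Vec<u32>, // indices of vacant entries available for reuse
}

impl<T> Pool<T> {
    fn new() -> Self {
        Self { entries: Vec::new(), vacant: Vec::new() }
    }

    fn spawn(&mut self, value: T) -> Handle {
        if let Some(index) = self.vacant.pop() {
            // Reuse a vacant entry and bump its generation, invalidating old handles.
            let entry = &mut self.entries[index as usize];
            entry.generation += 1;
            entry.payload = Some(value);
            Handle { index, generation: entry.generation }
        } else {
            let index = self.entries.len() as u32;
            self.entries.push(Entry { generation: 1, payload: Some(value) });
            Handle { index, generation: 1 }
        }
    }

    /// Returns the object only if the handle's generation matches the entry's.
    fn try_borrow(&self, handle: Handle) -> Option<&T> {
        let entry = self.entries.get(handle.index as usize)?;
        if entry.generation == handle.generation {
            entry.payload.as_ref()
        } else {
            None
        }
    }

    /// Moves the object out and marks the entry as vacant.
    fn free(&mut self, handle: Handle) -> Option<T> {
        let entry = self.entries.get_mut(handle.index as usize)?;
        if entry.generation != handle.generation {
            return None;
        }
        let value = entry.payload.take()?;
        self.vacant.push(handle.index);
        Some(value)
    }
}
```

Freeing "sword" and spawning "shield" reuses the same entry, yet the sword's old handle returns `None` instead of silently pointing at the shield.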

Advantages

- Since a pool is a contiguous memory block, it is far more CPU cache-friendly. This reduces the occurrences of CPU cache misses, which makes accesses to data blazingly fast.

- Almost every entity in Fyrox lives in its own pool, which makes it easy to create data structures like graphs, where nodes refer to other nodes. In this case, nodes simply need to store a handle to refer to other nodes.

- Simple lifetime management. There is no way to leak memory since cross-references can only be done via handles.

- Fast random access with a constant complexity.

- Handles are the same size as a pointer on a 64-bit architecture, just 8 bytes.
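The 8-byte figure follows from the layout, assuming a handle stores a 32-bit index and a 32-bit generation. The `Handle<T>` here is a hypothetical mirror, not Fyrox's definition; it also shows that a type parameter costs nothing at runtime, because `PhantomData` is zero-sized.

```rust
use std::marker::PhantomData;
use std::mem::size_of;

/// Hypothetical typed handle: index + generation, plus a zero-sized type marker.
struct Handle<T> {
    index: u32,
    generation: u32,
    marker: PhantomData<fn() -> T>,
}

/// The size does not depend on how big the pooled type is.
fn handle_size<T>() -> usize {
    size_of::<Handle<T>>()
}
```

Whether the handle points at a `String` or at a 512-byte array, it stays 8 bytes.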

Disadvantages

- Pools can contain lots of gaps between currently used memory, which may lead to less efficient memory usage.

- Handles are sort of weak references, but worse. Since they do not own any data nor even point to their data, you need a reference to its pool instance in order to borrow the data a handle leads to.

- Handles introduce a level of indirection that can hurt performance in places with high loads that require random access, though this is not too significant as random access is already somewhat slow because of potential CPU cache misses.

Usage

You'll use Handle a lot while working with Fyrox. So where are the main usages of pools and handles? The largest is in a scene graph. This stores all the nodes in a pool and gives handles to each node. Each scene node stores a handle to its parent node and a set of handles to its children nodes. A scene graph automatically ensures that such handles are valid. In scripts, you can also store handles to scene nodes and assign them in the editor.

Animation is another place that stores handles to animated scene nodes. Animation Blending State Machine stores its own state graph using a pool; it also takes handles to animations from an animation player in a scene.

And the list could go on for a long time. This is why you need to understand the basic concepts of data management, so that you can use Fyrox efficiently and fearlessly.

Borrowing

Once an object is placed in a pool, you have to use its respective handle to get a reference to it. This can be done with either pool.borrow(handle) or pool.borrow_mut(handle), or by using the Index trait: pool[handle]. Note that these methods panic when the given handle is invalid. If you want to be safe, use the try_borrow(handle) or try_borrow_mut(handle) methods.

```rust
use fyrox::core::pool::Pool;

fn main() {
    let mut pool = Pool::<u32>::new();
    let handle = pool.spawn(1);

    let obj = pool.borrow_mut(handle);
    *obj = 11;

    let obj = pool.borrow(handle);
    assert_eq!(*obj, 11);
}
```

Freeing

You can extract an object from a pool by calling pool.free(handle). This will give you the object back and make all current handles to it invalid.

```rust
use fyrox::core::pool::Pool;

fn main() {
    let mut pool = Pool::<u32>::new();
    let handle = pool.spawn(1);

    pool.free(handle);

    let obj = pool.try_borrow(handle);
    assert_eq!(obj, None);
}
```

Take and Reserve

Sometimes you may want to temporarily extract an object from a pool, do something with it, and then put it back, yet not want to break every handle to the object in the process. There are three methods for this:

- take_reserve / try_take_reserve - moves an object out of the pool but leaves the entry in an occupied state. This function returns a tuple with two values (Ticket<T>, T). The latter is your object, and the former is a wrapper over its index that allows you to return the object once you're done with it. This is called a ticket. Note that attempting to borrow a moved object will cause a panic!

- put_back - moves the object back using the given ticket. The ticket contains information about where in the pool to return the object to.

- forget_ticket - makes the pool entry vacant again. Useful in cases where you move an object out of the pool and then decide you won't return it. If this is the case, you must call this method; otherwise, the corresponding entry will remain unusable.

Reservation example:

```rust
use fyrox::core::pool::Pool;

fn main() {
    let mut pool = Pool::<u32>::new();
    let handle = pool.spawn(1);

    let (ticket, ref mut obj) = pool.take_reserve(handle);

    *obj = 123;

    // Attempting to fetch while there is an existing reservation will fail.
    let attempt_obj = pool.try_borrow(handle);
    assert_eq!(attempt_obj, None);

    // Put the object back, allowing borrowing again.
    pool.put_back(ticket, *obj);

    let obj = pool.borrow(handle);
    assert_eq!(obj, &123);
}
```

Forget example:

```rust
use fyrox::core::pool::Pool;

fn main() {
    let mut pool = Pool::<u32>::new();
    let handle = pool.spawn(1);

    let (ticket, _obj) = pool.take_reserve(handle);

    pool.forget_ticket(ticket);

    let obj = pool.try_borrow(handle);
    assert_eq!(obj, None);
}
```

Iterators

There are a few possible iterators, each one serving its own purpose:

- iter / iter_mut - creates an iterator over occupied pool entries, returning references to each object.

- pair_iter / pair_iter_mut - creates an iterator over occupied pool entries, returning tuples of a handle and a reference to each object.

```rust
use fyrox::core::pool::Pool;

fn main() {
    let mut pool = Pool::<u32>::new();
    let _handle = pool.spawn(1);

    let mut iter = pool.iter_mut();

    let next_obj = iter.next().unwrap();
    assert_eq!(next_obj, &1);

    let next_obj = iter.next();
    assert_eq!(next_obj, None);
}
```

Direct Access

You have the ability to get an object from a pool using only an index. The methods for that are at and at_mut.

Validation

To check if a handle is valid, you can use the is_valid_handle method.

Type-erased Handles

The pool module also offers type-erased handles that can be of use in some situations. Still, try to avoid using these, as they may introduce hard-to-reproduce bugs. Type safety is always good :3

A type-erased handle is called an ErasedHandle and can be created either manually or from a strongly-typed handle.

Both handle types are interchangeable; you can use the From and Into traits to convert from one to the other.
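To illustrate why erased handles are less safe, here is a sketch with hypothetical `Handle<T>` and `ErasedHandle` types that mirror the idea (they are not the engine's definitions): converting to the erased form drops the type parameter, and converting back compiles for any `T`, including the wrong one.

```rust
use std::marker::PhantomData;

/// Hypothetical strongly-typed handle, parameterized by the pooled type.
struct Handle<T> {
    index: u32,
    generation: u32,
    marker: PhantomData<fn() -> T>,
}

/// Hypothetical type-erased handle: the same data, no type parameter.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct ErasedHandle {
    index: u32,
    generation: u32,
}

impl<T> From<Handle<T>> for ErasedHandle {
    fn from(h: Handle<T>) -> Self {
        ErasedHandle { index: h.index, generation: h.generation }
    }
}

impl<T> From<ErasedHandle> for Handle<T> {
    // Nothing checks that the erased handle really came from a pool of `T`;
    // this is exactly where type erasure can introduce hard-to-find bugs.
    fn from(h: ErasedHandle) -> Self {
        Handle { index: h.index, generation: h.generation, marker: PhantomData }
    }
}
```

Thanks to the blanket `Into` implementation, `let erased: ErasedHandle = handle.into();` works in both directions, and the compiler cannot catch a handle converted back into the wrong type.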

Getting a Handle to an Object by its Reference

If you need to get a handle to an object from only having a reference to it, you can use the handle_of method.

Iterate Over and Filter Out Objects

The retain method allows you to filter your pool's content using a closure provided by you.

Borrow Checker

Rust has a famous borrow checker that has become a sort of horror story for newcomers. It is usually treated like an enemy that prevents you from writing anything useful the way you may be used to in other languages. In fact, it is a very useful part of Rust that proves the correctness of your program and does not let you do nasty things like memory corruption, data races, etc. This chapter explains how Fyrox solves the most common borrowing issues and makes game development as easy as in any other game engine.

Multiple Borrowing

When writing script logic, you often need to borrow several pieces of data at once, usually other scene nodes. In normal circumstances you can borrow each node one by one, but in other cases you can't perform an action without borrowing two or more nodes simultaneously. In this case you can use multi-borrowing:

```rust
#[derive(Clone, Debug, Reflect, Visit, Default, TypeUuidProvider, ComponentProvider)]
#[type_uuid(id = "a9fb15ad-ab56-4be6-8a06-73e73d8b1f49")]
#[visit(optional)]
struct MyScript {
    some_node: Handle<Node>,
    some_other_node: Handle<Node>,
    yet_another_node: Handle<Node>,
}

impl ScriptTrait for MyScript {
    fn on_update(&mut self, ctx: &mut ScriptContext) {
        // Begin multiple borrowing.
        let mbc = ctx.scene.graph.begin_multi_borrow();

        // Borrow immutably.
        let some_node_ref_1 = mbc.try_get(self.some_node).unwrap();

        // Then borrow other nodes mutably.
        let mut some_other_node_ref = mbc.try_get_mut(self.some_other_node).unwrap();
        let mut yet_another_node_ref = mbc.try_get_mut(self.yet_another_node).unwrap();

        // We can borrow the same node immutably any number of times, as long as it
        // isn't borrowed mutably.
        let some_node_ref_2 = mbc.try_get(self.some_node).unwrap();
    }
}
```

As you can see, you can borrow multiple nodes at once with no compilation errors. Borrowing rules in this case are enforced at runtime. They're the same as standard Rust borrowing rules:

- You can have any number of immutable references to the same object.

- You can have only one mutable reference to the same object.
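To show what "enforced at runtime" means, here is a rough std-only sketch that keeps each node in its own `RefCell`, so borrows of different nodes never conflict while borrows of the same node follow the rules listed above. `MultiBorrow`, `try_get`, `try_get_mut`, and `BorrowError` are hypothetical names chosen to echo the calls in the example; this is not Fyrox's implementation, which also validates handle generations.

```rust
use std::cell::{Ref, RefCell, RefMut};

/// Why a borrow failed (a simplified version of what a multi-borrow context might report).
#[derive(Debug, PartialEq)]
enum BorrowError {
    InvalidIndex(usize),
    AlreadyBorrowed(usize),
}

/// A hypothetical multi-borrow context: one `RefCell` per node,
/// so the borrowing rules are checked per node while the program runs.
struct MultiBorrow<T> {
    nodes: Vec<RefCell<T>>,
}

impl<T> MultiBorrow<T> {
    fn new(nodes: Vec<T>) -> Self {
        Self { nodes: nodes.into_iter().map(RefCell::new).collect() }
    }

    /// Shared borrow; fails if the node is currently borrowed mutably.
    fn try_get(&self, index: usize) -> Result<Ref<'_, T>, BorrowError> {
        let cell = self.nodes.get(index).ok_or(BorrowError::InvalidIndex(index))?;
        cell.try_borrow().map_err(|_| BorrowError::AlreadyBorrowed(index))
    }

    /// Exclusive borrow; fails if the node is borrowed in any way.
    fn try_get_mut(&self, index: usize) -> Result<RefMut<'_, T>, BorrowError> {
        let cell = self.nodes.get(index).ok_or(BorrowError::InvalidIndex(index))?;
        cell.try_borrow_mut().map_err(|_| BorrowError::AlreadyBorrowed(index))
    }
}
```

Note that both methods take `&self`: the compiler sees only shared borrows of the context, and the per-node rules are checked when each call runs.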

The multi-borrow context provides detailed error messages for cases when borrowing has failed. For example, it will tell you if you're trying to mutably borrow an object that was already borrowed as immutable (and vice versa). It also validates handles and will tell you what's wrong with a handle: either its index or its generation could be invalid. The latter means that the object at the handle was changed and the handle is no longer valid.

The previous example looks rather synthetic and does not show the real-world code that could lead to borrowing issues. Let's fix this. Imagine that you're making a shooter, and you have bots that can follow and attack targets. Then the code could look like this:

```rust
#[derive(Clone, Debug, Reflect, Visit, Default, TypeUuidProvider, ComponentProvider)]
#[type_uuid(id = "a9fb15ad-ab56-4be6-8a06-73e73d8b1f49")]
#[visit(optional)]
struct Bot {
    target: Handle<Node>,
    absm: Handle<Node>,
}

impl ScriptTrait for Bot {
    fn on_update(&mut self, ctx: &mut ScriptContext) {
        // Begin multiple borrowing.
        let mbc = ctx.scene.graph.begin_multi_borrow();

        // At first, borrow the node this script is running on.
        let mut this = mbc.get_mut(ctx.handle);

        // Try to borrow the target. It can fail in two cases:
        // 1) `self.target` is an invalid or unassigned handle.
        // 2) The node is already borrowed; this could only happen if the bot has itself as the target.
        match mbc.try_get_mut(self.target) {
            Ok(target) => {
                // Check if we are close enough to the target.
                let close_enough = target
                    .global_position()
                    .metric_distance(&this.global_position())
                    < 1.0;

                // Switch animations accordingly.
                let mut absm = mbc
                    .try_get_component_of_type_mut::<AnimationBlendingStateMachine>(self.absm)
                    .unwrap();
                absm.machine_mut()
                    .get_value_mut_silent()
                    .set_parameter("Attack", Parameter::Rule(close_enough));
            }
            Err(err) => {
                // Optionally, you can print the actual reason why borrowing wasn't successful.
                Log::err(err.to_string())
            }
        }
    }
}
```

As you can see, for this code to compile we need to borrow at least two nodes simultaneously: the node with the Bot script and the target node. This is because we're calculating the distance between the two nodes to switch animations accordingly (attack if the target is close enough).

Like pretty much any approach, this one is not ideal and comes with its own pros and cons. The pros are quite simple:

- No compilation errors - sometimes Rust is too strict about borrowing rules, and valid code does not pass its checks.

- Better ergonomics - no need to juggle temporary variables here and there to perform an action.

The cons are:

- Multi-borrowing is slightly slower (~1-4% depending on your use case) - this happens because the multi-borrowing context checks borrowing rules at runtime.

Message Passing

Sometimes code becomes so convoluted that it is simply hard to maintain and to understand what it is doing. This happens when code coupling gets to a certain point, which requires a very broad context for the code to be executed. For example, if bots in your game have weapons, it is tempting to just borrow the weapon and call something like weapon.shoot(..). When your weapon is simple, this might work fine; however, when your game gets bigger and weapons get new features, a simple weapon.shoot(..) may not be enough, because the shoot method gets more and more arguments or for some other reason. This is quite a common case, and in general, when your code becomes tightly coupled, it becomes hard to maintain and, more importantly, it can easily result in compilation errors that come from the borrow checker. To illustrate this, let's look at this code:

```rust
#[derive(Clone, Debug, Reflect, Visit, Default, TypeUuidProvider, ComponentProvider)]
#[type_uuid(id = "a9fb15ad-ab56-4be6-8a06-73e73d8b1f49")]
#[visit(optional)]
struct Weapon {
    bullets: u32,
}

impl Weapon {
    fn shoot(&mut self, self_handle: Handle<Node>, graph: &mut Graph) {
        if self.bullets > 0 {
            let this = &graph[self_handle];
            let position = this.global_position();
            let direction = this.look_vector().scale(10.0);

            // Cast a ray in front of the weapon.
            let mut results = Vec::new();
            graph.physics.cast_ray(
                RayCastOptions {
                    ray_origin: position.into(),
                    ray_direction: direction,
                    max_len: 10.0,
                    groups: Default::default(),
                    sort_results: false,
                },
                &mut results,
            );

            // Try to damage all the bots that were hit by the ray.
            for result in results {
                for node in graph.linear_iter_mut() {
                    if let Some(bot) = node.try_get_script_mut::<Bot>() {
                        if bot.collider == result.collider {
                            bot.health -= 10.0;
                        }
                    }
                }
            }

            self.bullets -= 1;
        }
    }
}

impl ScriptTrait for Weapon {}

#[derive(Clone, Debug, Reflect, Visit, Default, TypeUuidProvider, ComponentProvider)]
#[type_uuid(id = "a9fb15ad-ab56-4be6-8a06-73e73d8b1f49")]
#[visit(optional)]
struct Bot {
    weapon: Handle<Node>,
    collider: Handle<Node>,
    health: f32,
}

impl ScriptTrait for Bot {
    fn on_update(&mut self, ctx: &mut ScriptContext) {
        // Try to shoot the weapon.
        if let Some(weapon) = ctx
            .scene
            .graph
            .try_get_script_component_of_mut::<Weapon>(self.weapon)
        {
            // !!! This will not compile, because it requires mutable access to the weapon and to
            // the script context at the same time. This is impossible to do safely, because we've
            // just borrowed the weapon from the context.

            // weapon.shoot(ctx.handle, &mut ctx.scene.graph);
        }
    }
}
```

This is probably one of the typical implementations of shooting in games: you cast a ray from the weapon, and if it hits a bot, you apply some damage to it. In this case, bots can also shoot, and this is where the borrow checker again gets in our way. If you try to uncomment the // weapon.shoot(ctx.handle, &mut ctx.scene.graph); line, you'll get a compilation error that tells you that ctx.scene.graph is already borrowed. It seems that we're stuck, and we need to somehow fix this issue.

We can't use multi-borrowing in this case, because it still enforces borrowing rules, and instead of a compilation error, you'll get a runtime error.

To solve this, you can use the well-known message passing mechanism. The core idea is to not call methods immediately, but to collect all the data needed for the call and send it to an object, so that the object can do the call later. Here's how it will look:

```rust
#[derive(Clone, Debug, Reflect, Visit, Default, TypeUuidProvider, ComponentProvider)]
#[type_uuid(id = "a9fb15ad-ab56-4be6-8a06-73e73d8b1f49")]
#[visit(optional)]
struct Weapon {
    bullets: u32,
}

impl Weapon {
    fn shoot(&mut self, self_handle: Handle<Node>, graph: &mut Graph) {
        // -- This method is the same
    }
}

pub struct ShootMessage;

impl ScriptTrait for Weapon {
    fn on_start(&mut self, ctx: &mut ScriptContext) {
        // Subscribe to shooting message.
        ctx.message_dispatcher
            .subscribe_to::<ShootMessage>(ctx.handle);
    }

    fn on_message(
        &mut self,
        message: &mut dyn ScriptMessagePayload,
        ctx: &mut ScriptMessageContext,
    ) {
        // Receive shooting messages.
        if message.downcast_ref::<ShootMessage>().is_some() {
            self.shoot(ctx.handle, &mut ctx.scene.graph);
        }
    }
}

#[derive(Clone, Debug, Reflect, Visit, Default, TypeUuidProvider, ComponentProvider)]
#[type_uuid(id = "a9fb15ad-ab56-4be6-8a06-73e73d8b1f49")]
#[visit(optional)]
struct Bot {
    weapon: Handle<Node>,
    collider: Handle<Node>,
    health: f32,
}

impl ScriptTrait for Bot {
    fn on_update(&mut self, ctx: &mut ScriptContext) {
        // Note, that we know nothing about the weapon here - just its handle and a message that it
        // can accept and process.
        ctx.message_sender.send_to_target(self.weapon, ShootMessage);
    }
}
```

The weapon now subscribes to ShootMessage and listens to it in the on_message method, and from there it can perform the actual shooting without any borrowing issues. The bot now just sends a ShootMessage instead of borrowing the weapon and trying to call shoot directly. Messages do not add a one-frame delay, as you might think: they're processed in the same frame, so there is no desynchronization of one or more frames.
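Stripped of the engine, the pattern reduces to a queue that is drained within the same frame. The sketch is hypothetical and std-only: `World`, `frame`, and plain `usize` indices stand in for the scene, the engine's update loop, and handles. Bots push `(target, message)` pairs during the update pass and never borrow a weapon; the dispatch pass then borrows each weapon on its own.

```rust
use std::collections::VecDeque;

/// Hypothetical message a bot sends to its weapon.
struct ShootMessage;

struct Weapon {
    bullets: u32,
}

impl Weapon {
    fn shoot(&mut self) {
        if self.bullets > 0 {
            self.bullets -= 1;
        }
    }
}

struct Bot {
    weapon: usize, // index of this bot's weapon in `World::weapons` (stands in for a handle)
}

struct World {
    bots: Vec<Bot>,
    weapons: Vec<Weapon>,
    queue: VecDeque<(usize, ShootMessage)>, // (target weapon, message)
}

impl World {
    fn frame(&mut self) {
        // 1) Update pass: bots only record what they want; no weapon is borrowed here.
        for bot in &self.bots {
            self.queue.push_back((bot.weapon, ShootMessage));
        }
        // 2) Dispatch pass, in the same frame: each weapon is borrowed on its own.
        while let Some((target, _message)) = self.queue.pop_front() {
            if let Some(weapon) = self.weapons.get_mut(target) {
                weapon.shoot();
            }
        }
    }
}
```

With three bots sharing two weapons (one bot on the first, two on the second), a single `frame` call takes the first weapon from 5 to 4 bullets and the second from 5 to 3, and the queue ends the frame empty.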

This approach with messages has its own pros and cons. The pros are quite significant:

- Decoupling - coupling is now very loose and done mostly on message side.

- Easy to refactor - since the coupling is loose, you can refactor the internals with a low chance of breaking existing code, which could otherwise happen because of intertwined and convoluted code.

- No borrowing issues - the method calls are done in different places, and there are no lifetime collisions.

- Easy to write unit and integration tests - this comes from loose coupling.

The cons are the following:

- Message passing is slightly slower than direct method calls (~1-7% depending on your use case) - you should keep message granularity at a reasonable level. Do not use message passing for tiny changes, it will most likely make your game slower.

Scripting

A game based on Fyrox is a plugin to the engine and the editor. Plugin defines global application logic and can provide a set of scripts that can be used to assign custom logic to scene nodes. Every script can be attached to only one plugin.

Fyrox uses scripts to create custom game logic. Scripts can be written only in Rust, which ensures that your game will be crash-free, fast and easy to refactor.

The next chapters cover all the parts and will help you learn how to use plugins and scripts correctly.

Plugins

A game based on Fyrox is a plugin to the engine and the editor. Plugin defines global application logic and provides a set of scripts that can be used to assign custom logic to scene nodes.

Plugin is an "entry point" of your game: it has a fixed set of methods that can be used for initialization, update, OS event handling, etc. Every plugin is statically linked to the engine (and the editor), and there is no support for hot-reloading due to the lack of a stable ABI in Rust. However, it is possible to avoid recompiling the editor every time: if you don't change the data layout of your structures, the editor will be able to compile your game and run it with the currently loaded scene, thus reducing the number of iterations. You can freely modify application logic, and this won't affect the running editor.

The main purpose of plugins is to hold and operate on global application data that can be used in scripts, and to provide a set of scripts to the engine. Plugins also have much wider access to engine internals than scripts. For example, it is possible to change scenes, add render passes, change resolution, etc., which is not possible from scripts.

Structure

Plugin structure is defined by the Plugin trait. A typical implementation can be generated by the fyrox-template tool, and it looks something like this:

```rust
use fyrox::{
    core::{
        futures::executor::block_on,
        pool::Handle,
    },
    event::Event,
    event_loop::ControlFlow,
    gui::message::UiMessage,
    plugin::{Plugin, PluginConstructor, PluginContext, PluginRegistrationContext},
    scene::{Scene, SceneLoader},
};
use std::path::Path;

pub struct GameConstructor;

impl PluginConstructor for GameConstructor {
    fn register(&self, _context: PluginRegistrationContext) {
        // Register your scripts here.
    }

    fn create_instance(
        &self,
        scene_path: Option<&str>,
        context: PluginContext,
    ) -> Box<dyn Plugin> {
        Box::new(Game::new(scene_path, context))
    }
}

pub struct Game {
    scene: Handle<Scene>,
}

impl Game {
    pub fn new(scene_path: Option<&str>, context: PluginContext) -> Self {
        context
            .async_scene_loader
            .request(scene_path.unwrap_or("data/scene.rgs"));

        Self { scene: Handle::NONE }
    }
}

impl Plugin for Game {
    fn on_deinit(&mut self, _context: PluginContext) {
        // Do a cleanup here.
    }

    fn update(&mut self, _context: &mut PluginContext, _control_flow: &mut ControlFlow) {
        // Add your global update code here.
    }

    fn on_os_event(
        &mut self,
        _event: &Event<()>,
        _context: PluginContext,
        _control_flow: &mut ControlFlow,
    ) {
        // Do something on OS event here.
    }

    fn on_ui_message(
        &mut self,
        _context: &mut PluginContext,
        _message: &UiMessage,
        _control_flow: &mut ControlFlow,
    ) {
        // Handle UI events here.
    }

    fn on_scene_loaded(&mut self, _path: &Path, scene: Handle<Scene>, context: &mut PluginContext) {
        if self.scene.is_some() {
            context.scenes.remove(self.scene);
        }

        self.scene = scene;
    }
}
```

There are two major parts: GameConstructor and Game itself. GameConstructor implements PluginConstructor, and it is responsible for script registration (fn register) and for creating the actual game instance (fn create_instance).

- register - called once on start, allowing you to register your scripts. Important: You must register all your scripts here, otherwise the engine (and the editor) will know nothing about them. Also, you should register loaders for your custom resources here. See the Custom Resource chapter for more info.

- create_instance - called once, allowing you to create the actual game instance. It is guaranteed to be called once, but where it is called is implementation-defined. For example, the editor will not call this method, as it does not create any game instance. The method has a scene_path parameter; in short, it is a path to a scene that is currently opened in the editor (it will be None if either there's no opened scene or your game was started outside the editor). It is described in the Editor and Plugins section down below.
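To see why unregistered scripts are invisible, consider a hypothetical registry that maps type names to constructor functions: anything that was never registered simply cannot be created by name. `Script`, `ScriptRegistry`, and `construct` are illustrative names for this sketch, not Fyrox's API.

```rust
use std::collections::HashMap;

/// Hypothetical minimal script interface.
trait Script {
    fn name(&self) -> &'static str;
}

#[derive(Default)]
struct Bot;

impl Script for Bot {
    fn name(&self) -> &'static str {
        "Bot"
    }
}

/// Generic constructor, stored as a plain function pointer in the registry.
fn construct<S: Script + Default + 'static>() -> Box<dyn Script> {
    Box::new(S::default())
}

/// Maps type names to constructors; only registered names can be instantiated.
#[derive(Default)]
struct ScriptRegistry {
    constructors: HashMap<&'static str, fn() -> Box<dyn Script>>,
}

impl ScriptRegistry {
    fn register<S: Script + Default + 'static>(&mut self, name: &'static str) {
        self.constructors.insert(name, construct::<S>);
    }

    fn create(&self, name: &str) -> Option<Box<dyn Script>> {
        self.constructors.get(name).map(|ctor| ctor())
    }
}
```

After `registry.register::<Bot>("Bot")`, creating "Bot" by name succeeds, while a name that was never registered yields `None`.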

The game structure (struct Game) implements the Plugin trait, whose methods execute the actual game logic:

- on_deinit - called when the game is about to shut down. It can be used for any cleanup, for example logging that the game has closed.
- update - called each frame at a stable rate (usually 60 Hz, but it can be configured in the Executor) after the plugin is created and fully initialized. It is the main place for object-independent game logic (such as user interface handling, global application state management, etc.); any other logic should be added via scripts.
- on_os_event - called when the main application window receives an event from the operating system. It can be any event, such as keyboard, mouse, gamepad or other events. As with the update method, you should put only object-independent logic here. Scripts can catch OS events too.
- on_ui_message - called when there is a message from the user interface. It should be used to react to user actions (pressed buttons, etc.).
- on_graphics_context_initialized - called when a graphics context was successfully initialized. This method can be used to access the renderer (to change its quality settings, for instance). You can also access the main window instance and change its properties (such as title, size, resolution, etc.).
- on_graphics_context_destroyed - called when the current graphics context was destroyed. This can happen on a small number of platforms, such as Android. Such platforms usually have some sort of suspension mode, in which you are not allowed to render graphics, have a "window", etc.
- before_rendering - called when the engine is about to render a new frame. This method is useful for offscreen rendering (for example, of the user interface).
- on_scene_begin_loading - called when the engine starts to load a game scene. This method can be used to show a progress bar or some sort of loading screen, etc.
- on_scene_loaded - called when the engine has successfully loaded a game scene. This method can be used to add custom logic that does something with the newly loaded scene.
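One way to picture these callbacks is a driver that invokes them on a plugin object. The sketch below uses a hypothetical, reduced PluginHooks trait. Its hooks have default no-op bodies, so a game overrides only what it needs. The driver follows an assumed call order: graphics context ready, then some updates, then deinit. The real executor's exact ordering and signatures are not specified here.

```rust
// Hypothetical reduced plugin trait: every hook has a default no-op body,
// so a game only overrides the hooks it needs.
trait PluginHooks {
    fn on_graphics_context_initialized(&mut self) {}
    fn update(&mut self, _dt: f32) {}
    fn on_deinit(&mut self) {}
}

// Records which hooks were called, to make the assumed order visible.
#[derive(Default)]
struct Game {
    log: Vec<String>,
}

impl PluginHooks for Game {
    fn on_graphics_context_initialized(&mut self) {
        // A real game could adjust renderer quality or the window title here.
        self.log.push("graphics ready".to_string());
    }

    fn update(&mut self, dt: f32) {
        self.log.push(format!("update {dt}"));
    }

    fn on_deinit(&mut self) {
        self.log.push("deinit".to_string());
    }
}

// Hypothetical driver: initialize graphics, tick `frames` times, then shut down.
fn run(plugin: &mut dyn PluginHooks, frames: usize) {
    plugin.on_graphics_context_initialized();
    for _ in 0..frames {
        plugin.update(1.0 / 60.0);
    }
    plugin.on_deinit();
}

fn main() {
    let mut game = Game::default();
    run(&mut game, 2);
    println!("{:?}", game.log);
}
```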

Control Flow

Some plugin methods provide access to a ControlFlow variable. Its main use in a plugin is to stop the game when some condition is met: set it to ControlFlow::Exit and the game will be closed. It also has other variants, but they have no particular use in plugins.

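```rust
fn update(&mut self, _context: &mut PluginContext, control_flow: &mut ControlFlow) {
    // `some_exit_condition` stands for any condition of your game.
    if self.some_exit_condition {
        // `control_flow` is a mutable reference, so dereference it to assign.
        *control_flow = ControlFlow::Exit;
    }
}
```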
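The following std-only sketch shows why assigning through the reference is enough to stop the game. A loop keeps calling update until the flag it owns becomes Exit. Flow is a hypothetical two-variant stand-in for ControlFlow. The frame counter exit condition is also made up for illustration.

```rust
// Hypothetical stand-in for ControlFlow, reduced to two variants.
#[derive(Debug, PartialEq, Clone, Copy)]
enum Flow {
    Poll,
    Exit,
}

struct Game {
    // Made-up exit condition: quit after this many frames.
    frames_left: u32,
}

impl Game {
    // Same shape as the plugin's `update`: assign through the `&mut` reference.
    fn update(&mut self, control_flow: &mut Flow) {
        self.frames_left = self.frames_left.saturating_sub(1);
        if self.frames_left == 0 {
            *control_flow = Flow::Exit;
        }
    }
}

// Keeps calling `update` until the game asks to exit; returns the number of frames run.
fn run(game: &mut Game) -> u32 {
    let mut flow = Flow::Poll;
    let mut frames = 0;
    while flow != Flow::Exit {
        game.update(&mut flow);
        frames += 1;
    }
    frames
}

fn main() {
    let mut game = Game { frames_left: 3 };
    println!("ran {} frames", run(&mut game));
}
```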

Plugin Context

The vast majority of methods accept a PluginContext, which provides almost full access to engine entities: the renderer, the scene container, the resource manager, the user interface and the main application window. Typical content of the context looks like this:

```rust
extern crate fyrox;
use fyrox::{
    engine::{
        SerializationContext, GraphicsContext, PerformanceStatistics, AsyncSceneLoader,
        ScriptProcessor,
    },
    asset::manager::ResourceManager,
    gui::UserInterface,
    scene::SceneContainer,
    window::Window,
};
use std::sync::Arc;

pub struct PluginContext<'a, 'b> {
    pub scenes: &'a mut SceneContainer,
    pub resource_manager: &'a ResourceManager,
    pub user_interface: &'a mut UserInterface,
    pub graphics_context: &'a mut GraphicsContext,
    pub dt: f32,
    pub lag: &'b mut f32,
    pub serialization_context: &'a Arc<SerializationContext>,
    pub performance_statistics: &'a PerformanceStatistics,
    pub elapsed_time: f32,
    pub script_processor: &'a ScriptProcessor,
    pub async_scene_loader: &'a mut AsyncSceneLoader,
}
```


- scenes - a scene container that can be used to manage game scenes: add, remove, borrow. An example of scene loading is given in the Game::new() method of the previous code snippet.
- resource_manager - used to load external resources (scenes, models, textures, animations, sound buffers, etc.) from different sources (disk, network storage on WebAssembly, etc.).
- user_interface - use it to create the user interface for your game. The interface is scene-independent and will remain the same even if multiple scenes are created.
- graphics_context - a reference to the graphics context, which contains a reference to the window and the current renderer. It can be GraphicsContext::Uninitialized if your application is suspended (possible only on Android).
- dt - the time passed since the last frame. The actual value is implementation-defined, but in the current implementation it is equal to 1/60 of a second and does not change even if the frame rate changes (the engine stabilizes the update rate for the logic).
- lag - a reference to the time accumulator, which holds the remaining amount of time that should be used to update a plugin. The caller splits lag into multiple sub-steps using dt and thus stabilizes the update rate. The main use of this variable is to reset lag when you're doing some heavy calculations in the game loop (e.g. loading a new level), so the engine won't try to "catch up" with all the time that was spent on those calculations.
- serialization_context - can be used to register scripts and custom scene node constructors at runtime.
- performance_statistics - performance statistics from the last frame. To get rendering performance statistics, use the Renderer::get_statistics method of the renderer instance from the current graphics context.
- elapsed_time - the amount of time (in seconds) that passed since the creation of the engine. Keep in mind that this value is not guaranteed to match real time: a user can change the delta time with which the engine "ticks", and this delta time affects elapsed time.
- script_processor - a reference to the current script processor instance, which can be used to access the list of scenes that support scripts.
- async_scene_loader - a reference to the current asynchronous scene loader instance. It can be used to request a new scene to be loaded.
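The interplay of dt, lag and elapsed_time is the classic fixed-timestep accumulator. Here is a minimal std-only sketch of that technique. It assumes a hypothetical Clock struct; this is not the engine's code. Real frame time is added to lag and consumed in dt-sized steps, and elapsed_time advances by dt per step, which is why it tracks simulated rather than wall-clock time. The update callback receives lag so it can reset it after heavy work.

```rust
// Hypothetical model of a fixed-timestep loop: `lag` accumulates real frame time
// and is consumed in steps of `dt`; `elapsed_time` advances by `dt` per step,
// so it follows simulated time rather than wall-clock time.
struct Clock {
    dt: f32,
    lag: f32,
    elapsed_time: f32,
    updates: u32,
}

impl Clock {
    fn new(dt: f32) -> Self {
        Self { dt, lag: 0.0, elapsed_time: 0.0, updates: 0 }
    }

    // Called once per rendered frame with the real time that frame took.
    // `update` gets `dt` and mutable access to `lag`, like a plugin's context.
    fn advance(&mut self, frame_time: f32, mut update: impl FnMut(f32, &mut f32)) {
        self.lag += frame_time;
        while self.lag >= self.dt {
            self.lag -= self.dt;
            self.elapsed_time += self.dt;
            self.updates += 1;
            // Setting `*lag = 0.0` here ends the catch-up loop early.
            update(self.dt, &mut self.lag);
        }
    }
}

fn main() {
    let mut clock = Clock::new(1.0 / 60.0);
    // A slow 50 ms frame is worked off in several fixed-size updates.
    clock.advance(0.05, |_dt, _lag| {});
    println!("updates: {}, elapsed: {}", clock.updates, clock.elapsed_time);
}
```

Resetting lag inside the callback is the "don't catch up" behaviour described for heavy calculations such as level loading.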

Editor and Plugins

When you run your game from the editor, the editor starts the game as a separate process and, if there's a scene opened in the editor, tells the game instance to load it on startup. Let's look closely at the Game::new method:

```rust
extern crate fyrox;
use fyrox::{
    core::{futures::executor::block_on, pool::Handle},
    plugin::PluginContext,
    scene::{Scene, SceneLoader},
};
use std::path::Path;

struct Foo {
    scene: Handle<Scene>,
}

impl Foo {
    pub fn new(scene_path: Option<&str>, context: PluginContext) -> Self {
        context
            .async_scene_loader
            .request(scene_path.unwrap_or("data/scene.rgs"));

        Self { scene: Handle::NONE }
    }
}
```

The scene_path parameter is the path to the scene that is currently opened in the editor. Your game should use it if it needs to load the editor's currently selected scene. However, this is not strictly necessary: you may want to always start your game from a specific scene, even when the game is started from the editor. If the parameter is None, then either there is no scene loaded in the editor or the game was run outside the editor.
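The two policies above fit in one small std-only function. The helper startup_scene and its follow_editor flag are hypothetical. The fallback "data/scene.rgs" is the default path from the Game::new() example, and "data/level2.rgs" is a made-up editor scene.

```rust
// Default scene path, as used in the Game::new() example above.
const DEFAULT_SCENE: &str = "data/scene.rgs";

// Hypothetical helper: picks the scene to load on startup.
// `follow_editor` chooses between "use the editor's scene" and "always start here".
fn startup_scene<'a>(scene_path: Option<&'a str>, follow_editor: bool) -> &'a str {
    match scene_path {
        // A scene is opened in the editor and the game wants to follow it.
        Some(path) if follow_editor => path,
        // No scene in the editor, the game runs standalone, or the game
        // deliberately ignores the editor's selection.
        _ => DEFAULT_SCENE,
    }
}

fn main() {
    // "data/level2.rgs" is a made-up path standing in for the editor's scene.
    println!("{}", startup_scene(Some("data/level2.rgs"), true));
    println!("{}", startup_scene(None, true));
}
```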

Executor

Executor is a simple wrapper that drives your game plugins; it is intended to be used for production builds of your game. The editor runs the executor in a separate process when you enter play mode. Basically, there is no significant difference between running the game from the editor and running it as a separate application. The main difference is that the editor passes the scene_path parameter to the executor when entering play mode.

Usage

The executor is meant to be a part of your project's workspace; it typically looks something like this:

```rust
extern crate fyrox;
use fyrox::{
    core::{pool::Handle, uuid::Uuid},
    engine::executor::Executor,
    plugin::{Plugin, PluginConstructor, PluginContext},
    scene::Scene,
};

struct GameConstructor;

impl PluginConstructor for GameConstructor {
    fn create_instance(
        &self,
        _scene_path: Option<&str>,
        _context: PluginContext,
    ) -> Box<dyn Plugin> {
        todo!()
    }
}

fn main() {
    let mut executor = Executor::new();
    // Register your game constructor here.
    executor.add_plugin_constructor(GameConstructor);
    executor.run()
}
```

The executor has full access to the engine, and through it to the main application window. You can freely change the parts you need: Executor implements the Deref<Target = Engine> + DerefMut traits, so you can use its instance as an "alias" to the engine instance.

To add a plugin to the executor, use the add_plugin_constructor method; it accepts any entity that implements the PluginConstructor trait.
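Both properties of the executor can be sketched with std only: the Deref/DerefMut "alias" to the engine, and a generic method that accepts any constructor type and stores it as a trait object. Engine, Executor and MiniConstructor below are hypothetical, heavily reduced types, not Fyrox's. In particular, the window_title field is made up for the example.

```rust
use std::ops::{Deref, DerefMut};

// Hypothetical reduced types; not Fyrox's real Engine or PluginConstructor.
struct Engine {
    window_title: String,
}

trait MiniConstructor {
    fn name(&self) -> &'static str;
}

struct Executor {
    engine: Engine,
    constructors: Vec<Box<dyn MiniConstructor>>,
}

impl Executor {
    fn new() -> Self {
        Self {
            engine: Engine { window_title: "untitled".to_string() },
            constructors: Vec::new(),
        }
    }

    // Accepts any type implementing the trait and stores it as a trait object.
    fn add_plugin_constructor<P: MiniConstructor + 'static>(&mut self, constructor: P) {
        self.constructors.push(Box::new(constructor));
    }
}

// With Deref/DerefMut, `executor.window_title` resolves to `executor.engine.window_title`.
impl Deref for Executor {
    type Target = Engine;
    fn deref(&self) -> &Engine {
        &self.engine
    }
}

impl DerefMut for Executor {
    fn deref_mut(&mut self) -> &mut Engine {
        &mut self.engine
    }
}

struct GameConstructor;

impl MiniConstructor for GameConstructor {
    fn name(&self) -> &'static str {
        "Game"
    }
}

fn main() {
    let mut executor = Executor::new();
    executor.add_plugin_constructor(GameConstructor);
    // Goes through DerefMut to the inner engine.
    executor.window_title = "My Game".to_string();
    println!("{} with {} plugin(s)", executor.window_title, executor.constructors.len());
}
```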

Typical Use Cases

This section covers typical use cases for the Executor.

Setting Window Title

You can set the window title when creating the executor instance:

```rust
extern crate fyrox;
use fyrox::engine::executor::Executor;
use fyrox::window::WindowAttributes;
use fyrox::engine::GraphicsContextParams;
use fyrox::event_loop::EventLoop;

fn main() {
    let executor = Executor::from_params(
        EventLoop::new().unwrap(),
        GraphicsContextParams {
            window_attributes: WindowAttributes {
                title: "My Game".to_string(),
                ..Default::default()
            },
            vsync: true,
        },
    );
}
```

Scripts

A script is a container for game data and logic that can be assigned to a scene node. Fyrox uses Rust for scripting, so scripts are as fast as native code.

When to Use Scripts and When Not

Scripts are meant to add data and some logic to scene nodes. That being said, you should not use scripts to hold the global state of your game (use your game plugin for that). For example, use scripts for your game items, bots, player, level, etc. On the other hand, do not use scripts for leaderboards, game menus, progress information, etc.

Also, scripts cannot be assigned to UI widgets, because the game and the UI are intentionally decoupled. All user interface components should be created and handled in the game plugin of your game.

Script Structure

A typical script looks something like this:

```rust
extern crate fyrox;
use fyrox::{
    core::{
        uuid::{Uuid, uuid},
        visitor::prelude::*,
        reflect::prelude::*,
        TypeUuidProvider,
    },
    event::Event,
    impl_component_provider,
    scene::graph::map::NodeHandleMap,
    script::{ScriptContext, ScriptDeinitContext, ScriptTrait},
};

#[derive(Visit, Reflect, Default, Debug, Clone)]
struct MyScript {
    // Add fields here.
}

impl_component_provider!(MyScript);

impl TypeUuidProvider for MyScript {
    fn type_uuid() -> Uuid {
        uuid!("bf0f9804-56cb-4a2e-beba-93d75371a568")
    }
}

impl ScriptTrait for MyScript {
    fn on_init(&mut self, context: &mut ScriptContext) {
        // Put initialization logic here.
    }

    fn on_start(&mut self, context: &mut ScriptContext) {
        // Put start logic - it is called when every other script is already initialized.
    }

    fn on_deinit(&mut self, context: &mut ScriptDeinitContext) {
        // Put de-initialization logic here.
    }

    fn on_os_event(&mut self, event: &Event<()>, context: &mut ScriptContext) {
        // Respond to OS events here.
    }

    fn on_update(&mut self, context: &mut ScriptContext) {
        // Put object logic here.
    }

    fn id(&self) -> Uuid {
        Self::type_uuid()
    }
}
```
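The comment in on_start says it runs once every other script is already initialized. A std-only model of that guarantee uses two passes over all scripts: every on_init first, then every on_start. Script and Named are hypothetical reduced types. The real ScriptTrait methods take a ScriptContext, and the engine's actual scheduling may differ in details.

```rust
// Hypothetical reduced script trait: the real ScriptTrait methods take contexts.
trait Script {
    fn on_init(&mut self, log: &mut Vec<String>);
    fn on_start(&mut self, log: &mut Vec<String>);
}

struct Named(&'static str);

impl Script for Named {
    fn on_init(&mut self, log: &mut Vec<String>) {
        log.push(format!("init {}", self.0));
    }

    fn on_start(&mut self, log: &mut Vec<String>) {
        log.push(format!("start {}", self.0));
    }
}

// Two passes: every script is initialized before any script starts,
// so on_start can rely on all other scripts having run on_init.
fn initialize_all(scripts: &mut [Box<dyn Script>]) -> Vec<String> {
    let mut log = Vec::new();
    for script in scripts.iter_mut() {
        script.on_init(&mut log);
    }
    for script in scripts.iter_mut() {
        script.on_start(&mut log);
    }
    log
}

fn main() {
    let mut scripts: Vec<Box<dyn Script>> = vec![Box::new(Named("player")), Box::new(Named("bot"))];
    for line in initialize_all(&mut scripts) {
        println!("{line}");
    }
}
```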

Each script must implement the following traits:

- Visit - implements serialization/deserialization functionality; the editor uses it to save your object to a scene file.
- Reflect - implements compile-time reflection, which provides a way to iterate over script fields, set their values, find fields by their paths, etc.
- Debug - provides debugging functionality; it mostly lets the editor turn the structure and its fields into a string.
- Clone - makes your structure cloneable: since scene objects can be cloned, the script instances attached to them must be cloneable too.
- Default - this implementation is very important: the scripting system uses it to create your scripts in the default state, before any data is set on them. If your script needs special default values, you can always write your own Default implementation.
- TypeUuidProvider - attaches a unique ID to your type. Every script must have a unique ID, otherwise the engine will not be able to save and load your scripts. To generate a new UUID, use the Online UUID Generator or any other tool that can generate UUIDs.
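The sketch below shows why a hand-written Default can matter. A derived Default would give every numeric field zero, which is often not a usable starting state. The Turret script and the create_script factory are hypothetical. The factory stands in for the scripting system creating scripts in their default state. Visit and Reflect come from Fyrox and are left out, so the sketch stays std-only.

```rust
// Hypothetical script data; the derives mirror the required Clone and Debug traits
// (PartialEq is only added so the values can be compared).
#[derive(Debug, Clone, PartialEq)]
struct Turret {
    fire_rate: f32,
    ammo: u32,
}

// A hand-written Default: a derived one would give fire_rate 0.0 and ammo 0,
// which would make a freshly assigned turret useless.
impl Default for Turret {
    fn default() -> Self {
        Self { fire_rate: 2.0, ammo: 100 }
    }
}

// Hypothetical factory, standing in for how the scripting system creates
// a script in its default state before any data is set on it.
fn create_script<T: Default>() -> T {
    T::default()
}

fn main() {
    let turret: Turret = create_script();
    // Cloning gives an independent copy, as when a scene node is cloned.
    let mut copy = turret.clone();
    copy.ammo = 5;
    println!("{:?} / {:?}", turret, copy);
}
```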

Script Template Generator

You can use the fyrox-template tool to generate all the required boilerplate code for a new script, which makes adding new scripts much less tedious. To generate a new script, use the script command:

```shell
fyrox-template script --name MyScript
```

It will create a new file named my_script.rs in the game/src directory and fill it with the required code. Do not forget to add the module with the new script to lib.rs like this:

```rust
// Use your script name instead of `my_script` here.
pub mod my_script;
```
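The module and file name follow Rust's snake_case convention, so the MyScript name maps to my_script. The hypothetical helper below sketches that mapping for simple CamelCase names. It is not the template tool's own code, which may treat acronyms and digits differently.

```rust
// Converts a CamelCase script name into a snake_case module/file name,
// e.g. "MyScript" -> "my_script". Hypothetical helper for illustration only.
fn module_name(script_name: &str) -> String {
    let mut out = String::new();
    for (i, ch) in script_name.chars().enumerate() {
        if ch.is_uppercase() {
            // Separate words with '_', except before the very first letter.
            if i > 0 {
                out.push('_');
            }
            out.extend(ch.to_lowercase());
        } else {
            out.push(ch);
        }
    }
    out
}

fn main() {
    let name = module_name("MyScript");
    println!("file: game/src/{name}.rs, declaration: pub mod {name};");
}
```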

Comments in each generated method should help you figure out which code should be placed where and what the purpose of every method is.

⚠️ Keep in mind that every new script must be registered in PluginConstructor::register, otherwise you won't be able to assign the script to a node in the editor. See the next section for more info.

Script Registration

Every script must be registered before use, otherwise the engine won't "see" your script and won't let you assign it to an object. The PluginConstructor trait has the register method exactly for script registration. To register a script, add it to the list of script constructors like so:

```rust
extern crate fyrox;
use fyrox::{
    scene::Scene,
    plugin::{Plugin, PluginConstructor, PluginContext, PluginRegistrationContext},
    core::{
        visitor::prelude::*,
        reflect::prelude::*,
        pool::Handle,
        uuid::Uuid,
        TypeUuidProvider,
    },
    impl_component_provider,
    script::ScriptTrait,
};

#[derive(Reflect, Visit, Default, Copy, Clone, Debug)]
struct MyScript;

impl TypeUuidProvider for MyScript {
    fn type_uuid() -> Uuid {
        todo!()
    }
}

impl_component_provider!(MyScript);

impl ScriptTrait for MyScript {
    fn id(&self) -> Uuid {
        todo!()
    }
}

struct Constructor;

impl PluginConstructor for Constructor {
    fn register(&self, context: PluginRegistrationContext) {
        context
            .serialization_context
            .script_constructors
            .add::<MyScript>("My Script");
    }

    fn create_instance(&self, _scene_path: Option<&str>, _context: PluginContext) -> Box<dyn Plugin> {
        todo!()
    }
}
```

Every script type (MyScript in the code snippet above; replace it with your script type) must be registered using the ScriptConstructorsContainer::add method, which accepts the script type as a generic argument and a name that will be shown in the editor. The name can be arbitrary, because it is used only in the editor. You can also change it at any time without breaking existing scenes.
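Renaming is safe if saved scenes refer to a script by its type UUID rather than by its display name. That is an assumption here, inferred from the TypeUuidProvider requirement above. The std-only registry below models it, with UUIDs written as u128. The types ScriptConstructors, TypeUuid and make are hypothetical reduced stand-ins, not Fyrox's ScriptConstructorsContainer API. In this sketch, registering the same type again only replaces its display name.

```rust
use std::collections::HashMap;

// Hypothetical script marker trait and UUID provider (UUIDs modelled as u128).
trait Script {}

trait TypeUuid {
    const UUID: u128;
}

#[derive(Default)]
struct MyScript;

impl Script for MyScript {}

impl TypeUuid for MyScript {
    // The UUID from the script example above, written as a u128 literal.
    const UUID: u128 = 0xbf0f9804_56cb_4a2e_beba_93d75371a568;
}

// A constructor builds a script in its default state.
type Constructor = fn() -> Box<dyn Script>;

fn make<T: Script + Default + 'static>() -> Box<dyn Script> {
    Box::new(T::default())
}

#[derive(Default)]
struct ScriptConstructors {
    // Type UUID -> (name shown in the editor, constructor).
    entries: HashMap<u128, (String, Constructor)>,
}

impl ScriptConstructors {
    fn add<T: Script + TypeUuid + Default + 'static>(&mut self, name: &str) {
        // Registering the same type again only replaces its display name.
        self.entries.insert(T::UUID, (name.to_string(), make::<T> as Constructor));
    }

    // Assumed scene loading path: look the script up by UUID, never by name.
    fn create(&self, uuid: u128) -> Option<Box<dyn Script>> {
        self.entries.get(&uuid).map(|(_, ctor)| ctor())
    }

    fn display_name(&self, uuid: u128) -> Option<&str> {
        self.entries.get(&uuid).map(|(name, _)| name.as_str())
    }
}

fn main() {
    let mut constructors = ScriptConstructors::default();
    constructors.add::<MyScript>("My Script");
    println!("{:?}", constructors.display_name(MyScript::UUID));
}
```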

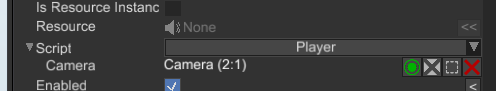

Script Attachment